This article looks at audio/video synchronization in ffmpeg, and specifically at the order in which audio and video are written to the output file.

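Every ffmpeg blog post I have seen so far handles synchronization during recording the same way: audio and video packets are interleaved in the file according to their timestamps.

That raises a question. Audio and video are two separate streams in ffmpeg, so can we write all of the recorded video first and then all of the recorded audio? I tested this, and it works.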

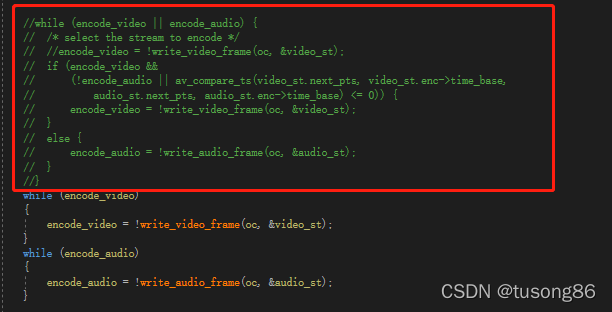

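First, the ffmpeg source tree contains doc/examples/muxing.c. I modified it to write the video first and then the audio (see image 1).

The part commented out inside the red box is the original logic. The two while loops below it write the video and the audio respectively. The result is fine: audio and video are in sync.

That example is too simple and involves very little data, so I wrote a separate test that records the desktop video and the system audio at the same time. I recorded for five minutes, wrote all of the desktop video to the file, and only then started writing the recorded system audio. The result was also good.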
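A plausible reason this works: every packet handed to the muxer carries its own stream index and timestamps, and a player schedules packets by those timestamps rather than by their position in the file. The sketch below models that idea without using ffmpeg at all. `DemoPacket`, `writeVideoThenAudio` and `presentationOrder` are hypothetical names introduced only for illustration, and timestamps are simplified to milliseconds.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for an encoded packet: only the fields that matter here.
struct DemoPacket {
    int streamIndex;  // 0 = video, 1 = audio
    int64_t ptsMs;    // presentation time, simplified to milliseconds
};

// File order used in this article: every video packet first, then every audio packet.
std::vector<DemoPacket> writeVideoThenAudio(int videoFrames, int videoIntervalMs,
                                            int audioFrames, int audioIntervalMs) {
    assert(videoIntervalMs > 0 && audioIntervalMs > 0);
    std::vector<DemoPacket> file;
    for (int i = 0; i < videoFrames; ++i)
        file.push_back({0, int64_t(i) * videoIntervalMs});
    for (int i = 0; i < audioFrames; ++i)
        file.push_back({1, int64_t(i) * audioIntervalMs});
    return file;
}

// Model of playback: packets are presented by timestamp, not by file position.
// stable_sort keeps the file order for packets that share a timestamp.
std::vector<DemoPacket> presentationOrder(std::vector<DemoPacket> file) {
    std::stable_sort(file.begin(), file.end(),
                     [](const DemoPacket& a, const DemoPacket& b) {
                         return a.ptsMs < b.ptsMs;
                     });
    return file;
}
```

With 3 video frames at 100 ms and 4 audio frames at 50 ms, the file order is V V V A A A A, while the presentation order interleaves the two streams again by time. A file laid out this way may require more seeking during playback, so treat this as a feasibility check rather than a recommended layout.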

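Before running this test, I had written a blog post that synchronizes audio and video the usual, interleaved way:

Recording desktop video and system audio with ffmpeg (audio/video synchronization)

Starting from that code, I made the following changes: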

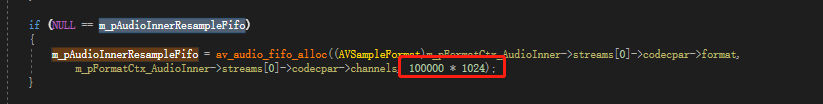

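First, the audio captured during the five minutes of recording is only written after the five minutes are over, so I deliberately create a fairly large buffer to hold the audio data.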
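To get a feel for how large "fairly large" needs to be, here is a small arithmetic sketch. It assumes the format configured in StartRecord() in the listing further down (44100 Hz, stereo, 16-bit samples) and the FIFO size of 100000 * 1024 passed to `av_audio_fifo_alloc`, whose third argument is a sample count per channel. The helper names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Samples per channel produced by `seconds` of audio at `sampleRate` Hz.
int64_t samplesNeeded(int seconds, int sampleRate) {
    assert(seconds >= 0 && sampleRate > 0);
    return int64_t(seconds) * sampleRate;
}

// Whole seconds of audio that fit in a FIFO holding `capacitySamples` samples per channel.
int64_t secondsOfCapacity(int64_t capacitySamples, int sampleRate) {
    assert(capacitySamples >= 0 && sampleRate > 0);
    return capacitySamples / sampleRate;
}

// Bytes occupied by `samples` samples per channel of PCM audio.
int64_t bytesForSamples(int64_t samples, int channels, int bytesPerSample) {
    assert(samples >= 0 && channels > 0 && bytesPerSample > 0);
    return samples * channels * bytesPerSample;
}
```

Under these assumptions, five minutes need `samplesNeeded(300, 44100)` = 13,230,000 samples per channel, while a FIFO of 100000 * 1024 = 102,400,000 samples holds about 2321 seconds (roughly 38 minutes) and occupies about 409,600,000 bytes. So the buffer is comfortably large for this test, at the cost of several hundred megabytes of memory.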

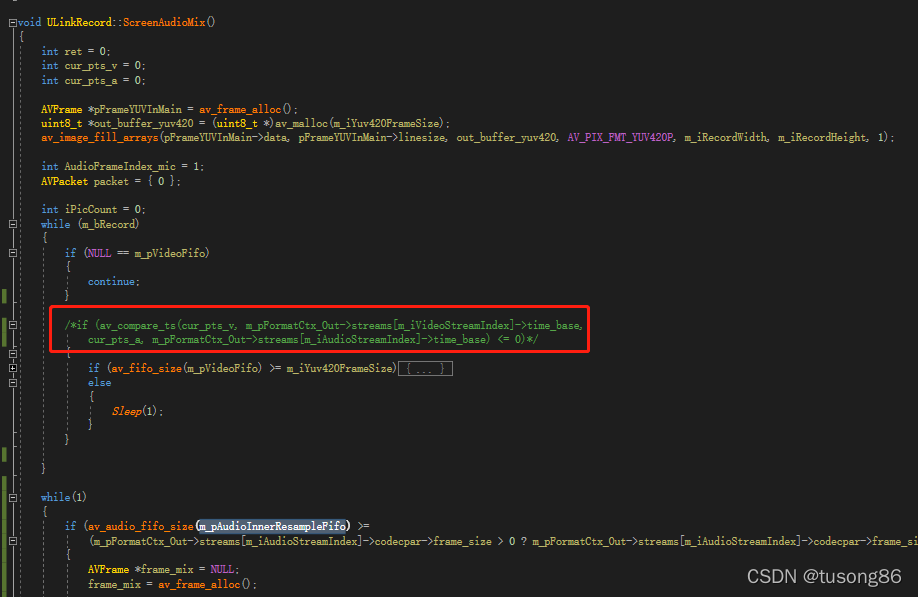

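Finally, when muxing, I write all of the video first and then the audio. Of the two loops below, the first one, while(m_bRecord), writes the video packets, and the while(1) that follows writes the audio packets. The audio/video timestamp comparison inside the red box is no longer needed and can be ignored.

The modified file ULinkRecord.cpp is given below. The other files are unchanged and are not repeated here; see the blog post

Recording desktop video and system audio with ffmpeg (audio/video synchronization)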
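Since the two loops no longer compare timestamps, synchronization depends entirely on each stream's own timestamps being correct. The sketch below shows the arithmetic behind them as I understand it from the listing: video timestamps come from a frame counter rescaled from the encoder time base (1/10 s at 10 fps) to the stream time base, and audio timestamps advance by one frame of samples in a 1/sample_rate time base. `TimeBase`, `rescale`, `videoPts` and `audioPts` are hypothetical, simplified stand-ins and not ffmpeg APIs; the real code uses `av_rescale_q_rnd`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical rational time base: one tick lasts num / den seconds.
struct TimeBase {
    int num;
    int den;
};

// Convert `value` ticks from one time base to another, rounding to nearest.
// Simplified: assumes non-negative input and no 64-bit overflow.
int64_t rescale(int64_t value, TimeBase from, TimeBase to) {
    assert(value >= 0 && from.num > 0 && from.den > 0 && to.num > 0 && to.den > 0);
    const int64_t numerator = value * from.num * to.den;
    const int64_t denominator = int64_t(from.den) * to.num;
    return (numerator + denominator / 2) / denominator;
}

// Video: the frame counter is in encoder ticks of 1 / frameRate seconds.
int64_t videoPts(int64_t frameIndex, int frameRate, TimeBase streamTimeBase) {
    return rescale(frameIndex, TimeBase{1, frameRate}, streamTimeBase);
}

// Audio: in a 1 / sampleRate time base, each frame advances by frameSize samples.
int64_t audioPts(int64_t frameIndex, int frameSize) {
    assert(frameIndex >= 0 && frameSize > 0);
    return frameIndex * frameSize;
}
```

For example, video frame 3000 at 10 fps lands at 300 seconds, and audio frame 12920 with 1024 samples per frame at 44100 Hz lands at about 300.002 seconds, so both streams describe the same five-minute timeline regardless of when they are written. The listing starts its audio frame counter at 1 rather than 0, which shifts the audio by one frame (about 23 ms).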

#include "ULinkRecord.h"

#include "log/log.h"

#include "appfun/appfun.h"
#include "CaptureScreen.h"

typedef struct BufferSourceContext {
    const AVClass *bscclass;
    AVFifoBuffer *fifo;
    AVRational time_base;     ///< time_base to set in the output link
    AVRational frame_rate;    ///< frame_rate to set in the output link
    unsigned nb_failed_requests;
    unsigned warning_limit;

    /* video only */
    int w, h;
    enum AVPixelFormat pix_fmt;
    AVRational pixel_aspect;
    char *sws_param;
    AVBufferRef *hw_frames_ctx;

    /* audio only */
    int sample_rate;
    enum AVSampleFormat sample_fmt;
    int channels;
    uint64_t channel_layout;
    char *channel_layout_str;
    int got_format_from_params;
    int eof;
} BufferSourceContext;

static char *dup_wchar_to_utf8(const wchar_t *w)
{
    char *s = NULL;
    int l = WideCharToMultiByte(CP_UTF8, 0, w, -1, 0, 0, 0, 0);
    s = (char *)av_malloc(l);
    if (s)
        WideCharToMultiByte(CP_UTF8, 0, w, -1, s, l, 0, 0);
    return s;
}

/* just pick the highest supported samplerate */
static int select_sample_rate(const AVCodec *codec)
{
    const int *p;
    int best_samplerate = 0;

    if (!codec->supported_samplerates)
        return 44100;

    p = codec->supported_samplerates;
    while (*p) {
        if (!best_samplerate || abs(44100 - *p) < abs(44100 - best_samplerate))
            best_samplerate = *p;
        p++;
    }
    return best_samplerate;
}

/* select layout with the highest channel count */
static int select_channel_layout(const AVCodec *codec)
{
    const uint64_t *p;
    uint64_t best_ch_layout = 0;
    int best_nb_channels = 0;

    if (!codec->channel_layouts)
        return AV_CH_LAYOUT_STEREO;

    p = codec->channel_layouts;
    while (*p) {
        int nb_channels = av_get_channel_layout_nb_channels(*p);
        if (nb_channels > best_nb_channels) {
            best_ch_layout = *p;
            best_nb_channels = nb_channels;
        }
        p++;
    }
    return best_ch_layout;
}

unsigned char clip_value(unsigned char x, unsigned char min_val, unsigned char max_val) {
    if (x > max_val) {
        return max_val;
    }
    else if (x < min_val) {
        return min_val;
    }
    else {
        return x;
    }
}

//RGB to YUV420
bool RGB24_TO_YUV420(unsigned char *RgbBuf, int w, int h, unsigned char *yuvBuf)
{
    unsigned char*ptrY, *ptrU, *ptrV, *ptrRGB;
    memset(yuvBuf, 0, w*h * 3 / 2);
    ptrY = yuvBuf;
    ptrU = yuvBuf + w * h;
    ptrV = ptrU + (w*h * 1 / 4);
    unsigned char y, u, v, r, g, b;
    for (int j = h - 1; j >= 0; j--) {
        ptrRGB = RgbBuf + w * j * 3;
        for (int i = 0; i < w; i++) {
            b = *(ptrRGB++);
            g = *(ptrRGB++);
            r = *(ptrRGB++);
            y = (unsigned char)((66 * r + 129 * g + 25 * b + 128) >> 8) + 16;
            u = (unsigned char)((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128;
            v = (unsigned char)((112 * r - 94 * g - 18 * b + 128) >> 8) + 128;
            *(ptrY++) = clip_value(y, 0, 255);
            if (j % 2 == 0 && i % 2 == 0) {
                *(ptrU++) = clip_value(u, 0, 255);
            }
            else {
                if (i % 2 == 0) {
                    *(ptrV++) = clip_value(v, 0, 255);
                }
            }
        }
    }
    return true;

}

ULinkRecord::ULinkRecord()
{
    InitializeCriticalSection(&m_csVideoSection);
    InitializeCriticalSection(&m_csAudioInnerSection);
    InitializeCriticalSection(&m_csAudioInnerResampleSection);
    InitializeCriticalSection(&m_csAudioMicSection);
    InitializeCriticalSection(&m_csAudioMixSection);
    avdevice_register_all();
}

ULinkRecord::~ULinkRecord()
{
    DeleteCriticalSection(&m_csVideoSection);
    DeleteCriticalSection(&m_csAudioInnerSection);
    DeleteCriticalSection(&m_csAudioInnerResampleSection);
    DeleteCriticalSection(&m_csAudioMicSection);
    DeleteCriticalSection(&m_csAudioMixSection);
}

void ULinkRecord::SetRecordPath(const char* pRecordPath)
{
    m_strRecordPath = pRecordPath;
    if (!m_strRecordPath.empty())
    {
        if (m_strRecordPath[m_strRecordPath.length() - 1] != '\\')
        {
            m_strRecordPath = m_strRecordPath + "\\";
        }
    }
}

void ULinkRecord::SetRecordRect(RECT rectRecord)
{
    m_iRecordPosX = rectRecord.left;
    m_iRecordPosY = rectRecord.top;
    m_iRecordWidth = rectRecord.right - rectRecord.left;
    m_iRecordHeight = rectRecord.bottom - rectRecord.top;

}

int ULinkRecord::StartRecord()
{
    int iRet = -1;
    do
    {
        Clear();

        m_pAudioConvertCtx = swr_alloc();
        av_opt_set_channel_layout(m_pAudioConvertCtx, "in_channel_layout", AV_CH_LAYOUT_STEREO, 0);
        av_opt_set_channel_layout(m_pAudioConvertCtx, "out_channel_layout", AV_CH_LAYOUT_STEREO, 0);
        av_opt_set_int(m_pAudioConvertCtx, "in_sample_rate", 44100, 0);
        av_opt_set_int(m_pAudioConvertCtx, "out_sample_rate", 44100, 0);
        av_opt_set_sample_fmt(m_pAudioConvertCtx, "in_sample_fmt", AV_SAMPLE_FMT_S16, 0);
        //av_opt_set_sample_fmt(audio_convert_ctx, "in_sample_fmt", AV_SAMPLE_FMT_FLTP, 0);
        av_opt_set_sample_fmt(m_pAudioConvertCtx, "out_sample_fmt", AV_SAMPLE_FMT_FLTP, 0);
        iRet = swr_init(m_pAudioConvertCtx);

        m_pAudioInnerResampleCtx = swr_alloc();
        av_opt_set_channel_layout(m_pAudioInnerResampleCtx, "in_channel_layout", AV_CH_LAYOUT_STEREO, 0);
        av_opt_set_channel_layout(m_pAudioInnerResampleCtx, "out_channel_layout", AV_CH_LAYOUT_STEREO, 0);
        av_opt_set_int(m_pAudioInnerResampleCtx, "in_sample_rate", 48000, 0);
        av_opt_set_int(m_pAudioInnerResampleCtx, "out_sample_rate", 44100, 0);
        av_opt_set_sample_fmt(m_pAudioInnerResampleCtx, "in_sample_fmt", AV_SAMPLE_FMT_S16, 0);
        av_opt_set_sample_fmt(m_pAudioInnerResampleCtx, "out_sample_fmt", AV_SAMPLE_FMT_S16, 0);
        iRet = swr_init(m_pAudioInnerResampleCtx);

        if (OpenAudioInnerCapture() < 0)
        {
            break;
        }
        if (OpenOutPut() < 0)
        {
            break;
        }

        if (NULL == m_pAudioInnerResampleFifo)
        {
            m_pAudioInnerResampleFifo = av_audio_fifo_alloc((AVSampleFormat)m_pFormatCtx_AudioInner->streams[0]->codecpar->format,
                m_pFormatCtx_AudioInner->streams[0]->codecpar->channels, 100000 * 1024);
        }
        if (NULL == m_pAudioInnerFifo)
        {
            m_pAudioInnerFifo = av_audio_fifo_alloc((AVSampleFormat)m_pFormatCtx_AudioInner->streams[0]->codecpar->format,
                m_pFormatCtx_AudioInner->streams[0]->codecpar->channels, 3000 * 1024);
        }

        m_iYuv420FrameSize = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, m_iRecordWidth, m_iRecordHeight, 1);
        // allocate a buffer for 30 frames
        m_pVideoFifo = av_fifo_alloc(30 * m_iYuv420FrameSize);

        m_bRecord = true;
        m_hAudioInnerCapture = CreateThread(NULL, 0, AudioInnerCaptureProc, this, 0, NULL);
        m_hAudioInnerResample = CreateThread(NULL, 0, AudioInnerResampleProc, this, 0, NULL);
        m_hScreenCapture = CreateThread(NULL, 0, ScreenCaptureProc, this, 0, NULL);
        m_hScreenAudioMix = CreateThread(NULL, 0, ScreenAudioMixProc, this, 0, NULL);
        iRet = 0;
    } while (0);

    if (0 == iRet)
    {
        LOG_INFO("StartRecord succeed");
    }
    else
    {
        Clear();
    }
    return iRet;

}

void ULinkRecord::StopRecord()
{
    m_bRecord = false;
    if (m_hAudioInnerCapture == NULL && m_hAudioInnerResample == NULL && m_hScreenCapture == NULL && m_hScreenAudioMix == NULL)
    {
        return;
    }
    Sleep(1000);

    HANDLE hThreads[4];
    hThreads[0] = m_hAudioInnerCapture;
    hThreads[1] = m_hAudioInnerResample;
    hThreads[2] = m_hScreenCapture;
    hThreads[3] = m_hScreenAudioMix;
    WaitForMultipleObjects(4, hThreads, TRUE, INFINITE);

    CloseHandle(m_hAudioInnerCapture);
    m_hAudioInnerCapture = NULL;
    CloseHandle(m_hAudioInnerResample);
    m_hAudioInnerResample = NULL;
    if (m_hScreenCapture != NULL)
    {
        CloseHandle(m_hScreenCapture);
        m_hScreenCapture = NULL;
    }
    if (m_hScreenAudioMix != NULL)
    {
        CloseHandle(m_hScreenAudioMix);
        m_hScreenAudioMix = NULL;
    }
    Clear();
}

void ULinkRecord::SetMute(bool bMute)
{
    LOG_INFO("SetMute, bMute is %d", bMute);
    m_bMute = bMute;

}

int ULinkRecord::OpenAudioInnerCapture()
{
    int iRet = -1;
    do
    {
        // find the input format
        const AVInputFormat *pAudioInputFmt = av_find_input_format("dshow");

        // open the device through DirectShow and associate the input format with the format context
        //const char * psDevName = dup_wchar_to_utf8(L"audio=麦克风 (2- Synaptics HD Audio)");
        char * psDevName = dup_wchar_to_utf8(L"audio=virtual-audio-capturer");
        if (avformat_open_input(&m_pFormatCtx_AudioInner, psDevName, pAudioInputFmt, NULL) < 0)
        {
            LOG_ERROR("avformat_open_input failed, m_pFormatCtx_AudioInner");
            break;
        }
        if (avformat_find_stream_info(m_pFormatCtx_AudioInner, NULL) < 0)
        {
            break;
        }
        if (m_pFormatCtx_AudioInner->streams[0]->codecpar->codec_type != AVMEDIA_TYPE_AUDIO)
        {
            LOG_ERROR("Couldn't find audio stream information");
            break;
        }

        const AVCodec *tmpCodec = avcodec_find_decoder(m_pFormatCtx_AudioInner->streams[0]->codecpar->codec_id);
        m_pReadCodecCtx_AudioInner = avcodec_alloc_context3(tmpCodec);
        m_pReadCodecCtx_AudioInner->sample_rate = m_pFormatCtx_AudioInner->streams[0]->codecpar->sample_rate;
        m_pReadCodecCtx_AudioInner->channel_layout = select_channel_layout(tmpCodec);
        m_pReadCodecCtx_AudioInner->channels = av_get_channel_layout_nb_channels(m_pReadCodecCtx_AudioInner->channel_layout);
        m_pReadCodecCtx_AudioInner->sample_fmt = (AVSampleFormat)m_pFormatCtx_AudioInner->streams[0]->codecpar->format;
        //pReadCodecCtx_Audio->sample_fmt = AV_SAMPLE_FMT_FLTP;
        if (0 > avcodec_open2(m_pReadCodecCtx_AudioInner, tmpCodec, NULL))
        {
            LOG_ERROR("avcodec_open2 failed, m_pReadCodecCtx_AudioInner");
            break;
        }
        avcodec_parameters_from_context(m_pFormatCtx_AudioInner->streams[0]->codecpar, m_pReadCodecCtx_AudioInner);
        iRet = 0;
    } while (0);

    if (iRet != 0)
    {
        if (m_pReadCodecCtx_AudioInner != NULL)
        {
            avcodec_free_context(&m_pReadCodecCtx_AudioInner);
            m_pReadCodecCtx_AudioInner = NULL;
        }
        if (m_pFormatCtx_AudioInner != NULL)
        {
            avformat_close_input(&m_pFormatCtx_AudioInner);
            m_pFormatCtx_AudioInner = NULL;
        }
    }
    return iRet;

}

int ULinkRecord::OpenOutPut()
{
    std::string strFileName = m_strRecordPath;
    m_strFilePrefix = strFileName + time_tSimpleString(time(NULL));
    int iRet = -1;
    AVStream *pAudioStream = NULL;
    AVStream *pVideoStream = NULL;
    do
    {
        std::string strFileName = m_strRecordPath;
        strFileName += time_tSimpleString(time(NULL));
        strFileName += ".mp4";
        const char *outFileName = strFileName.c_str();
        avformat_alloc_output_context2(&m_pFormatCtx_Out, NULL, NULL, outFileName);

        {
            m_iVideoStreamIndex = 0;
            AVCodec* pCodecEncode_Video = (AVCodec *)avcodec_find_encoder(m_pFormatCtx_Out->oformat->video_codec);
            m_pCodecEncodeCtx_Video = avcodec_alloc_context3(pCodecEncode_Video);
            if (!m_pCodecEncodeCtx_Video)
            {
                LOG_ERROR("avcodec_alloc_context3 failed, m_pCodecEncodeCtx_Audio");
                break;
            }
            pVideoStream = avformat_new_stream(m_pFormatCtx_Out, pCodecEncode_Video);
            if (!pVideoStream)
            {
                break;
            }

            int frameRate = 10;
            m_pCodecEncodeCtx_Video->flags |= AV_CODEC_FLAG_QSCALE;
            m_pCodecEncodeCtx_Video->bit_rate = 4000000;
            m_pCodecEncodeCtx_Video->rc_min_rate = 4000000;
            m_pCodecEncodeCtx_Video->rc_max_rate = 4000000;
            m_pCodecEncodeCtx_Video->bit_rate_tolerance = 4000000;
            m_pCodecEncodeCtx_Video->time_base.den = frameRate;
            m_pCodecEncodeCtx_Video->time_base.num = 1;
            m_pCodecEncodeCtx_Video->width = m_iRecordWidth;
            m_pCodecEncodeCtx_Video->height = m_iRecordHeight;
            //pH264Encoder->pCodecCtx->frame_number = 1;
            m_pCodecEncodeCtx_Video->gop_size = 12;
            m_pCodecEncodeCtx_Video->max_b_frames = 0;
            m_pCodecEncodeCtx_Video->thread_count = 4;
            m_pCodecEncodeCtx_Video->pix_fmt = AV_PIX_FMT_YUV420P;
            m_pCodecEncodeCtx_Video->codec_id = AV_CODEC_ID_H264;
            m_pCodecEncodeCtx_Video->codec_type = AVMEDIA_TYPE_VIDEO;
            av_opt_set(m_pCodecEncodeCtx_Video->priv_data, "b-pyramid", "none", 0);
            av_opt_set(m_pCodecEncodeCtx_Video->priv_data, "preset", "superfast", 0);
            av_opt_set(m_pCodecEncodeCtx_Video->priv_data, "tune", "zerolatency", 0);
            if (m_pFormatCtx_Out->oformat->flags & AVFMT_GLOBALHEADER)
                m_pCodecEncodeCtx_Video->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
            if (avcodec_open2(m_pCodecEncodeCtx_Video, pCodecEncode_Video, 0) < 0)
            {
                // failed to open the encoder; bail out
                LOG_ERROR("avcodec_open2 failed, m_pCodecEncodeCtx_Audio");
                break;
            }
        }

        if (m_pFormatCtx_AudioInner->streams[0]->codecpar->codec_type == AVMEDIA_TYPE_AUDIO)
        {
            pAudioStream = avformat_new_stream(m_pFormatCtx_Out, NULL);
            m_iAudioStreamIndex = 1;
            m_pCodecEncode_Audio = (AVCodec *)avcodec_find_encoder(m_pFormatCtx_Out->oformat->audio_codec);
            m_pCodecEncodeCtx_Audio = avcodec_alloc_context3(m_pCodecEncode_Audio);
            if (!m_pCodecEncodeCtx_Audio)
            {
                LOG_ERROR("avcodec_alloc_context3 failed, m_pCodecEncodeCtx_Audio");
                break;
            }
            //pCodecEncodeCtx_Audio->codec_id = pFormatCtx_Out->oformat->audio_codec;
            m_pCodecEncodeCtx_Audio->sample_fmt = m_pCodecEncode_Audio->sample_fmts ? m_pCodecEncode_Audio->sample_fmts[0] : AV_SAMPLE_FMT_FLTP;
            m_pCodecEncodeCtx_Audio->bit_rate = 64000;
            m_pCodecEncodeCtx_Audio->sample_rate = 44100;
            m_pCodecEncodeCtx_Audio->channel_layout = AV_CH_LAYOUT_STEREO;
            m_pCodecEncodeCtx_Audio->channels = av_get_channel_layout_nb_channels(m_pCodecEncodeCtx_Audio->channel_layout);

            AVRational timeBase;
            timeBase.den = m_pCodecEncodeCtx_Audio->sample_rate;
            timeBase.num = 1;
            pAudioStream->time_base = timeBase;
            if (avcodec_open2(m_pCodecEncodeCtx_Audio, m_pCodecEncode_Audio, 0) < 0)
            {
                // failed to open the encoder; bail out
                LOG_ERROR("avcodec_open2 failed, m_pCodecEncodeCtx_Audio");
                break;
            }
        }

        if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))
        {
            if (avio_open(&m_pFormatCtx_Out->pb, outFileName, AVIO_FLAG_WRITE) < 0)
            {
                LOG_ERROR("avio_open failed, m_pFormatCtx_Out->pb");
                break;
            }
        }
        avcodec_parameters_from_context(pVideoStream->codecpar, m_pCodecEncodeCtx_Video);
        avcodec_parameters_from_context(pAudioStream->codecpar, m_pCodecEncodeCtx_Audio);
        if (avformat_write_header(m_pFormatCtx_Out, NULL) < 0)
        {
            LOG_ERROR("avio_open avformat_write_header,m_pFormatCtx_Out");
            break;
        }
        iRet = 0;
    } while (0);

    if (iRet != 0)
    {
        if (m_pCodecEncodeCtx_Video != NULL)
        {
            avcodec_free_context(&m_pCodecEncodeCtx_Video);
            m_pCodecEncodeCtx_Video = NULL;
        }
        if (m_pCodecEncodeCtx_Audio != NULL)
        {
            avcodec_free_context(&m_pCodecEncodeCtx_Audio);
            m_pCodecEncodeCtx_Audio = NULL;
        }
        if (m_pFormatCtx_Out != NULL)
        {
            avformat_free_context(m_pFormatCtx_Out);
            m_pFormatCtx_Out = NULL;
        }
    }
    return iRet;

}

void ULinkRecord::Clear()
{
    if (m_pReadCodecCtx_AudioInner)
    {
        avcodec_free_context(&m_pReadCodecCtx_AudioInner);
        m_pReadCodecCtx_AudioInner = NULL;
    }
    if (m_pReadCodecCtx_AudioMic != NULL)
    {
        avcodec_free_context(&m_pReadCodecCtx_AudioMic);
        m_pReadCodecCtx_AudioMic = NULL;
    }
    if (m_pCodecEncodeCtx_Video != NULL)
    {
        avcodec_free_context(&m_pCodecEncodeCtx_Video);
        m_pCodecEncodeCtx_Video = NULL;
    }
    if (m_pCodecEncodeCtx_Audio != NULL)
    {
        avcodec_free_context(&m_pCodecEncodeCtx_Audio);
        m_pCodecEncodeCtx_Audio = NULL;
    }
    if (m_pAudioConvertCtx != NULL)
    {
        swr_free(&m_pAudioConvertCtx);
        m_pAudioConvertCtx = NULL;
    }
    if (m_pAudioInnerResampleCtx != NULL)
    {
        swr_free(&m_pAudioInnerResampleCtx);
        m_pAudioInnerResampleCtx = NULL;
    }
    if (m_pVideoFifo != NULL)
    {
        av_fifo_free(m_pVideoFifo);
        m_pVideoFifo = NULL;
    }
    if (m_pAudioInnerFifo != NULL)
    {
        av_audio_fifo_free(m_pAudioInnerFifo);
        m_pAudioInnerFifo = NULL;
    }
    if (m_pAudioInnerResampleFifo != NULL)
    {
        av_audio_fifo_free(m_pAudioInnerResampleFifo);
        m_pAudioInnerResampleFifo = NULL;
    }
    if (m_pFormatCtx_AudioInner != NULL)
    {
        avformat_close_input(&m_pFormatCtx_AudioInner);
        m_pFormatCtx_AudioInner = NULL;
    }
    if (m_pFormatCtx_Out != NULL)
    {
        avformat_free_context(m_pFormatCtx_Out);
        m_pFormatCtx_Out = NULL;
    }
    if (m_hAudioInnerCapture != NULL)
    {
        CloseHandle(m_hAudioInnerCapture);
        m_hAudioInnerCapture = NULL;
    }
    if (m_hAudioInnerResample != NULL)
    {
        CloseHandle(m_hAudioInnerResample);
        m_hAudioInnerResample = NULL;
    }
    if (m_hScreenCapture != NULL)
    {
        CloseHandle(m_hScreenCapture);
        m_hScreenCapture = NULL;
    }
    if (m_hScreenAudioMix != NULL)
    {
        CloseHandle(m_hScreenAudioMix);
        m_hScreenAudioMix = NULL;
    }

}

DWORD WINAPI ULinkRecord::AudioInnerCaptureProc(LPVOID lpParam)
{
    ULinkRecord *pULinkRecord = (ULinkRecord *)lpParam;
    if (pULinkRecord != NULL)
    {
        pULinkRecord->AudioInnerCapture();
    }
    return 0;
}

void ULinkRecord::AudioInnerCapture()
{
    AVFrame *pFrame;
    pFrame = av_frame_alloc();
    AVPacket packet = { 0 };
    int ret = 0;
    while (m_bRecord)
    {
        av_packet_unref(&packet);
        ret = av_read_frame(m_pFormatCtx_AudioInner, &packet);
        if (ret < 0)
        {
            //LOG_INFO("AudioInnerCapture, av_read_frame, ret is %d", ret);
            continue;
        }
        ret = avcodec_send_packet(m_pReadCodecCtx_AudioInner, &packet);
        if (ret >= 0)
        {
            ret = avcodec_receive_frame(m_pReadCodecCtx_AudioInner, pFrame);
            if (ret == AVERROR(EAGAIN))
            {
                //LOG_INFO("AudioInnerCapture, avcodec_receive_frame, ret is %d", ret);
                continue;
            }
            else if (ret == AVERROR_EOF)
            {
                break;
            }
            else if (ret < 0) {
                LOG_ERROR("avcodec_receive_frame in AudioInnerCapture error");
                break;
            }

            int buf_space = av_audio_fifo_space(m_pAudioInnerFifo);
            if (buf_space >= pFrame->nb_samples)
            {
                //AudioSection
                EnterCriticalSection(&m_csAudioInnerSection);
                ret = av_audio_fifo_write(m_pAudioInnerFifo, (void **)pFrame->data, pFrame->nb_samples);
                LeaveCriticalSection(&m_csAudioInnerSection);
            }
            av_packet_unref(&packet);
        }
    }
    LOG_INFO("AudioInnerCapture end");
    av_frame_free(&pFrame);

}

DWORD WINAPI ULinkRecord::AudioInnerResampleProc(LPVOID lpParam)
{
    ULinkRecord *pULinkRecord = (ULinkRecord *)lpParam;
    if (pULinkRecord != NULL)
    {
        pULinkRecord->AudioInnerResample();
    }
    return 0;
}

void ULinkRecord::AudioInnerResample()
{
    int ret = 0;
    while (1)
    {
        if (av_audio_fifo_size(m_pAudioInnerFifo) >=
            (m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size > 0 ? m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size : 1024))
        {
            AVFrame *frame_audio_inner = NULL;
            frame_audio_inner = av_frame_alloc();
            frame_audio_inner->nb_samples = m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size > 0 ? m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size : 1024;
            frame_audio_inner->channel_layout = m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->channel_layout;
            frame_audio_inner->format = m_pFormatCtx_AudioInner->streams[0]->codecpar->format;
            frame_audio_inner->sample_rate = m_pFormatCtx_AudioInner->streams[0]->codecpar->sample_rate;
            av_frame_get_buffer(frame_audio_inner, 0);

            EnterCriticalSection(&m_csAudioInnerSection);
            int readcount = av_audio_fifo_read(m_pAudioInnerFifo, (void **)frame_audio_inner->data,
                (m_pFormatCtx_AudioInner->streams[0]->codecpar->frame_size > 0 ? m_pFormatCtx_AudioInner->streams[0]->codecpar->frame_size : 1024));
            LeaveCriticalSection(&m_csAudioInnerSection);

            AVFrame *frame_audio_inner_resample = NULL;
            frame_audio_inner_resample = av_frame_alloc();
            //int iDelaySamples = swr_get_delay(audio_convert_ctx, frame_mic->sample_rate);
            int iDelaySamples = 0;
            //int dst_nb_samples = av_rescale_rnd(iDelaySamples + frame_mic->nb_samples, frame_mic->sample_rate, pCodecEncodeCtx_Audio->sample_rate, AVRounding(1));
            int dst_nb_samples = av_rescale_rnd(iDelaySamples + frame_audio_inner->nb_samples, m_pCodecEncodeCtx_Audio->sample_rate, frame_audio_inner->sample_rate, AV_ROUND_UP);
            frame_audio_inner_resample->nb_samples = m_pCodecEncodeCtx_Audio->frame_size;
            frame_audio_inner_resample->channel_layout = m_pCodecEncodeCtx_Audio->channel_layout;
            frame_audio_inner_resample->format = m_pFormatCtx_AudioInner->streams[0]->codecpar->format;
            frame_audio_inner_resample->sample_rate = m_pCodecEncodeCtx_Audio->sample_rate;
            av_frame_get_buffer(frame_audio_inner_resample, 0);

            uint8_t* out_buffer = (uint8_t*)frame_audio_inner_resample->data[0];
            int nb = swr_convert(m_pAudioInnerResampleCtx, &out_buffer, dst_nb_samples, (const uint8_t**)frame_audio_inner->data, frame_audio_inner->nb_samples);

            //if (av_audio_fifo_space(fifo_audio_resample) >= pFrame->nb_samples)
            {
                EnterCriticalSection(&m_csAudioInnerResampleSection);
                ret = av_audio_fifo_write(m_pAudioInnerResampleFifo, (void **)frame_audio_inner_resample->data, dst_nb_samples);
                LeaveCriticalSection(&m_csAudioInnerResampleSection);
            }
            av_frame_free(&frame_audio_inner);
            av_frame_free(&frame_audio_inner_resample);

            if (!m_bRecord)
            {
                if (av_audio_fifo_size(m_pAudioInnerFifo) < 1024)
                {
                    break;
                }
            }
        }
        else
        {
            Sleep(1);
            if (!m_bRecord)
            {
                break;
            }
        }
    }

}

DWORD WINAPI ULinkRecord::ScreenCaptureProc(LPVOID lpParam)
{
    ULinkRecord *pULinkRecord = (ULinkRecord *)lpParam;
    if (pULinkRecord != NULL)
    {
        pULinkRecord->ScreenCapture();
    }
    return 0;
}

void ULinkRecord::ScreenCapture()
{
    CCaptureScreen* ccs = new CCaptureScreen();
    ccs->Init(m_iRecordPosX, m_iRecordPosY, m_iRecordWidth, m_iRecordHeight);

    AVFrame *pFrameYUV = av_frame_alloc();
    pFrameYUV->format = AV_PIX_FMT_YUV420P;
    pFrameYUV->width = m_iRecordWidth;
    pFrameYUV->height = m_iRecordHeight;

    int y_size = m_iRecordWidth * m_iRecordHeight;
    int frame_size = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, m_iRecordWidth, m_iRecordHeight, 1);
    BYTE* out_buffer_yuv420 = new BYTE[frame_size];
    av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer_yuv420, AV_PIX_FMT_YUV420P, m_iRecordWidth, m_iRecordHeight, 1);

    AVPacket* packet = av_packet_alloc();
    m_iFrameNumber = 0;
    int ret = 0;
    DWORD dwBeginTime = ::GetTickCount();
    while(m_bRecord)
    {
        BYTE* frameimage = ccs->CaptureImage();
        RGB24_TO_YUV420(frameimage, m_iRecordWidth, m_iRecordHeight, out_buffer_yuv420);
        //Sframe->pkt_dts = frame->pts = frameNumber * avCodecCtx_Out->time_base.num * avStream->time_base.den / (avCodecCtx_Out->time_base.den * avStream->time_base.num);
        pFrameYUV->pkt_dts = pFrameYUV->pts = av_rescale_q_rnd(m_iFrameNumber, m_pCodecEncodeCtx_Video->time_base, m_pFormatCtx_Out->streams[m_iVideoStreamIndex]->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
        pFrameYUV->pkt_duration = 0;
        pFrameYUV->pkt_pos = -1;

        if (av_fifo_space(m_pVideoFifo) >= frame_size)
        {
            EnterCriticalSection(&m_csVideoSection);
            av_fifo_generic_write(m_pVideoFifo, pFrameYUV->data[0], y_size, NULL);
            av_fifo_generic_write(m_pVideoFifo, pFrameYUV->data[1], y_size / 4, NULL);
            av_fifo_generic_write(m_pVideoFifo, pFrameYUV->data[2], y_size / 4, NULL);
            LeaveCriticalSection(&m_csVideoSection);

            m_iFrameNumber++;
            DWORD dwCurrentTime = ::GetTickCount();
            int dwPassedMillSeconds = dwCurrentTime - dwBeginTime;
            int dwDiff = m_iFrameNumber * 100 - dwPassedMillSeconds;
            if (dwDiff > 0)
            {
                Sleep(dwDiff);
            }
        }
    }
    av_packet_free(&packet);
    av_frame_free(&pFrameYUV);
    delete[] out_buffer_yuv420;
    delete ccs;

}

DWORD WINAPI ULinkRecord::ScreenAudioMixProc(LPVOID lpParam)
{
    ULinkRecord *pULinkRecord = (ULinkRecord *)lpParam;
    if (pULinkRecord != NULL)
    {
        pULinkRecord->ScreenAudioMix();
    }
    return 0;
}

void ULinkRecord::ScreenAudioMix()
{
    int ret = 0;
    int cur_pts_v = 0;
    int cur_pts_a = 0;
    AVFrame *pFrameYUVInMain = av_frame_alloc();
    uint8_t *out_buffer_yuv420 = (uint8_t *)av_malloc(m_iYuv420FrameSize);
    av_image_fill_arrays(pFrameYUVInMain->data, pFrameYUVInMain->linesize, out_buffer_yuv420, AV_PIX_FMT_YUV420P, m_iRecordWidth, m_iRecordHeight, 1);
    int AudioFrameIndex_mic = 1;
    AVPacket packet = { 0 };
    int iPicCount = 0;

    while (m_bRecord)
    {
        if (NULL == m_pVideoFifo)
        {
            continue;
        }
        /*if (av_compare_ts(cur_pts_v, m_pFormatCtx_Out->streams[m_iVideoStreamIndex]->time_base,
            cur_pts_a, m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->time_base) <= 0)*/
        {
            if (av_fifo_size(m_pVideoFifo) >= m_iYuv420FrameSize)
            {
                EnterCriticalSection(&m_csVideoSection);
                av_fifo_generic_read(m_pVideoFifo, out_buffer_yuv420, m_iYuv420FrameSize, NULL);
                LeaveCriticalSection(&m_csVideoSection);

                pFrameYUVInMain->pkt_dts = pFrameYUVInMain->pts = av_rescale_q_rnd(iPicCount, m_pCodecEncodeCtx_Video->time_base, m_pFormatCtx_Out->streams[m_iVideoStreamIndex]->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
                pFrameYUVInMain->pkt_duration = 0;
                pFrameYUVInMain->pkt_pos = -1;
                pFrameYUVInMain->width = m_iRecordWidth;
                pFrameYUVInMain->height = m_iRecordHeight;
                pFrameYUVInMain->format = AV_PIX_FMT_YUV420P;
                cur_pts_v = packet.pts;

                ret = avcodec_send_frame(m_pCodecEncodeCtx_Video, pFrameYUVInMain);
                ret = avcodec_receive_packet(m_pCodecEncodeCtx_Video, &packet);
                if (packet.size > 0)
                {
                    //av_packet_rescale_ts(packet, avCodecCtx_Out->time_base, avStream->time_base);
                    av_write_frame(m_pFormatCtx_Out, &packet);
                    iPicCount++;
                }
            }
            else
            {
                Sleep(1);
            }
        }
    }

    while(1)
    {
        if (av_audio_fifo_size(m_pAudioInnerResampleFifo) >=
            (m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size > 0 ? m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size : 1024))
        {
            AVFrame *frame_mix = NULL;
            frame_mix = av_frame_alloc();
            frame_mix->nb_samples = m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size > 0 ? m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size : 1024;
            frame_mix->channel_layout = m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->channel_layout;
            //frame_mix->format = pFormatCtx_Out->streams[iAudioStreamIndex]->codecpar->format;
            frame_mix->format = 1;
            frame_mix->sample_rate = m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->sample_rate;
            av_frame_get_buffer(frame_mix, 0);

            EnterCriticalSection(&m_csAudioMixSection);
            int readcount = av_audio_fifo_read(m_pAudioInnerResampleFifo, (void **)frame_mix->data,
                (m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size > 0 ? m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size : 1024));
            LeaveCriticalSection(&m_csAudioMixSection);

            AVPacket pkt_out_mic = { 0 };
            pkt_out_mic.data = NULL;
            pkt_out_mic.size = 0;
            frame_mix->pts = AudioFrameIndex_mic * m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size;

            AVFrame *frame_mic_encode = NULL;
            frame_mic_encode = av_frame_alloc();
            frame_mic_encode->nb_samples = m_pCodecEncodeCtx_Audio->frame_size;
            frame_mic_encode->channel_layout = m_pCodecEncodeCtx_Audio->channel_layout;
            frame_mic_encode->format = m_pCodecEncodeCtx_Audio->sample_fmt;
            frame_mic_encode->sample_rate = m_pCodecEncodeCtx_Audio->sample_rate;
            av_frame_get_buffer(frame_mic_encode, 0);

            int iDelaySamples = 0;
            int dst_nb_samples = av_rescale_rnd(iDelaySamples + frame_mix->nb_samples, frame_mix->sample_rate, frame_mix->sample_rate, AVRounding(1));
            //uint8_t *audio_buf = NULL;
            uint8_t *audio_buf[2] = { 0 };
            audio_buf[0] = (uint8_t *)frame_mic_encode->data[0];
            audio_buf[1] = (uint8_t *)frame_mic_encode->data[1];
            int nb = swr_convert(m_pAudioConvertCtx, audio_buf, dst_nb_samples, (const uint8_t**)frame_mix->data, frame_mix->nb_samples);

            ret = avcodec_send_frame(m_pCodecEncodeCtx_Audio, frame_mic_encode);
            ret = avcodec_receive_packet(m_pCodecEncodeCtx_Audio, &pkt_out_mic);
            if (ret == AVERROR(EAGAIN))
            {
                continue;
            }
            av_frame_free(&frame_mix);
            av_frame_free(&frame_mic_encode);

            {
                pkt_out_mic.stream_index = m_iAudioStreamIndex;
                pkt_out_mic.pts = AudioFrameIndex_mic * m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size;
                pkt_out_mic.dts = AudioFrameIndex_mic * m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size;
                pkt_out_mic.duration = m_pFormatCtx_Out->streams[m_iAudioStreamIndex]->codecpar->frame_size;
                cur_pts_a = pkt_out_mic.pts;
                av_write_frame(m_pFormatCtx_Out, &pkt_out_mic);
                //int ret2 = av_interleaved_write_frame(m_pFormatCtx_Out, &pkt_out_mic);
                av_packet_unref(&pkt_out_mic);
            }
            AudioFrameIndex_mic++;
        }
        else
        {
            break;
        }
    }

    Sleep(100);
    av_write_trailer(m_pFormatCtx_Out);
    avio_close(m_pFormatCtx_Out->pb);
    av_frame_free(&pFrameYUVInMain);

}
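One detail in ScreenCapture() is worth spelling out, because the video timestamps only match wall-clock time if frames really are captured at 10 fps. After each frame, the thread compares the time the frames so far should have taken (frame count times 100 ms) with the time that actually elapsed, and sleeps for the difference. The sketch below isolates that calculation; `pacingSleepMs` is a hypothetical helper, not part of the original class.

```cpp
#include <cassert>

// Milliseconds to sleep after `framesCaptured` frames so that capture keeps
// one frame per `frameIntervalMs`. Returns 0 when capture is on time or behind.
int pacingSleepMs(int framesCaptured, int elapsedMs, int frameIntervalMs) {
    assert(framesCaptured >= 0 && elapsedMs >= 0 && frameIntervalMs > 0);
    const int dueMs = framesCaptured * frameIntervalMs;  // when the next frame is due
    const int diffMs = dueMs - elapsedMs;
    return diffMs > 0 ? diffMs : 0;
}
```

For instance, if the first frame took 30 ms, the thread sleeps 70 ms; if five frames took 520 ms, it does not sleep at all and captures the next frame immediately. Because the target is computed from the total frame count rather than per frame, small delays do not accumulate into drift.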