This article covers part 2.2.7 of the Hadoop series (offline computing, MapReduce distributed computing): traffic statistics, summing the flow fields per phone number.

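Contents

1. Requirements analysis

2. Implementation

2.1 Sample data

2.2 Approach

2.3 Code structure

2.3.1 FlowBean

2.3.2 FlowCountMapper

2.3.3 FlowCountReducer

2.3.4 JobMain

3. Running the job and analyzing the results

3.1 Preparation

3.2 Running the code and results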

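1. Requirements analysis

Summation: for each phone number, compute the sum of upstream flow, the sum of downstream flow, the sum of total upstream flow, and the sum of total downstream flow.

Analysis: use the phone number as the key and the four fields (upstream flow, downstream flow, total upstream flow, total downstream flow) as the value. This key/value pair is the output of the map stage and the input of the reduce stage.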
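The key/value design above can be tried out in plain Java before any Hadoop code is involved. The following is a minimal sketch, not the article's implementation: the class name `FlowSumSketch`, the helper `sumByPhone`, and the sample records are all hypothetical, and the column positions (phone number at index 1, flow fields at indexes 6 to 9) are taken from the FlowCountMapper shown later in this article.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class FlowSumSketch {

    // Groups records by phone number and sums the four flow fields.
    // Assumes tab-separated records with the phone number at index 1
    // and the four flow fields at indexes 6-9.
    static Map<String, long[]> sumByPhone(List<String> lines) {
        Map<String, long[]> totals = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split("\t");
            // "map" step: the key is the phone number
            String phone = fields[1];
            long[] acc = totals.computeIfAbsent(phone, k -> new long[4]);
            // "reduce" step: add each flow field to the running total for this key
            for (int i = 0; i < 4; i++) {
                acc[i] += Long.parseLong(fields[6 + i]);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        // Hypothetical records; the filler columns are placeholders, not real data.
        List<String> lines = Arrays.asList(
            "t1\t13500000001\ta\tb\tc\td\t1\t2\t100\t200",
            "t2\t13500000002\ta\tb\tc\td\t3\t4\t300\t400",
            "t3\t13500000001\ta\tb\tc\td\t5\t6\t500\t600");
        sumByPhone(lines).forEach((phone, t) ->
            System.out.println(phone + "\t" + t[0] + "\t" + t[1] + "\t" + t[2] + "\t" + t[3]));
    }
}
```

Running `main` prints one line per phone number; the two records for 13500000001 collapse into a single line with the sums 6, 8, 600 and 800.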

2. Implementation

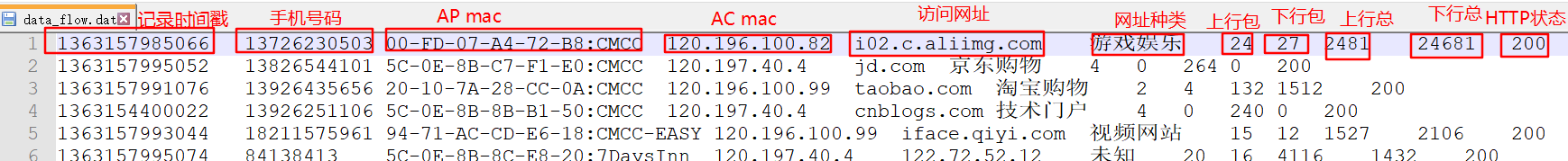

2.1 Sample data

2.2 Approach

2.3 Code structure

2.3.1 FlowBean

    package ucas.mapreduce_flowcount;

    import org.apache.hadoop.io.Writable;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;

    // Value type carrying the four flow fields for one record (or one aggregated phone number).
    public class FlowBean implements Writable {
        private Integer upFlow;
        private Integer downFlow;
        private Integer upCountFlow;
        private Integer downCountFlow;

        public Integer getUpFlow() {
            return upFlow;
        }

        public void setUpFlow(Integer upFlow) {
            this.upFlow = upFlow;
        }

        public Integer getDownFlow() {
            return downFlow;
        }

        public void setDownFlow(Integer downFlow) {
            this.downFlow = downFlow;
        }

        public Integer getUpCountFlow() {
            return upCountFlow;
        }

        public void setUpCountFlow(Integer upCountFlow) {
            this.upCountFlow = upCountFlow;
        }

        public Integer getDownCountFlow() {
            return downCountFlow;
        }

        public void setDownCountFlow(Integer downCountFlow) {
            this.downCountFlow = downCountFlow;
        }

        // Tab-separated form; this is what TextOutputFormat writes as the value.
        @Override
        public String toString() {
            return upFlow + "\t" + downFlow + "\t" + upCountFlow + "\t" + downCountFlow;
        }

        // Serialization: the field order here must match readFields().
        @Override
        public void write(DataOutput dataOutput) throws IOException {
            dataOutput.writeInt(upFlow);
            dataOutput.writeInt(downFlow);
            dataOutput.writeInt(upCountFlow);
            dataOutput.writeInt(downCountFlow);
        }

        // Deserialization: read the fields in the same order they were written.
        @Override
        public void readFields(DataInput dataInput) throws IOException {
            this.upFlow = dataInput.readInt();
            this.downFlow = dataInput.readInt();
            this.upCountFlow = dataInput.readInt();
            this.downCountFlow = dataInput.readInt();
        }
    }
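The `write`/`readFields` pair only works if both methods handle the fields in the same order. As a rough illustration, the sketch below reproduces that contract with plain `java.io` streams and no Hadoop dependency. The class `FlowRoundTrip`, its nested `Flow` stand-in, and the helper methods are hypothetical names introduced for this example; they are not part of the article's project.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

class FlowRoundTrip {

    // Stand-in for FlowBean without the Hadoop Writable interface (assumption:
    // only the write/readFields contract is being illustrated here).
    static class Flow {
        int upFlow, downFlow, upCountFlow, downCountFlow;

        void write(DataOutput out) throws IOException {
            out.writeInt(upFlow);
            out.writeInt(downFlow);
            out.writeInt(upCountFlow);
            out.writeInt(downCountFlow);
        }

        void readFields(DataInput in) throws IOException {
            // Read in exactly the order write() wrote.
            upFlow = in.readInt();
            downFlow = in.readInt();
            upCountFlow = in.readInt();
            downCountFlow = in.readInt();
        }
    }

    // Serializes a Flow into a byte array.
    static byte[] serialize(Flow flow) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            flow.write(out);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Rebuilds a Flow from bytes produced by serialize().
    static Flow deserialize(byte[] bytes) {
        Flow copy = new Flow();
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(bytes))) {
            copy.readFields(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return copy;
    }

    public static void main(String[] args) {
        Flow original = new Flow();
        original.upFlow = 1;
        original.downFlow = 2;
        original.upCountFlow = 100;
        original.downCountFlow = 200;

        byte[] bytes = serialize(original);
        Flow copy = deserialize(bytes);
        System.out.println(bytes.length + " bytes, downCountFlow=" + copy.downCountFlow);
    }
}
```

Four `writeInt` calls produce 16 bytes. If `readFields` read the fields in a different order, the copy would still deserialize without an exception but with the values swapped, which is why the ordering matters.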

2.3.2 FlowCountMapper

    package ucas.mapreduce_flowcount;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    public class FlowCountMapper extends Mapper<LongWritable, Text, Text, FlowBean> {
        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // 1: split the line and extract the phone number
            String[] split = value.toString().split("\t");
            String phoneNum = split[1];

            // 2: read the four flow fields
            FlowBean flowBean = new FlowBean();
            flowBean.setUpFlow(Integer.parseInt(split[6]));
            flowBean.setDownFlow(Integer.parseInt(split[7]));
            flowBean.setUpCountFlow(Integer.parseInt(split[8]));
            flowBean.setDownCountFlow(Integer.parseInt(split[9]));

            // 3: write K2 and V2 to the context
            context.write(new Text(phoneNum), flowBean);
        }
    }
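The mapper above indexes straight into `split[1]` and `split[6]` through `split[9]`, so a line with fewer than ten fields or a non-numeric flow value would throw and fail the task. One possible way to guard against that, sketched here in plain Java, is to validate the line before building the bean. The class `FlowLineParser`, the method `parseFlows`, and the constant `MIN_FIELDS` are hypothetical names for illustration; the article's mapper does not do this.

```java
import java.util.Optional;

class FlowLineParser {

    // The mapper reads indexes 1 and 6-9, so at least 10 fields are needed.
    static final int MIN_FIELDS = 10;

    // Returns the four flow fields, or Optional.empty() if the line is malformed.
    static Optional<int[]> parseFlows(String line) {
        String[] fields = line.split("\t");
        if (fields.length < MIN_FIELDS) {
            return Optional.empty();
        }
        try {
            int[] flows = new int[4];
            for (int i = 0; i < 4; i++) {
                flows[i] = Integer.parseInt(fields[6 + i].trim());
            }
            return Optional.of(flows);
        } catch (NumberFormatException e) {
            // A non-numeric flow field: treat the whole line as malformed.
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        // Hypothetical sample lines, not taken from the article's data set.
        String good = "t1\t13500000001\ta\tb\tc\td\t1\t2\t100\t200";
        String bad = "too\tshort";
        System.out.println(parseFlows(good).isPresent());
        System.out.println(parseFlows(bad).isPresent());
    }
}
```

Inside a real `map` method, an empty result could simply be skipped with an early `return`, so that one bad record does not abort the job. Whether to skip or fail is a design choice that depends on how clean the input is expected to be.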

2.3.3 FlowCountReducer

    package ucas.mapreduce_flowcount;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class FlowCountReducer extends Reducer<Text, FlowBean, Text, FlowBean> {
        @Override
        protected void reduce(Text key, Iterable<FlowBean> values, Context context) throws IOException, InterruptedException {
            // Accumulate the four fields over all values for this phone number
            FlowBean flowBean = new FlowBean();
            Integer upFlow = 0;
            Integer downFlow = 0;
            Integer upCountFlow = 0;
            Integer downCountFlow = 0;
            for (FlowBean value : values) {
                upFlow += value.getUpFlow();
                downFlow += value.getDownFlow();
                upCountFlow += value.getUpCountFlow();
                downCountFlow += value.getDownCountFlow();
            }

            // Wrap the totals in a new FlowBean
            flowBean.setUpFlow(upFlow);
            flowBean.setDownFlow(downFlow);
            flowBean.setUpCountFlow(upCountFlow);
            flowBean.setDownCountFlow(downCountFlow);

            // Write K3 and V3 to the context
            context.write(key, flowBean);
        }
    }
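The reduce logic is a field-wise sum, which is associative and commutative: summing partial sums gives the same totals as summing every record directly. That property is what would allow the same class to be registered as a combiner (for example via `job.setCombinerClass(FlowCountReducer.class)`), although the job in this article leaves the combiner step at its default. The plain-Java sketch below only illustrates the property; `CombinerSketch` and `sum` are hypothetical names and the values are made up.

```java
import java.util.Arrays;
import java.util.List;

class CombinerSketch {

    // Field-wise sum of four-element flow records, mirroring the reducer's loop.
    static long[] sum(List<long[]> values) {
        long[] total = new long[4];
        for (long[] v : values) {
            for (int i = 0; i < 4; i++) {
                total[i] += v[i];
            }
        }
        return total;
    }

    public static void main(String[] args) {
        // Hypothetical records for a single phone number.
        List<long[]> all = Arrays.asList(
            new long[] {1, 2, 100, 200},
            new long[] {3, 4, 300, 400},
            new long[] {5, 6, 500, 600});

        // Reduce everything in one pass.
        long[] direct = sum(all);

        // Pre-aggregate two partitions (as a combiner would), then reduce the partials.
        long[] partialA = sum(all.subList(0, 2));
        long[] partialB = sum(all.subList(2, 3));
        long[] combined = sum(Arrays.asList(partialA, partialB));

        System.out.println(Arrays.equals(direct, combined)); // prints "true"
    }
}
```

A combiner mainly reduces the amount of data shuffled between map and reduce tasks; with only 22 input records, as in this article's run, the effect would be negligible.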

2.3.4 JobMain

    package ucas.mapreduce_flowcount;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    public class JobMain extends Configured implements Tool {
        @Override
        public int run(String[] strings) throws Exception {
            // Create the job object
            Job job = Job.getInstance(super.getConf(), "mapreduce_flowcount");

            // Required when the job is packaged as a jar and run on the cluster
            job.setJarByClass(JobMain.class);

            // Step 1: set the input format class that reads the file (K1 and V1)
            job.setInputFormatClass(TextInputFormat.class);
            TextInputFormat.addInputPath(job, new Path("hdfs://192.168.0.101:8020/input/flowcount"));

            // Step 2: set the Mapper class
            job.setMapperClass(FlowCountMapper.class);
            // Set the map output types (K2 and V2)
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(FlowBean.class);

            // Steps 3-6 (partitioning, sorting, combining, grouping) use the defaults

            // Step 7: set the Reducer class
            job.setReducerClass(FlowCountReducer.class);
            // Set the reduce output types (K3 and V3)
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(FlowBean.class);

            // Number of reduce tasks: not set here, so the default is used

            // Step 8: set the output format class
            job.setOutputFormatClass(TextOutputFormat.class);
            // Set the output path
            TextOutputFormat.setOutputPath(job, new Path("hdfs://192.168.0.101:8020/out/flowcount_out"));

            boolean b = job.waitForCompletion(true);
            return b ? 0 : 1;
        }

        public static void main(String[] args) throws Exception {
            Configuration configuration = new Configuration();
            // Launch the job
            int run = ToolRunner.run(configuration, new JobMain(), args);
            System.exit(run);
        }
    }

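3. Running the job and analyzing the results

3.1 Preparation

On node01, create the input directory and upload the data file. Build the jar in IDEA and upload it to /export/software.

3.2 Running the code and results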

Run command:

hadoop jar day04_mapreduce_combiner-1.0-SNAPSHOT.jar ucas.mapreduce_flowcount.JobMain

Job counters from the run:

2020-10-11 00:00:04,735 INFO mapreduce.Job: map 0% reduce 0%

2020-10-11 00:00:11,866 INFO mapreduce.Job: map 100% reduce 0%

2020-10-11 00:00:18,936 INFO mapreduce.Job: map 100% reduce 100%

2020-10-11 00:00:24,066 INFO mapreduce.Job: Job job_1602327055253_0004 completed successfully

2020-10-11 00:00:24,238 INFO mapreduce.Job: Counters: 53
    File System Counters
        FILE: Number of bytes read=663
        FILE: Number of bytes written=432667
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=2588
        HDFS: Number of bytes written=556
        HDFS: Number of read operations=8
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=5093
        Total time spent by all reduces in occupied slots (ms)=4175
        Total time spent by all map tasks (ms)=5093
        Total time spent by all reduce tasks (ms)=4175
        Total vcore-milliseconds taken by all map tasks=5093
        Total vcore-milliseconds taken by all reduce tasks=4175
        Total megabyte-milliseconds taken by all map tasks=5215232
        Total megabyte-milliseconds taken by all reduce tasks=4275200
    Map-Reduce Framework
        Map input records=22
        Map output records=22
        Map output bytes=613
        Map output materialized bytes=663
        Input split bytes=120
        Combine input records=0
        Combine output records=0
        Reduce input groups=21
        Reduce shuffle bytes=663
        Reduce input records=22
        Reduce output records=21
        Spilled Records=44
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=170
        CPU time spent (ms)=2360
        Physical memory (bytes) snapshot=478408704
        Virtual memory (bytes) snapshot=4846075904
        Total committed heap usage (bytes)=303030272
        Peak Map Physical memory (bytes)=371359744
        Peak Map Virtual memory (bytes)=2409140224
        Peak Reduce Physical memory (bytes)=107048960
        Peak Reduce Virtual memory (bytes)=2436935680
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=2468
    File Output Format Counters
        Bytes Written=556

Results:
