本文主要是介绍karto算法论文阅读及代码讲解-扫描匹配(correlative scan matching,csm),希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

扫描匹配算法csm,correlative scan matching

- 1.csm理论基础-位姿得分

- 2.csm在karto算法中实现前端匹配

- 2.1 前端匹配整体框架

- 2.2 使用滑动窗口法来生成局部地图

- 1) AddScans()

- 2)FindValidPoints 函数

- 3) SmearPoint()

- 2.2 多分辨率概率查询表

- 1)多分辨率概率查找表在karto中的使用

- 2) CorrelateScan 扫描匹配的实际处理函数

- (1)ComputeOffsets 生成角度查找表

- (2)计算角度协方差和位置协方差

- i.void ScanMatcher::ComputePositionalCovariance() 位置协方差计算

- ii.void ScanMatcher::ComputeAngularCovariance() 角度协方差计算

这篇博客首先对csm中位姿得分进行介绍,接着基于karto算法对csm进行整体介绍,同时介绍其中csm的各个要点。

1.csm理论基础-位姿得分

帧间匹配可以理解成一个:已知x𝑖-1 和m,也测量到了u 和 z𝑖 ,如何尽可能准确的求出x𝑖 ?

应用高斯分布,p(x i|x i-1,u,m,z i)=p(z|x i,m) p(x i|x i-1,u) 。其中p(x i|x i-1,u)是我们熟悉的运动模型,p(z|x i,m) 是观测模型。前者容易求解;后者的计算是一个难题,容易陷入局部最优解。

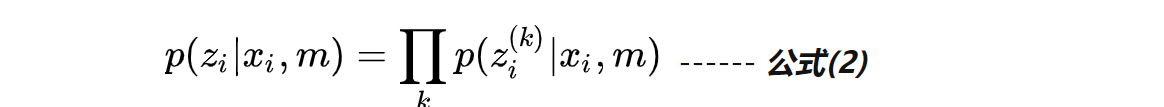

假设:第i次观测Z i中的各个激光点(以 z i k表示)位置的概率分布是彼此独立的,则:

对上述公式两侧取对数,让乘法变加法,我们有:

对上述公式两侧取对数,让乘法变加法,我们有:

公式(3)是我们的观测模型,我们通过下面的思路对其进行求解:

公式(3)是我们的观测模型,我们通过下面的思路对其进行求解:

- 根据 前面的观测 m(或若干帧)建立出来的概率栅格地图!

- 按照位姿xi把当前观测 zi投影到 m 中,把所有被击中的栅格的概率值相加,就是!在栅格地图的基础上,公式(3) 的求解。 所得的 即是位姿 的得分,代表着在这个位姿下,当前观测 与已知环境 相一致的程度(i.e., 相关性)。得分越高,表明这个位姿越靠谱。

通过上面的介绍,我们可以总结出CSM的主要思想:

CSM帧匹配算法基于概率栅格地图运行,每个栅格都维护一个对数形式的占据概率;对于新进来的激光scan,将scan中所有点通过一个预估位姿投影到栅格地图上,这样每个激光点都会落到一个栅格中,激光点所在栅格的对数概率值之和既为当前位姿的得分,代表着这个位姿的可信度。但是预估位姿是不准确的,我们在预估位姿附近建立一个搜索空间,通过分支定界策略加速搜索,求出得分最高的备选位姿,作为最优结果输出 。

2.csm在karto算法中实现前端匹配

这个章节对csm在karto中的实现进行介绍。首先给出karto中帧间匹配算法的主要步骤:

1)获取先验位姿,通过TF获取里程计的值,作为当前scan的预测位姿,将这个预测位姿当做扫描匹配的先验。

2)使用滑动窗口法来生成局部地图;Karto使用了一个队列保存最近24米范围内的所有雷达数据,通过将滑动窗口内的所有雷达数据写成分辨率为0.01m的栅格地图,这样就生成了局部地图。

3)通过暴力求解的方式,遍历一定范围内所有的平移与旋转的位姿,选出得分最大的一个位姿。(扫描匹配)加速方式:

- 生成角度查找表,实现暴力求解内部的两个循环(对于xˆ和yˆ)只是转换查询点。

- 多分辨率概率查询表,先粗匹配计算位置协方差,输出坐标均值,再次进行精匹配,计算角度协方差。

4)位姿的得分:位姿的得分是通过雷达点对应于栅格地图中的格子的占用值来确定的,所有雷达点对应格子的占用值的总和除以点的个数乘以100。

5)对距离先验位姿远的位姿进行惩罚

6)保存位姿与当前scan

然后对其实现代码进行讲解。

2.1 前端匹配整体框架

MatchScan函数主要完成当前激光pScan与rBaseScans进行扫描匹配,先在预估位姿投影到栅格地图上,计算当前位姿的得分,代表着这个位姿的可信度。由于预估位姿是不准确的,然后在预估位姿附近建立一个搜索空间,通过分支定界策略加速搜索,求出得分最高的备选位姿,作为最优结果输出 。

/**//pScan与rBaseScans进行扫描匹配,打到最优位置rMean和对应协方差。* Match given scan against set of scans* @param pScan scan being scan-matched* @param rBaseScans set of scans whose points will mark cells in grid as being occupied* @param rMean output parameter of mean (best pose) of match* @param rCovariance output parameter of covariance of match* @param doPenalize whether to penalize matches further from the search center* @param doRefineMatch whether to do finer-grained matching if coarse match is good (default is true)* @return strength of response*/

kt_double ScanMatcher::MatchScan(LocalizedRangeScan *pScan, //当前帧的scan数据const LocalizedRangeScanVector &rBaseScans, //已经接收的scan的数组 mapPose2 &rMean,Matrix3 &rCovariance,kt_bool doPenalize,kt_bool doRefineMatch)

{///// set scan pose to be center of grid// 1. get scan position ,获取当前激光雷达的位置Pose2 scanPose = pScan->GetSensorPose();// scan has no readings; cannot do scan matching// best guess of pose is based off of adjusted odometer readingif (pScan->GetNumberOfRangeReadings() == 0){rMean = scanPose;// maximum covariancerCovariance(0, 0) = MAX_VARIANCE; // XXrCovariance(1, 1) = MAX_VARIANCE; // YYrCovariance(2, 2) = 4 * math::Square(m_pMapper->m_pCoarseAngleResolution->GetValue()); // TH*THreturn 0.0;}// 2. get size of grid 网格:24m*24m,0.01,2431*2431个格子Rectangle2<kt_int32s> roi = m_pCorrelationGrid->GetROI();// 3. compute offset (in meters - lower left corner)Vector2<kt_double> offset;offset.SetX(scanPose.GetX() - (0.5 * (roi.GetWidth() - 1) * m_pCorrelationGrid->GetResolution()));offset.SetY(scanPose.GetY() - (0.5 * (roi.GetHeight() - 1) * m_pCorrelationGrid->GetResolution()));// 4. set offsetm_pCorrelationGrid->GetCoordinateConverter()->SetOffset(offset);///// set up correlation grid,使用已经接收到的激光数据rBaseScans,生成局部地图AddScans(rBaseScans, scanPose.GetPosition());// compute how far to search in each direction// m_pSearchSpaceProbs 是一个 31 * 31 大小的栅格地图,0. 01,XY=0.15Vector2<kt_double> searchDimensions(m_pSearchSpaceProbs->GetWidth(), m_pSearchSpaceProbs->GetHeight());Vector2<kt_double> coarseSearchOffset(0.5 * (searchDimensions.GetX() - 1) * m_pCorrelationGrid->GetResolution(),0.5 * (searchDimensions.GetY() - 1) * m_pCorrelationGrid->GetResolution());// a coarse search only checks half the cells in each dimensionVector2<kt_double> coarseSearchResolution(2 * m_pCorrelationGrid->GetResolution(), //0.022 * m_pCorrelationGrid->GetResolution()); //0.02// actual scan-matching scan to map的过程kt_double bestResponse = CorrelateScan(pScan, scanPose, coarseSearchOffset, coarseSearchResolution, //0.02m_pMapper->m_pCoarseSearchAngleOffset->GetValue(), //0.349 m_pMapper->m_pCoarseAngleResolution->GetValue(), //0.0349 doPenalize, rMean, rCovariance, false);if (m_pMapper->m_pUseResponseExpansion->GetValue() == true){if (math::DoubleEqual(bestResponse, 0.0)){

#ifdef KARTO_DEBUGstd::cout << "Mapper Info: Expanding response search space!" << std::endl;

#endif// try and increase search angle offset with 20 degrees and do another matchkt_double newSearchAngleOffset = m_pMapper->m_pCoarseSearchAngleOffset->GetValue();for (kt_int32u i = 0; i < 3; i++){newSearchAngleOffset += math::DegreesToRadians(20);bestResponse = CorrelateScan(pScan, scanPose, coarseSearchOffset, coarseSearchResolution,newSearchAngleOffset, m_pMapper->m_pCoarseAngleResolution->GetValue(),doPenalize, rMean, rCovariance, false);if (math::DoubleEqual(bestResponse, 0.0) == false){break;}}#ifdef KARTO_DEBUGif (math::DoubleEqual(bestResponse, 0.0)){std::cout << "Mapper Warning: Unable to calculate response!" << std::endl;}

#endif}}if (doRefineMatch){Vector2<kt_double> fineSearchOffset(coarseSearchResolution * 0.5);Vector2<kt_double> fineSearchResolution(m_pCorrelationGrid->GetResolution(), m_pCorrelationGrid->GetResolution());bestResponse = CorrelateScan(pScan, rMean, fineSearchOffset, fineSearchResolution,0.5 * m_pMapper->m_pCoarseAngleResolution->GetValue(),m_pMapper->m_pFineSearchAngleOffset->GetValue(),doPenalize, rMean, rCovariance, true);}#ifdef KARTO_DEBUGstd::cout << " BEST POSE = " << rMean << " BEST RESPONSE = " << bestResponse << ", VARIANCE = "<< rCovariance(0, 0) << ", " << rCovariance(1, 1) << std::endl;

#endifassert(math::InRange(rMean.GetHeading(), -KT_PI, KT_PI));return bestResponse;

}

2.2 使用滑动窗口法来生成局部地图

Karto使用了一个队列保存最近24米范围内的所有雷达数据,通过将滑动窗口内的所有雷达数据写成分辨率为0.01的栅格地图,这样就生成了局部地图。

1) AddScans()

首先将 m_pCorrelationGrid 中栅格全置为 0 ;

然后遍历 running scan , 将每一帧雷达数据进行 AddScan();

确定是否是有效的点,首先判断距离大于0.01,再判断是否是同方向的,应该是按照右手定则判断的,直接按公式求cos得正负;

然后将这些点对应的栅格地图中的点 不是占用状态的 设置成 占用状态,同时,更新当前坐标点周围的占用值, SmearPoint(gridPoint)

/**//遍历runningScan中的第一帧激光雷达,将其中的激光点投影到栅格地图中* Marks cells where scans' points hit as being occupied* @param rScans scans whose points will mark cells in grid as being occupied* @param viewPoint do not add points that belong to scans "opposite" the view point*/

void ScanMatcher::AddScans(const LocalizedRangeScanVector &rScans, Vector2<kt_double> viewPoint)

{m_pCorrelationGrid->Clear();// add all scans to gridconst_forEach(LocalizedRangeScanVector, &rScans){AddScan(*iter, viewPoint);}

}/*** Marks cells where scans' points hit as being occupied. Can smear points as they are added.* @param pScan scan whose points will mark cells in grid as being occupied* @param viewPoint do not add points that belong to scans "opposite" the view point* @param doSmear whether the points will be smeared*/

void ScanMatcher::AddScan(LocalizedRangeScan *pScan, const Vector2<kt_double> &rViewPoint, kt_bool doSmear)

{PointVectorDouble validPoints = FindValidPoints(pScan, rViewPoint);// put in all valid pointsconst_forEach(PointVectorDouble, &validPoints){Vector2<kt_int32s> gridPoint = m_pCorrelationGrid->WorldToGrid(*iter);if (!math::IsUpTo(gridPoint.GetX(), m_pCorrelationGrid->GetROI().GetWidth()) ||!math::IsUpTo(gridPoint.GetY(), m_pCorrelationGrid->GetROI().GetHeight())){// point not in gridcontinue;}int gridIndex = m_pCorrelationGrid->GridIndex(gridPoint);// set grid cell as occupiedif (m_pCorrelationGrid->GetDataPointer()[gridIndex] == GridStates_Occupied){// value already setcontinue;}m_pCorrelationGrid->GetDataPointer()[gridIndex] = GridStates_Occupied;// smear gridif (doSmear == true){m_pCorrelationGrid->SmearPoint(gridPoint);}}

}

2)FindValidPoints 函数

/*** Compute which points in a scan are on the same side as the given viewpoint* @param pScan* @param rViewPoint* @return points on the same side*/

PointVectorDouble ScanMatcher::FindValidPoints(LocalizedRangeScan *pScan, const Vector2<kt_double> &rViewPoint) const

{const PointVectorDouble &rPointReadings = pScan->GetPointReadings();// points must be at least 10 cm away when making comparisons of inside/outside of viewpointconst kt_double minSquareDistance = math::Square(0.1); // in m^2// this iterator lags from the main iterator adding points only when the points are on// the same side as the viewpointPointVectorDouble::const_iterator trailingPointIter = rPointReadings.begin();PointVectorDouble validPoints;Vector2<kt_double> firstPoint;kt_bool firstTime = true;const_forEach(PointVectorDouble, &rPointReadings){Vector2<kt_double> currentPoint = *iter;if (firstTime && !std::isnan(currentPoint.GetX()) && !std::isnan(currentPoint.GetY())){firstPoint = currentPoint;firstTime = false;}Vector2<kt_double> delta = firstPoint - currentPoint;if (delta.SquaredLength() > minSquareDistance){ // This compute the Determinant (viewPoint FirstPoint, viewPoint currentPoint)// Which computes the direction of rotation, if the rotation is counterclock// wise then we are looking at data we should keep. If it's negative rotation// we should not included in in the matching// have enough distance, check viewpointdouble a = rViewPoint.GetY() - firstPoint.GetY();double b = firstPoint.GetX() - rViewPoint.GetX();double c = firstPoint.GetY() * rViewPoint.GetX() - firstPoint.GetX() * rViewPoint.GetY();double ss = currentPoint.GetX() * a + currentPoint.GetY() * b + c;// reset beginning pointfirstPoint = currentPoint;if (ss < 0.0) // wrong side, skip and keep going{trailingPointIter = iter;}else{for (; trailingPointIter != iter; ++trailingPointIter){validPoints.push_back(*trailingPointIter);}}}}return validPoints;

}

3) SmearPoint()

大致上感觉是:通过一个 6 * 6 的核函数,将当前坐标点附近 6*6 的点 做卷积,如果附近点的占用值小于核函数中的占用值,则将 核函数中的占用值 赋值给 附近点的占用值。

/*** Smear cell if the cell at the given point is marked as "occupied"* @param rGridPoint*/inline void SmearPoint(const Vector2<kt_int32s> &rGridPoint){assert(m_pKernel != NULL);int gridIndex = GridIndex(rGridPoint);if (GetDataPointer()[gridIndex] != GridStates_Occupied){return;}kt_int32s halfKernel = m_KernelSize / 2;// apply kernelfor (kt_int32s j = -halfKernel; j <= halfKernel; j++){kt_int8u *pGridAdr = GetDataPointer(Vector2<kt_int32s>(rGridPoint.GetX(), rGridPoint.GetY() + j));kt_int32s kernelConstant = (halfKernel) + m_KernelSize * (j + halfKernel);// if a point is on the edge of the grid, there is no problem// with running over the edge of allowable memory, because// the grid has margins to compensate for the kernel sizefor (kt_int32s i = -halfKernel; i <= halfKernel; i++){kt_int32s kernelArrayIndex = i + kernelConstant;kt_int8u kernelValue = m_pKernel[kernelArrayIndex];if (kernelValue > pGridAdr[i]){// kernel value is greater, so set it to kernel valuepGridAdr[i] = kernelValue;}}}}

2.2 多分辨率概率查询表

低分辨率与高分辨率栅格的定义,如图3所示:

- 高分辨率m,按照某个分辨率(如:0.01)把世界栅格化;

- 低分辨率栅格m’,将分辨率高低(比如一倍,0.005),构建新的栅格地图。

可以看到, m’(红色)中的1个栅格覆盖了 m(黑色)中的4个栅格, 我们把4个黑色栅格概率值的最大值赋给对应的红色栅格!。

暴力搜索时,我们需要遍历三个变量:角度,横向平移,纵向平移。现在我们把角度放在最外层,内层就只有两个平移,这两个平移正是我们加速的对象。

搜索空间定义:我们记 xi 在 m中的搜索空间为 W,在m’中同等物理尺度的搜索空间为 W’ 。 W 与 W’的物理空间大小是一样的,但元素的数量却不一样!举个栗子,我们设定搜索空间的物理大小为预估位姿附近〔旋转 ±10 度、横向 ±1米、纵向 ±1 米〕的空间,这里只考虑平移。假定m的分辨率是10cm,则 W中元素的个数是 100个; W’ 的分辨率是20cm,则 W’ 中元素的个数是25个。

加速搜索实现,设置低分辨率栅格的得分是对应所有高分辨率栅格得分的上界;

加速搜索做法:

加速搜索做法:

加速的思想很简单:低分辨率栅格的得分是对应所有高分辨率栅格得分的上界,如果某个低分辨率栅格的得分低于 Hbest,则其对应的所有高分辨率栅格的得分必然也不会高于 Hbest,我们索性不再考虑这部分高分辨率栅格,这个过程,也叫做「剪枝」!我们正是通过剪掉不必考虑的分支,来实现加速的。

加速的思想很简单:低分辨率栅格的得分是对应所有高分辨率栅格得分的上界,如果某个低分辨率栅格的得分低于 Hbest,则其对应的所有高分辨率栅格的得分必然也不会高于 Hbest,我们索性不再考虑这部分高分辨率栅格,这个过程,也叫做「剪枝」!我们正是通过剪掉不必考虑的分支,来实现加速的。

CSM帧匹配算法「分支定界」加速的完整示意图如下所示~

最外层遍历每一个角度,对各个角度下的平移空间进行「分支定界」加速搜索,获得最高得分;再对比各个角度的最高得分,获得整个搜索空间内的全局最高得分,该得分对应的那个位姿就是我们对 [公式] 的最准确估计(i.e., 全局最优解)

1)多分辨率概率查找表在karto中的使用

先粗匹配计算位置协方差,输出坐标均值,再次进行精匹配,计算角度协方差。

/*** Match given scan against set of scans* @param pScan scan being scan-matched* @param rBaseScans set of scans whose points will mark cells in grid as being occupied* @param rMean output parameter of mean (best pose) of match* @param rCovariance output parameter of covariance of match* @param doPenalize whether to penalize matches further from the search center* @param doRefineMatch whether to do finer-grained matching if coarse match is good (default is true)* @return strength of response*/

kt_double ScanMatcher::MatchScan(LocalizedRangeScan *pScan, //当前帧的scan数据const LocalizedRangeScanVector &rBaseScans, //已经接收的scan的数组 mapPose2 &rMean,Matrix3 &rCovariance,kt_bool doPenalize,kt_bool doRefineMatch)

{// ...省略//低分辨率层// compute how far to search in each direction// m_pSearchSpaceProbs 是一个 31 * 31 大小的栅格地图,0. 01,XY=0.15Vector2<kt_double> searchDimensions(m_pSearchSpaceProbs->GetWidth(), m_pSearchSpaceProbs->GetHeight());Vector2<kt_double> coarseSearchOffset(0.5 * (searchDimensions.GetX() - 1) * m_pCorrelationGrid->GetResolution(),0.5 * (searchDimensions.GetY() - 1) * m_pCorrelationGrid->GetResolution());// a coarse search only checks half the cells in each dimensionVector2<kt_double> coarseSearchResolution(2 * m_pCorrelationGrid->GetResolution(), //0.022 * m_pCorrelationGrid->GetResolution()); //0.02// actual scan-matching scan to map的过程kt_double bestResponse = CorrelateScan(pScan, scanPose, coarseSearchOffset, coarseSearchResolution, //0.02m_pMapper->m_pCoarseSearchAngleOffset->GetValue(), //0.349 m_pMapper->m_pCoarseAngleResolution->GetValue(), //0.0349 doPenalize, rMean, rCovariance, false);// ...省略//高分辨率层if (doRefineMatch){Vector2<kt_double> fineSearchOffset(coarseSearchResolution * 0.5);Vector2<kt_double> fineSearchResolution(m_pCorrelationGrid->GetResolution(), m_pCorrelationGrid->GetResolution());bestResponse = CorrelateScan(pScan, rMean, fineSearchOffset, fineSearchResolution,0.5 * m_pMapper->m_pCoarseAngleResolution->GetValue(),m_pMapper->m_pFineSearchAngleOffset->GetValue(),doPenalize, rMean, rCovariance, true);}#ifdef KARTO_DEBUGstd::cout << " BEST POSE = " << rMean << " BEST RESPONSE = " << bestResponse << ", VARIANCE = "<< rCovariance(0, 0) << ", " << rCovariance(1, 1) << std::endl;

#endifassert(math::InRange(rMean.GetHeading(), -KT_PI, KT_PI));return bestResponse;

}

2) CorrelateScan 扫描匹配的实际处理函数

扫描匹配的实际处理函数,其步骤包括:

- 制作角度查找表 ;

- 根据搜索空间和搜索分辨率求得搜索空间的个数,分别为x y angle 的个数;

- 遍历搜索空间,求得所有搜索项的响应值

- 在 所有搜索项的响应值 中寻找最大的响应值

- 最大的响应值(同为最大)对应的位姿可能有很多种,求得 x y angle 的平均值

- 保存最优位姿及其响应值 ;

/*** Finds the best pose for the scan centering the search in the correlation grid* at the given pose and search in the space by the vector and angular offsets* in increments of the given resolutions* @param rScan scan to match against correlation grid* @param rSearchCenter the center of the search space* @param rSearchSpaceOffset searches poses in the area offset by this vector around search center* @param rSearchSpaceResolution how fine a granularity to search in the search space* @param searchAngleOffset searches poses in the angles offset by this angle around search center* @param searchAngleResolution how fine a granularity to search in the angular search space* @param doPenalize whether to penalize matches further from the search center* @param rMean output parameter of mean (best pose) of match* @param rCovariance output parameter of covariance of match* @param doingFineMatch whether to do a finer search after coarse search* @return strength of response*/

kt_double ScanMatcher::CorrelateScan(LocalizedRangeScan *pScan,const Pose2 &rSearchCenter, //机器人位置const Vector2<kt_double> &rSearchSpaceOffset, //0.15mconst Vector2<kt_double> &rSearchSpaceResolution, //0.01mkt_double searchAngleOffset, //0.349kt_double searchAngleResolution, //0.0349kt_bool doPenalize,Pose2 &rMean, Matrix3 &rCovariance,kt_bool doingFineMatch) //false

{assert(searchAngleResolution != 0.0);// 1. 制作角度查找表 // setup lookup arrays coarse_search_angle_offset: 0.349 coarse_angle_resolution: 0.0349m_pGridLookup->ComputeOffsets(pScan, rSearchCenter.GetHeading(), searchAngleOffset, searchAngleResolution);// only initialize probability grid if computing positional covariance (during coarse match)if (!doingFineMatch){m_pSearchSpaceProbs->Clear();// position search grid - finds lower left corner of search gridVector2<kt_double> offset(rSearchCenter.GetPosition() - rSearchSpaceOffset);m_pSearchSpaceProbs->GetCoordinateConverter()->SetOffset(offset);}// 2. 求得搜索空间的个数,分别为x y angle 的个数//以下计算出需要的各种x,y,angle用于三重循环 ,在这里x有16个值,y有16个值,angle有21个值std::vector<kt_double> xPoses;kt_int32u nX = static_cast<kt_int32u>(math::Round(rSearchSpaceOffset.GetX() * //0.152.0 / rSearchSpaceResolution.GetX()) + //0.021);kt_double startX = -rSearchSpaceOffset.GetX(); //0.15for (kt_int32u xIndex = 0; xIndex < nX; xIndex++){xPoses.push_back(startX + xIndex * rSearchSpaceResolution.GetX());}assert(math::DoubleEqual(xPoses.back(), -startX));std::vector<kt_double> yPoses;kt_int32u nY = static_cast<kt_int32u>(math::Round(rSearchSpaceOffset.GetY() *2.0 / rSearchSpaceResolution.GetY()) +1);kt_double startY = -rSearchSpaceOffset.GetY();for (kt_int32u yIndex = 0; yIndex < nY; yIndex++){yPoses.push_back(startY + yIndex * rSearchSpaceResolution.GetY());}assert(math::DoubleEqual(yPoses.back(), -startY));// calculate pose response array size 0.349 * 2.0 / 0.0349 + 1kt_int32u nAngles = static_cast<kt_int32u>(math::Round(searchAngleOffset * 2.0 / searchAngleResolution) + 1);// 16 16 21kt_int32u poseResponseSize = static_cast<kt_int32u>(xPoses.size() * yPoses.size() * nAngles);// allocate arraystd::pair<kt_double, Pose2> *pPoseResponse = new std::pair<kt_double, Pose2>[poseResponseSize];Vector2<kt_int32s> startGridPoint = m_pCorrelationGrid->WorldToGrid(Vector2<kt_double>(rSearchCenter.GetX() + startX, rSearchCenter.GetY() + startY));// 3. 遍历搜索空间,求得所有搜索项的响应值 16 * 16 * 21kt_int32u poseResponseCounter = 0;forEachAs(std::vector<kt_double>, &yPoses, yIter){kt_double y = *yIter;kt_double newPositionY = rSearchCenter.GetY() + y;kt_double squareY = math::Square(y);forEachAs(std::vector<kt_double>, &xPoses, xIter){kt_double x = *xIter;kt_double newPositionX = rSearchCenter.GetX() + x;kt_double squareX = math::Square(x);Vector2<kt_int32s> gridPoint = m_pCorrelationGrid->WorldToGrid(Vector2<kt_double>(newPositionX, newPositionY));kt_int32s gridIndex = m_pCorrelationGrid->GridIndex(gridPoint);assert(gridIndex >= 0);kt_double angle = 0.0;kt_double startAngle = rSearchCenter.GetHeading() - searchAngleOffset;for (kt_int32u angleIndex = 0; angleIndex < nAngles; angleIndex++){angle = startAngle + angleIndex * searchAngleResolution;// 由 angleIndex 和 gridIndex得出response // 将旋转平移之后的这一帧数据和上一帧的数据进行匹配得分kt_double response = GetResponse(angleIndex, gridIndex);if (doPenalize && (math::DoubleEqual(response, 0.0) == false)){// simple model (approximate Gaussian) to take odometry into accountkt_double squaredDistance = squareX + squareY;kt_double distancePenalty = 1.0 - (DISTANCE_PENALTY_GAIN *squaredDistance / m_pMapper->m_pDistanceVariancePenalty->GetValue());distancePenalty = math::Maximum(distancePenalty, m_pMapper->m_pMinimumDistancePenalty->GetValue());kt_double squaredAngleDistance = math::Square(angle - rSearchCenter.GetHeading());kt_double anglePenalty = 1.0 - (ANGLE_PENALTY_GAIN *squaredAngleDistance / m_pMapper->m_pAngleVariancePenalty->GetValue());anglePenalty = math::Maximum(anglePenalty, m_pMapper->m_pMinimumAnglePenalty->GetValue());response *= (distancePenalty * anglePenalty);}// store response and posepPoseResponse[poseResponseCounter] = std::pair<kt_double, Pose2>(response, Pose2(newPositionX, newPositionY,math::NormalizeAngle(angle)));poseResponseCounter++;}assert(math::DoubleEqual(angle, rSearchCenter.GetHeading() + searchAngleOffset));}}assert(poseResponseSize == poseResponseCounter);// 4. 在 所有搜索项的响应值 中寻找最大的响应值// find value of best response (in [0; 1])kt_double bestResponse = -1;for (kt_int32u i = 0; i < poseResponseSize; i++){bestResponse = math::Maximum(bestResponse, pPoseResponse[i].first);// will compute positional covariance, save best relative probability for each cellif (!doingFineMatch){const Pose2 &rPose = pPoseResponse[i].second;Vector2<kt_int32s> grid = m_pSearchSpaceProbs->WorldToGrid(rPose.GetPosition());// Changed (kt_double*) to the reinterpret_cast - Luckt_double *ptr = reinterpret_cast<kt_double *>(m_pSearchSpaceProbs->GetDataPointer(grid));if (ptr == NULL){throw std::runtime_error("Mapper FATAL ERROR - Index out of range in probability search!");}*ptr = math::Maximum(pPoseResponse[i].first, *ptr);}}// 5. 最大的响应值(同为最大)对应的位姿可能有很多种,求得 x y angle 的平均值 // average all poses with same highest responseVector2<kt_double> averagePosition;kt_double thetaX = 0.0;kt_double thetaY = 0.0;kt_int32s averagePoseCount = 0;for (kt_int32u i = 0; i < poseResponseSize; i++) //16 * 16 * 21{if (math::DoubleEqual(pPoseResponse[i].first, bestResponse)){averagePosition += pPoseResponse[i].second.GetPosition();kt_double heading = pPoseResponse[i].second.GetHeading();thetaX += cos(heading);thetaY += sin(heading);averagePoseCount++;}}Pose2 averagePose;if (averagePoseCount > 0){averagePosition /= averagePoseCount;thetaX /= averagePoseCount;thetaY /= averagePoseCount;averagePose = Pose2(averagePosition, atan2(thetaY, thetaX));}else{throw std::runtime_error("Mapper FATAL ERROR - Unable to find best position");}// delete pose response arraydelete[] pPoseResponse;#ifdef KARTO_DEBUGstd::cout << "bestPose: " << averagePose << std::endl;std::cout << "bestResponse: " << bestResponse << std::endl;

#endifif (!doingFineMatch){ComputePositionalCovariance(averagePose, bestResponse, rSearchCenter, rSearchSpaceOffset,rSearchSpaceResolution, searchAngleResolution, rCovariance);}else{ComputeAngularCovariance(averagePose, bestResponse, rSearchCenter,searchAngleOffset, searchAngleResolution, rCovariance);}

// 6.保存最优位姿及其响应值 ; rMean = averagePose;#ifdef KARTO_DEBUGstd::cout << "bestPose: " << averagePose << std::endl;

#endifif (bestResponse > 1.0){bestResponse = 1.0;}assert(math::InRange(bestResponse, 0.0, 1.0));assert(math::InRange(rMean.GetHeading(), -KT_PI, KT_PI));return bestResponse;

}

(1)ComputeOffsets 生成角度查找表

将当前scan按照不同角度进行旋转,每个旋转角度对应一个角度查找表。一个角度查找表中存储的是当前scan的每一个数据点 经过旋转后对应着栅格地图中格子的索引值,一个角度查找表存储了当前scan经过旋转后的索引值,这里并不是真正的将scan进行旋转了,只是记录下旋转后的对应的格子的索引,用于求取响应值。

/** coarse_search_angle_offset: 0.349 coarse_angle_resolution: 0.0349* Compute lookup table of the points of the given scan for the given angular space* @param pScan the scan* @param angleCenter* @param angleOffset computes lookup arrays for the angles within this offset around angleStart* @param angleResolution how fine a granularity to compute lookup arrays in the angular space*/void ComputeOffsets(LocalizedRangeScan *pScan,kt_double angleCenter,kt_double angleOffset, //0.349 kt_double angleResolution) //0.0349 {assert(angleOffset != 0.0);assert(angleResolution != 0.0);//nAngles 为21kt_int32u nAngles = static_cast<kt_int32u>(math::Round(angleOffset * 2.0 / angleResolution) + 1);SetSize(nAngles);//// convert points into local coordinates of scan poseconst PointVectorDouble &rPointReadings = pScan->GetPointReadings();// compute transform to scan poseTransform transform(pScan->GetSensorPose());Pose2Vector localPoints; localPoints的所有数据的角度是 0.0const_forEach(PointVectorDouble, &rPointReadings){// do inverse transform to get points in local coordinates 进行逆变换以获取局部坐标中的点Pose2 vec = transform.InverseTransformPose(Pose2(*iter, 0.0));localPoints.push_back(vec);}//// create lookup array for different angleskt_double angle = 0.0;kt_double startAngle = angleCenter - angleOffset; for (kt_int32u angleIndex = 0; angleIndex < nAngles; angleIndex++){angle = startAngle + angleIndex * angleResolution; ComputeOffsets(angleIndex, angle, localPoints, pScan);}// assert(math::DoubleEqual(angle, angleCenter + angleOffset));}/*** Compute lookup value of points for given angle* @param angleIndex* @param angle* @param rLocalPoints*/void ComputeOffsets(kt_int32u angleIndex, kt_double angle, const Pose2Vector &rLocalPoints, LocalizedRangeScan *pScan){//m_ppLookupArray 是个2维指针,存储不同角度下的雷达数据m_ppLookupArray[angleIndex]->SetSize(static_cast<kt_int32u>(rLocalPoints.size()));m_Angles.at(angleIndex) = angle; // m_Angles 一维数组,只保存当前scan的角度// set up point array by computing relative offsets to points readings// when rotated by given angleconst Vector2<kt_double> &rGridOffset = m_pGrid->GetCoordinateConverter()->GetOffset();kt_double cosine = cos(angle);kt_double sine = sin(angle);kt_int32u readingIndex = 0;// 第i个角度的所有扫描数据的指针,每一个扫描数据又是一个uint数组,// 这个uint数组的大小是LookupArray的m_Capacity, m_Capacity 的大小是rLocalPoints.size()kt_int32s *pAngleIndexPointer = m_ppLookupArray[angleIndex]->GetArrayPointer();kt_double maxRange = pScan->GetLaserRangeFinder()->GetMaximumRange();const_forEach(Pose2Vector, &rLocalPoints){const Vector2<kt_double> &rPosition = iter->GetPosition();if (std::isnan(pScan->GetRangeReadings()[readingIndex]) || std::isinf(pScan->GetRangeReadings()[readingIndex])){pAngleIndexPointer[readingIndex] = INVALID_SCAN;readingIndex++;continue;}// counterclockwise rotation and that rotation is about the origin (0, 0).// 将rPosition相对于当前帧的sensor这个点(比如在tutorial1中第一次来这里时是1,1)逆时针旋转angle度,得到offsetVector2<kt_double> offset;offset.SetX(cosine * rPosition.GetX() - sine * rPosition.GetY());offset.SetY(sine * rPosition.GetX() + cosine * rPosition.GetY());// have to compensate for the grid offset when getting the grid index// rPosition 旋转后的坐标,加上 地图中心 与原点间的偏差?地图就是 pCorrelationGridVector2<kt_int32s> gridPoint = m_pGrid->WorldToGrid(offset + rGridOffset);// use base GridIndex to ignore ROI// lookupIndex 就是 雷达点经过旋转之后在地图中的索引值kt_int32s lookupIndex = m_pGrid->Grid<T>::GridIndex(gridPoint, false);// 所以一个角度值 angleIndex 对应的 查找表里 存储的是 一帧雷达数据 经过旋转后在地图中的索引值,// 也就是 只算出了scan旋转后的坐标值,并没有进行实际的雷达数据旋转pAngleIndexPointer[readingIndex] = lookupIndex;readingIndex++;}assert(readingIndex == rLocalPoints.size());}

(2)计算角度协方差和位置协方差

i.void ScanMatcher::ComputePositionalCovariance() 位置协方差计算

/*** Computes the positional covariance of the best pose* @param rBestPose* @param bestResponse* @param rSearchCenter* @param rSearchSpaceOffset* @param rSearchSpaceResolution* @param searchAngleResolution* @param rCovariance*/

void ScanMatcher::ComputePositionalCovariance(const Pose2 &rBestPose, kt_double bestResponse,const Pose2 &rSearchCenter,const Vector2<kt_double> &rSearchSpaceOffset,const Vector2<kt_double> &rSearchSpaceResolution,kt_double searchAngleResolution, Matrix3 &rCovariance)

{// reset covariance to identity matrixrCovariance.SetToIdentity();// if best response is vary small return max varianceif (bestResponse < KT_TOLERANCE){rCovariance(0, 0) = MAX_VARIANCE; // XXrCovariance(1, 1) = MAX_VARIANCE; // YYrCovariance(2, 2) = 4 * math::Square(searchAngleResolution); // TH*THreturn;}kt_double accumulatedVarianceXX = 0;kt_double accumulatedVarianceXY = 0;kt_double accumulatedVarianceYY = 0;kt_double norm = 0;kt_double dx = rBestPose.GetX() - rSearchCenter.GetX();kt_double dy = rBestPose.GetY() - rSearchCenter.GetY();kt_double offsetX = rSearchSpaceOffset.GetX();kt_double offsetY = rSearchSpaceOffset.GetY();kt_int32u nX = static_cast<kt_int32u>(math::Round(offsetX * 2.0 / rSearchSpaceResolution.GetX()) + 1);kt_double startX = -offsetX;assert(math::DoubleEqual(startX + (nX - 1) * rSearchSpaceResolution.GetX(), -startX));kt_int32u nY = static_cast<kt_int32u>(math::Round(offsetY * 2.0 / rSearchSpaceResolution.GetY()) + 1);kt_double startY = -offsetY;assert(math::DoubleEqual(startY + (nY - 1) * rSearchSpaceResolution.GetY(), -startY));for (kt_int32u yIndex = 0; yIndex < nY; yIndex++){kt_double y = startY + yIndex * rSearchSpaceResolution.GetY();for (kt_int32u xIndex = 0; xIndex < nX; xIndex++){kt_double x = startX + xIndex * rSearchSpaceResolution.GetX();Vector2<kt_int32s> gridPoint = m_pSearchSpaceProbs->WorldToGrid(Vector2<kt_double>(rSearchCenter.GetX() + x,rSearchCenter.GetY() + y));kt_double response = *(m_pSearchSpaceProbs->GetDataPointer(gridPoint));// response is not a low responseif (response >= (bestResponse - 0.1)){norm += response;accumulatedVarianceXX += (math::Square(x - dx) * response);accumulatedVarianceXY += ((x - dx) * (y - dy) * response);accumulatedVarianceYY += (math::Square(y - dy) * response);}}}if (norm > KT_TOLERANCE){kt_double varianceXX = accumulatedVarianceXX / norm;kt_double varianceXY = accumulatedVarianceXY / norm;kt_double varianceYY = accumulatedVarianceYY / norm;kt_double varianceTHTH = 4 * math::Square(searchAngleResolution);// lower-bound variances so that they are not too small;// ensures that links are not too tightkt_double minVarianceXX = 0.1 * math::Square(rSearchSpaceResolution.GetX());kt_double minVarianceYY = 0.1 * math::Square(rSearchSpaceResolution.GetY());varianceXX = math::Maximum(varianceXX, minVarianceXX);varianceYY = math::Maximum(varianceYY, minVarianceYY);// increase variance for poorer responseskt_double multiplier = 1.0 / bestResponse;rCovariance(0, 0) = varianceXX * multiplier;rCovariance(0, 1) = varianceXY * multiplier;rCovariance(1, 0) = varianceXY * multiplier;rCovariance(1, 1) = varianceYY * multiplier;rCovariance(2, 2) = varianceTHTH; // this value will be set in ComputeAngularCovariance}// if values are 0, set to MAX_VARIANCE// values might be 0 if points are too sparse and thus don't hit other pointsif (math::DoubleEqual(rCovariance(0, 0), 0.0)){rCovariance(0, 0) = MAX_VARIANCE;}if (math::DoubleEqual(rCovariance(1, 1), 0.0)){rCovariance(1, 1) = MAX_VARIANCE;}

}

ii.void ScanMatcher::ComputeAngularCovariance() 角度协方差计算

/*** Computes the angular covariance of the best pose* @param rBestPose* @param bestResponse* @param rSearchCenter* @param rSearchAngleOffset* @param searchAngleResolution* @param rCovariance*/

void ScanMatcher::ComputeAngularCovariance(const Pose2 &rBestPose,kt_double bestResponse,const Pose2 &rSearchCenter,kt_double searchAngleOffset,kt_double searchAngleResolution,Matrix3 &rCovariance)

{// NOTE: do not reset covariance matrix// normalize angle differencekt_double bestAngle = math::NormalizeAngleDifference(rBestPose.GetHeading(), rSearchCenter.GetHeading());Vector2<kt_int32s> gridPoint = m_pCorrelationGrid->WorldToGrid(rBestPose.GetPosition());kt_int32s gridIndex = m_pCorrelationGrid->GridIndex(gridPoint);kt_int32u nAngles = static_cast<kt_int32u>(math::Round(searchAngleOffset * 2 / searchAngleResolution) + 1);kt_double angle = 0.0;kt_double startAngle = rSearchCenter.GetHeading() - searchAngleOffset;kt_double norm = 0.0;kt_double accumulatedVarianceThTh = 0.0;for (kt_int32u angleIndex = 0; angleIndex < nAngles; angleIndex++){angle = startAngle + angleIndex * searchAngleResolution;kt_double response = GetResponse(angleIndex, gridIndex);// response is not a low responseif (response >= (bestResponse - 0.1)){norm += response;accumulatedVarianceThTh += (math::Square(angle - bestAngle) * response);}}assert(math::DoubleEqual(angle, rSearchCenter.GetHeading() + searchAngleOffset));if (norm > KT_TOLERANCE){if (accumulatedVarianceThTh < KT_TOLERANCE){accumulatedVarianceThTh = math::Square(searchAngleResolution);}accumulatedVarianceThTh /= norm;}else{accumulatedVarianceThTh = 1000 * math::Square(searchAngleResolution);}rCovariance(2, 2) = accumulatedVarianceThTh;

}

这篇关于karto算法论文阅读及代码讲解-扫描匹配(correlative scan matching,csm)的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!