TiDB Getting Started: Scaling a Cluster Out and In

Introduction

This post tests scaling a TiDB cluster out and in, as well as backup and restore.

References

Scale a TiDB Cluster Using TiUP | PingCAP Docs

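Steps (running as the tidb user caused errors, so the cluster below is started as root)

Scaling in PD and what happens afterwards

# When the cluster ran as the tidb user, PD scale-out failed, but as root it succeeded, so it is recommended to run the cluster as root:

global:
  # # The user who runs the tidb cluster.
  user: "root"  # optional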

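# Destroy the existing cluster (this removes its data and deployment directories)

tiup cluster destroy tidb-deploy

# Check the hosts again before recreating the cluster

tiup cluster check ./topology.yaml --apply --user root -p

# Deploy the cluster

tiup cluster deploy tidb-deploy v6.5.1 ./topology.yaml --user root -p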

1. Check the initial cluster status.

tiup cluster display tidb-deploy

As shown below, every instance is Up:

[root@master ~]# tiup cluster display tidb-deploy

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.11.3/tiup-cluster display tidb-deploy

Cluster type: tidb

Cluster name: tidb-deploy

Cluster version: v6.5.1

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.66.20:2379/dashboard

Grafana URL: http://192.168.66.20:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.66.20:9093 alertmanager 192.168.66.20 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.66.20:3000 grafana 192.168.66.20 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.66.10:2379 pd 192.168.66.10 2379/2380 linux/x86_64 Up|L /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.66.20:2379 pd 192.168.66.20 2379/2380 linux/x86_64 Up|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.66.21:2379 pd 192.168.66.21 2379/2380 linux/x86_64 Up /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.66.20:9090 prometheus 192.168.66.20 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.66.10:4000 tidb 192.168.66.10 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.66.10:9000 tiflash 192.168.66.10 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.66.10:20160 tikv 192.168.66.10 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.20:20160 tikv 192.168.66.20 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.21:20160 tikv 192.168.66.21 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
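Reading this table by eye is fine for a small cluster. The Python sketch below pulls the instance rows out of saved `display` output, counts instances per role, and picks out the PD leader (the `L` flag) along with anything not `Up`. It assumes the whitespace-separated, 8-column row layout shown above. `parse_display` and `summarize` are hypothetical helpers, not part of TiUP. Statuses that contain spaces, which TiUP can print for instances being taken offline, would need extra handling.

```python
from collections import Counter

# Roles that appear in the second column of the display output above.
ROLES = {"alertmanager", "grafana", "pd", "prometheus", "tidb", "tiflash", "tikv"}


def parse_display(text):
    """Return one dict per instance row of `tiup cluster display` output."""
    instances = []
    for line in text.splitlines():
        fields = line.split()
        # Instance rows have 8 whitespace-separated fields and a known role;
        # this skips the header, the "-- ----" ruler and the summary lines.
        if len(fields) == 8 and fields[1] in ROLES:
            inst_id, role, host, ports, _arch, status, data_dir, deploy_dir = fields
            instances.append({
                "id": inst_id,
                "role": role,
                "host": host,
                "ports": ports.split("/"),
                "status": status.split("|"),  # "Up|L|UI" -> ["Up", "L", "UI"]
                "data_dir": data_dir,
                "deploy_dir": deploy_dir,
            })
    return instances


def summarize(instances):
    """Count instances per role, find the PD leader and any non-Up instance."""
    roles = Counter(inst["role"] for inst in instances)
    pd_leader = [i["id"] for i in instances if i["role"] == "pd" and "L" in i["status"]]
    not_up = [i["id"] for i in instances if "Up" not in i["status"]]
    return roles, pd_leader, not_up


# A few rows copied from the output above.
SAMPLE = """\
ID Role Host Ports OS/Arch Status Data Dir Deploy Dir
-- ---- ---- ----- ------- ------ -------- ----------
192.168.66.10:2379 pd 192.168.66.10 2379/2380 linux/x86_64 Up|L /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.66.20:2379 pd 192.168.66.20 2379/2380 linux/x86_64 Up|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.66.21:2379 pd 192.168.66.21 2379/2380 linux/x86_64 Up /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.66.10:20160 tikv 192.168.66.10 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
"""

if __name__ == "__main__":
    roles, pd_leader, not_up = summarize(parse_display(SAMPLE))
    print(roles["pd"], roles["tikv"], pd_leader, not_up)  # 3 1 ['192.168.66.10:2379'] []
```

To use it, save the output with `tiup cluster display tidb-deploy > display.txt` and pass the file contents to `parse_display`.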

2. Scale in one PD node.

tiup cluster scale-in tidb-deploy --node 192.168.66.10:2379

# Check the result after scaling in

tiup cluster display tidb-deploy

As shown below, there are now 2 PD nodes:

[root@master ~]# tiup cluster display tidb-deploy

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.11.3/tiup-cluster display tidb-deploy

Cluster type: tidb

Cluster name: tidb-deploy

Cluster version: v6.5.1

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.66.20:2379/dashboard

Grafana URL: http://192.168.66.20:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.66.20:9093 alertmanager 192.168.66.20 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.66.20:3000 grafana 192.168.66.20 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.66.20:2379 pd 192.168.66.20 2379/2380 linux/x86_64 Up|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.66.21:2379 pd 192.168.66.21 2379/2380 linux/x86_64 Up|L /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.66.20:9090 prometheus 192.168.66.20 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.66.10:4000 tidb 192.168.66.10 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.66.10:9000 tiflash 192.168.66.10 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.66.10:20160 tikv 192.168.66.10 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.20:20160 tikv 192.168.66.20 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.21:20160 tikv 192.168.66.21 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

Check whether the cluster still works with 2 PD nodes.

mysql --comments --host 127.0.0.1 --port 4000 -u root -p

use test;

create table test1(name varchar(10));

insert into test1 values('1');

insert into test1 values('1');

insert into test1 values('1');

select * from test1;

mysql> select * from test1;

+------+

| name |

+------+

| 1 |

| 1 |

| 1 |

+------+

3 rows in set (0.00 sec)

With only 2 PD nodes the cluster still works normally. Next, scale in one more PD node.

tiup cluster scale-in tidb-deploy --node 192.168.66.20:2379

# Check the result after scaling in

tiup cluster display tidb-deploy

[root@master ~]# tiup cluster display tidb-deploy

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.11.3/tiup-cluster display tidb-deploy

Cluster type: tidb

Cluster name: tidb-deploy

Cluster version: v6.5.1

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.66.21:2379/dashboard

Grafana URL: http://192.168.66.20:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.66.20:9093 alertmanager 192.168.66.20 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.66.20:3000 grafana 192.168.66.20 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.66.21:2379 pd 192.168.66.21 2379/2380 linux/x86_64 Up|L|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.66.20:9090 prometheus 192.168.66.20 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.66.10:4000 tidb 192.168.66.10 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.66.10:9000 tiflash 192.168.66.10 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.66.10:20160 tikv 192.168.66.10 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.20:20160 tikv 192.168.66.20 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.21:20160 tikv 192.168.66.21 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

Test whether the cluster still works:

mysql --comments --host 127.0.0.1 --port 4000 -u root -p

use test;

create table test2(name varchar(10));

insert into test2 values('1');

insert into test2 values('1');

insert into test2 values('1');

select * from test2;

mysql> select * from test2;

+------+

| name |

+------+

| 1 |

| 1 |

| 1 |

| 1 |

+------+

4 rows in set (0.00 sec)

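Conclusion:

The number of PD nodes mainly provides high availability. Starting from 3 PD nodes, the cluster kept working after scaling in to 2 and then to 1. The only visible difference was that the Dashboard address moved once the PD node hosting it was removed, from http://192.168.66.20:2379/dashboard to http://192.168.66.21:2379/dashboard. This does not mean fewer PD nodes are equally safe: with 2 or 1 PD nodes, losing any single PD node leaves no majority, so the cluster can no longer tolerate a PD failure.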

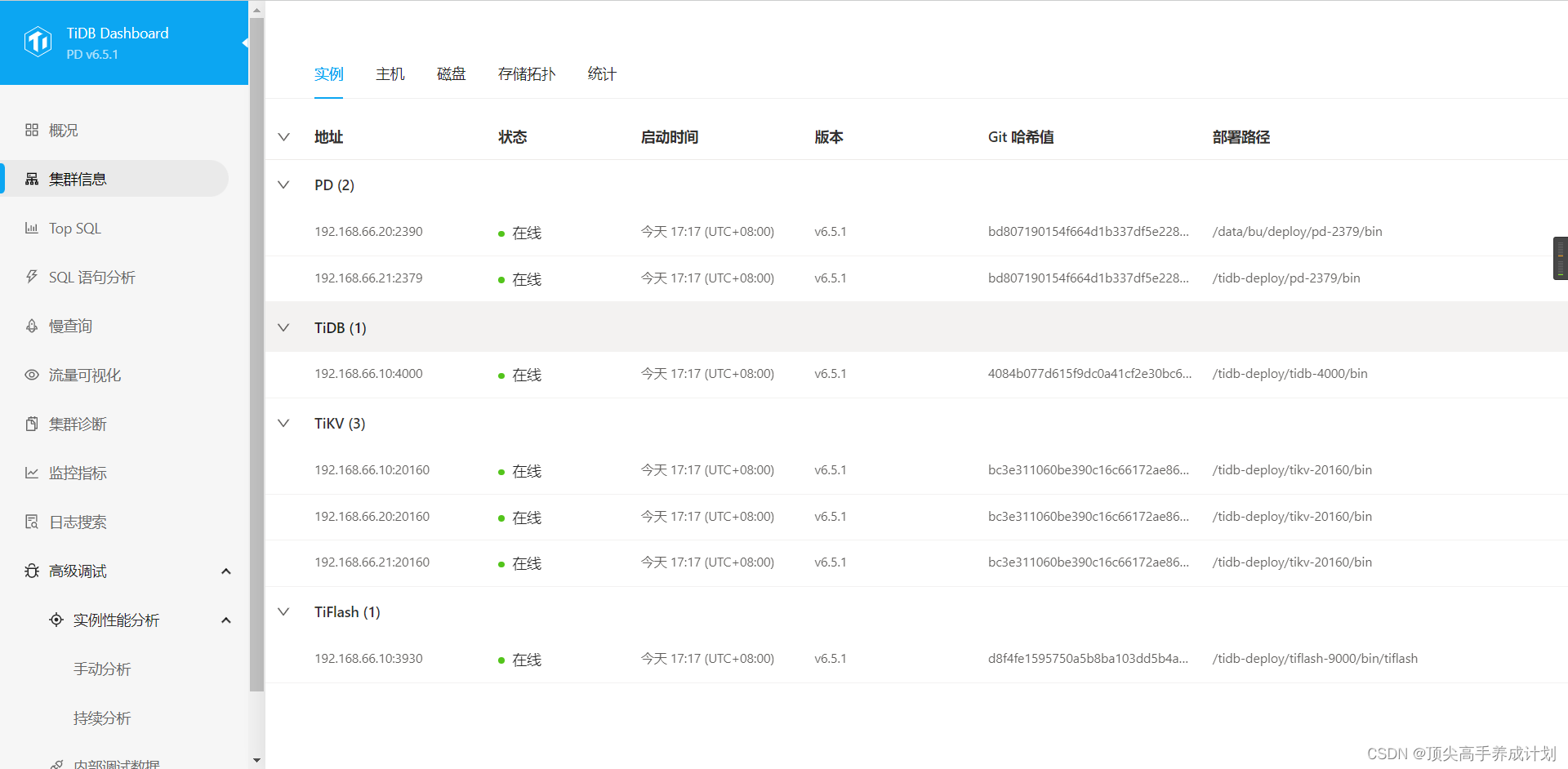
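PD stores the cluster metadata in an embedded etcd. etcd replicates through Raft and needs a majority of members to make progress. The `scale-in` command removes the PD member from the group, so the majority is recomputed over the members that remain. That is why 2 and then 1 PD node kept working above, and also why they give no fault tolerance. The Python sketch below works through the arithmetic. `quorum` and `tolerated_failures` are illustrative names.

```python
def quorum(members: int) -> int:
    """Smallest majority of a Raft group with `members` voters."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many voters can be lost while a majority still remains."""
    return members - quorum(members)


if __name__ == "__main__":
    # Member counts from the scale-in test above (plus 5 for comparison).
    for n in (5, 3, 2, 1):
        print(f"{n} PD: quorum={quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
    # 5 PD: quorum=3, tolerates 2 failure(s)
    # 3 PD: quorum=2, tolerates 1 failure(s)
    # 2 PD: quorum=2, tolerates 0 failure(s)
    # 1 PD: quorum=1, tolerates 0 failure(s)
```

Going from 3 to 2 PD nodes adds no capacity to survive failures; only an odd count above 1 actually gains fault tolerance.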

Scaling out PD

1. Write the scale-out configuration file.

vi scale-out.yaml

pd_servers:
  - host: 192.168.66.10
    ssh_port: 22
    name: pd-1
    client_port: 2377
    peer_port: 2381
    deploy_dir: /data/nihao/deploy/pd-2379
    data_dir: /data/nihao/data/pd-2379
    log_dir: /data/nihao/log/pd-2379

2. Run the following commands.

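# Before running scale-out, use check and check --apply to detect and automatically fix potential risks in the cluster:

tiup cluster check tidb-deploy scale-out.yaml --cluster --user root -p

# The check reports items that should be tuned, for example:

192.168.66.10 thp Fail THP is enabled, please disable it for best performance

# Automatically fix the reported risks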

tiup cluster check tidb-deploy scale-out.yaml --cluster --apply --user root -p
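You can confirm on a host that the THP warning has been dealt with. On Linux the active Transparent Huge Pages mode is exposed in `/sys/kernel/mm/transparent_hugepage/enabled` as a line such as `always madvise [never]`, with the active mode in brackets. The Python sketch below reads that line and reports whether THP is disabled, which is what the check asks for. `active_thp_mode` and `thp_disabled` are hypothetical helpers, not part of TiUP.

```python
import re
from pathlib import Path
from typing import Optional

THP_PATH = Path("/sys/kernel/mm/transparent_hugepage/enabled")


def active_thp_mode(content: str) -> Optional[str]:
    """Return the bracketed (active) mode, e.g. 'never' from 'always madvise [never]'."""
    match = re.search(r"\[(\w+)\]", content)
    return match.group(1) if match else None


def thp_disabled(path: Path = THP_PATH) -> Optional[bool]:
    """True if THP is 'never', False otherwise, None if the file is absent (e.g. not Linux)."""
    if not path.exists():
        return None
    return active_thp_mode(path.read_text()) == "never"


if __name__ == "__main__":
    print("THP disabled:", thp_disabled())
```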

tiup cluster scale-out tidb-deploy scale-out.yaml -p

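tiup cluster display tidb-deploy

Note: the new PD instance in this output (192.168.66.20:2390/2391, with directories under /data/bu) does not match the scale-out.yaml above. The author evidently ran scale-out with a different file, and your output will reflect your own file.

[root@master ~]# tiup cluster display tidb-deploy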

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.11.3/tiup-cluster display tidb-deploy

Cluster type: tidb

Cluster name: tidb-deploy

Cluster version: v6.5.1

Deploy user: root

SSH type: builtin

Dashboard URL: http://192.168.66.21:2379/dashboard

Grafana URL: http://192.168.66.20:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.66.20:9093 alertmanager 192.168.66.20 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.66.20:3000 grafana 192.168.66.20 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.66.20:2390 pd 192.168.66.20 2390/2391 linux/x86_64 Up /data/bu/data/pd-2379 /data/bu/deploy/pd-2379

192.168.66.21:2379 pd 192.168.66.21 2379/2380 linux/x86_64 Up|L|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.66.20:9090 prometheus 192.168.66.20 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.66.10:4000 tidb 192.168.66.10 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.66.10:9000 tiflash 192.168.66.10 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.66.10:20160 tikv 192.168.66.10 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.20:20160 tikv 192.168.66.20 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.21:20160 tikv 192.168.66.21 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
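When several nodes are added, hand-editing scale-out files gets error-prone. The Python sketch below renders a file with the same shape as the scale-out.yaml in this section from plain Python data. `render_scale_out` is a hypothetical helper. It emits only the flat `section: - key: value` layout used here, not general YAML, so values containing characters such as `:` or `#` would need quoting.

```python
from typing import Dict, List, Union

Value = Union[str, int]


def render_scale_out(section: str, instances: List[Dict[str, Value]]) -> str:
    """Emit 'section:' followed by one '- key: value' list item per instance."""
    lines = [f"{section}:"]
    for inst in instances:
        for index, (key, value) in enumerate(inst.items()):
            # The first key opens the list item; the remaining keys align under it.
            prefix = "  - " if index == 0 else "    "
            lines.append(f"{prefix}{key}: {value}")
    return "\n".join(lines) + "\n"


# Values copied from the scale-out.yaml shown in this section.
PD_NODE = {
    "host": "192.168.66.10",
    "ssh_port": 22,
    "name": "pd-1",
    "client_port": 2377,
    "peer_port": 2381,
    "deploy_dir": "/data/nihao/deploy/pd-2379",
    "data_dir": "/data/nihao/data/pd-2379",
    "log_dir": "/data/nihao/log/pd-2379",
}

if __name__ == "__main__":
    print(render_scale_out("pd_servers", [PD_NODE]), end="")
```

Redirect the output to scale-out.yaml, then run the same `check` and `scale-out` commands as above.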

Scaling out TiKV

# Scale out

vi tikv_scale-out.yaml

tikv_servers:
  - host: 192.168.66.10
    ssh_port: 22
    port: 20161
    status_port: 20181
    deploy_dir: /data/deployy/install/deploy/tikv-20160
    data_dir: /data/deployy/install/data/tikv-20160
    log_dir: /data/deployy/install/log/tikv-20160

tiup cluster scale-out tidb-deploy tikv_scale-out.yaml -p
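The new TiKV instance lands on 192.168.66.10, which already runs TiDB, TiFlash and another TiKV. That is why it gets its own ports (20161/20181) and its own directories. The Python sketch below does this kind of collision check before scaling out. The existing instances are copied from the display output above. `find_conflicts` is a hypothetical helper, and TiUP's own `check` may catch cases this misses, such as one instance's directory nested inside another's.

```python
from typing import Dict, List

# Instances already on 192.168.66.10, copied from the display output above.
EXISTING = [
    {"host": "192.168.66.10", "role": "tidb", "ports": [4000, 10080],
     "dirs": ["/tidb-deploy/tidb-4000"]},
    {"host": "192.168.66.10", "role": "tiflash",
     "ports": [9000, 8123, 3930, 20170, 20292, 8234],
     "dirs": ["/tidb-data/tiflash-9000", "/tidb-deploy/tiflash-9000"]},
    {"host": "192.168.66.10", "role": "tikv", "ports": [20160, 20180],
     "dirs": ["/tidb-data/tikv-20160", "/tidb-deploy/tikv-20160"]},
]

# The new TiKV instance from tikv_scale-out.yaml.
NEW_TIKV = {
    "host": "192.168.66.10", "role": "tikv", "ports": [20161, 20181],
    "dirs": ["/data/deployy/install/deploy/tikv-20160",
             "/data/deployy/install/data/tikv-20160",
             "/data/deployy/install/log/tikv-20160"],
}


def find_conflicts(existing: List[Dict], new: Dict) -> List[str]:
    """List ports/directories of `new` already used by instances on the same host."""
    same_host = [inst for inst in existing if inst["host"] == new["host"]]
    used_ports = {port for inst in same_host for port in inst["ports"]}
    used_dirs = {path for inst in same_host for path in inst["dirs"]}
    problems = [f"port {p} already used on {new['host']}"
                for p in new["ports"] if p in used_ports]
    problems += [f"directory {d} already used on {new['host']}"
                 for d in new["dirs"] if d in used_dirs]
    return problems


if __name__ == "__main__":
    print(find_conflicts(EXISTING, NEW_TIKV) or "no conflicts")
```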

# Show the result

tiup cluster display tidb-deploy

As shown below, there are now 4 TiKV instances:

[root@master ~]# tiup cluster display tidb-deploy

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.11.3/tiup-cluster display tidb-deploy

Cluster type: tidb

Cluster name: tidb-deploy

Cluster version: v6.5.1

Deploy user: root

SSH type: builtin

Dashboard URL: http://192.168.66.21:2379/dashboard

Grafana URL: http://192.168.66.20:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.66.20:9093 alertmanager 192.168.66.20 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.66.20:3000 grafana 192.168.66.20 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.66.20:2390 pd 192.168.66.20 2390/2391 linux/x86_64 Up /data/bu/data/pd-2379 /data/bu/deploy/pd-2379

192.168.66.21:2379 pd 192.168.66.21 2379/2380 linux/x86_64 Up|L|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.66.20:9090 prometheus 192.168.66.20 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.66.10:4000 tidb 192.168.66.10 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.66.10:9000 tiflash 192.168.66.10 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.66.10:20160 tikv 192.168.66.10 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.10:20161 tikv 192.168.66.10 20161/20181 linux/x86_64 Up /data/deployy/install/data/tikv-20160 /data/deployy/install/deploy/tikv-20160

192.168.66.20:20160 tikv 192.168.66.20 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.21:20160 tikv 192.168.66.21 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
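With many instances it is easier to diff two `display` outputs than to compare them line by line. The Python sketch below extracts the instance IDs (the first column, in `host:port` form) from a before-and-after pair and reports what was added or removed. It assumes IPv4 hosts. `instance_ids` and `diff_display` are hypothetical helpers.

```python
import re
from typing import Set, Tuple

# An instance row starts with its ID in IPv4 host:port form.
ID_ROW = re.compile(r"^(\d{1,3}(?:\.\d{1,3}){3}:\d+)\s")


def instance_ids(display_text: str) -> Set[str]:
    """Collect the instance IDs from `tiup cluster display` output."""
    ids = set()
    for line in display_text.splitlines():
        match = ID_ROW.match(line)
        if match:
            ids.add(match.group(1))
    return ids


def diff_display(before: str, after: str) -> Tuple[Set[str], Set[str]]:
    """Return (added, removed) instance IDs between two display outputs."""
    old, new = instance_ids(before), instance_ids(after)
    return new - old, old - new


# TiKV rows copied from the outputs before and after the TiKV scale-out.
BEFORE = """\
192.168.66.10:20160 tikv 192.168.66.10 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.66.20:20160 tikv 192.168.66.20 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.66.21:20160 tikv 192.168.66.21 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
"""
AFTER = BEFORE + (
    "192.168.66.10:20161 tikv 192.168.66.10 20161/20181 linux/x86_64 Up "
    "/data/deployy/install/data/tikv-20160 /data/deployy/install/deploy/tikv-20160\n"
)

if __name__ == "__main__":
    added, removed = diff_display(BEFORE, AFTER)
    print("added:", sorted(added), "removed:", sorted(removed))
```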

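Scaling out TiDB

vi tidb_scale-out.yaml

tidb_servers:
  - host: 192.168.66.10
    ssh_port: 22
    port: 4001
    status_port: 10081
    deploy_dir: /data/deploy/install/deploy/tidb-4000
    log_dir: /data/deploy/install/log/tidb-4000

tiup cluster scale-out tidb-deploy tidb_scale-out.yaml -p

# Show the result

tiup cluster display tidb-deploy

The output is shown below. The deploy directory of the new instance (/data/deployyy/install/deploy/tidb-4000) differs from the deploy_dir in the file above, so a slightly different file was evidently used for this run.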

[root@master ~]# tiup cluster display tidb-deploy

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.11.3/tiup-cluster display tidb-deploy

Cluster type: tidb

Cluster name: tidb-deploy

Cluster version: v6.5.1

Deploy user: root

SSH type: builtin

Dashboard URL: http://192.168.66.21:2379/dashboard

Grafana URL: http://192.168.66.20:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.66.20:9093 alertmanager 192.168.66.20 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.66.20:3000 grafana 192.168.66.20 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.66.20:2390 pd 192.168.66.20 2390/2391 linux/x86_64 Up /data/bu/data/pd-2379 /data/bu/deploy/pd-2379

192.168.66.21:2379 pd 192.168.66.21 2379/2380 linux/x86_64 Up|L|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.66.20:9090 prometheus 192.168.66.20 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.66.10:4000 tidb 192.168.66.10 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.66.10:4001 tidb 192.168.66.10 4001/10081 linux/x86_64 Up - /data/deployyy/install/deploy/tidb-4000

192.168.66.10:9000 tiflash 192.168.66.10 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.66.10:20160 tikv 192.168.66.10 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.10:20161 tikv 192.168.66.10 20161/20181 linux/x86_64 Up /data/deployy/install/data/tikv-20160 /data/deployy/install/deploy/tikv-20160

192.168.66.20:20160 tikv 192.168.66.20 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.66.21:20160 tikv 192.168.66.21 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
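TiDB servers are stateless, so after this scale-out a client may connect to either 192.168.66.10:4000 or 192.168.66.10:4001. The toy round-robin picker below only illustrates spreading connections over the two endpoints taken from the output above. It builds the same `mysql` command used earlier in this post. In production a load balancer such as HAProxy is the usual choice. `endpoints_from_ids`, `round_robin` and `mysql_command` are hypothetical helpers.

```python
from itertools import cycle
from typing import Iterator, List, Tuple

Endpoint = Tuple[str, int]

# TiDB instance IDs from the display output above (host:port).
TIDB_IDS = ["192.168.66.10:4000", "192.168.66.10:4001"]


def endpoints_from_ids(ids: List[str]) -> List[Endpoint]:
    """Split 'host:port' IDs into (host, port) pairs."""
    endpoints = []
    for instance_id in ids:
        host, port = instance_id.rsplit(":", 1)
        endpoints.append((host, int(port)))
    return endpoints


def round_robin(endpoints: List[Endpoint]) -> Iterator[Endpoint]:
    """Yield the endpoints in turn, forever."""
    return cycle(endpoints)


def mysql_command(endpoint: Endpoint) -> str:
    """Build the mysql client command used earlier, pointed at one endpoint."""
    host, port = endpoint
    return f"mysql --comments --host {host} --port {port} -u root -p"


if __name__ == "__main__":
    picker = round_robin(endpoints_from_ids(TIDB_IDS))
    for _ in range(3):
        print(mysql_command(next(picker)))
```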

Renaming the cluster

tiup cluster rename tidb-deploy tidb_test

# View the renamed cluster

tiup cluster display tidb_test

Example cluster deployment file (topology.yaml)

# # Global variables are applied to all deployments and used as the default value of
# # the deployments if a specific deployment value is missing.
global:
  # # The user who runs the tidb cluster.
  user: "root"
  # # group is used to specify the group name the user belong to if it's not the same as user.
  # group: "tidb"
  # # SSH port of servers in the managed cluster.
  ssh_port: 22
  # # Storage directory for cluster deployment files, startup scripts, and configuration files.
  deploy_dir: "/tidb-deploy"
  # # TiDB Cluster data storage directory
  data_dir: "/tidb-data"
  # # Supported values: "amd64", "arm64" (default: "amd64")
  arch: "amd64"
  # # Resource Control is used to limit the resource of an instance.
  # # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html
  # # Supports using instance-level `resource_control` to override global `resource_control`.
  # resource_control:
  #   # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#MemoryLimit=bytes
  #   memory_limit: "2G"
  #   # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#CPUQuota=
  #   # The percentage specifies how much CPU time the unit shall get at maximum, relative to the total CPU time available on one CPU. Use values > 100% for allotting CPU time on more than one CPU.
  #   # Example: CPUQuota=200% ensures that the executed processes will never get more than two CPU time.
  #   cpu_quota: "200%"
  #   # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#IOReadBandwidthMax=device%20bytes
  #   io_read_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"
  #   io_write_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"

# # Monitored variables are applied to all the machines.
monitored:
  # # The communication port for reporting system information of each node in the TiDB cluster.
  node_exporter_port: 9100
  # # Blackbox_exporter communication port, used for TiDB cluster port monitoring.
  blackbox_exporter_port: 9115
  # # Storage directory for deployment files, startup scripts, and configuration files of monitoring components.
  # deploy_dir: "/tidb-deploy/monitored-9100"
  # # Data storage directory of monitoring components.
  # data_dir: "/tidb-data/monitored-9100"
  # # Log storage directory of the monitoring component.
  # log_dir: "/tidb-deploy/monitored-9100/log"

# # Server configs are used to specify the runtime configuration of TiDB components.

# # All configuration items can be found in TiDB docs:
# # - TiDB: https://pingcap.com/docs/stable/reference/configuration/tidb-server/configuration-file/
# # - TiKV: https://pingcap.com/docs/stable/reference/configuration/tikv-server/configuration-file/
# # - PD: https://pingcap.com/docs/stable/reference/configuration/pd-server/configuration-file/
# # - TiFlash: https://docs.pingcap.com/tidb/stable/tiflash-configuration
# #
# # All configuration items use points to represent the hierarchy, e.g:
# #   readpool.storage.use-unified-pool
# #           ^       ^
# # - example: https://github.com/pingcap/tiup/blob/master/examples/topology.example.yaml.
# # You can overwrite this configuration via the instance-level `config` field.
# server_configs:
#   tidb:
#   tikv:
#   pd:
#   tiflash:
#   tiflash-learner:

# # Server configs are used to specify the configuration of PD Servers.

pd_servers:
  # # The ip address of the PD Server.
  #- host: 10.0.1.11
  - host: 192.168.66.10
  - host: 192.168.66.20
  - host: 192.168.66.21
    # # SSH port of the server.
    # ssh_port: 22
    # # PD Server name
    # name: "pd-1"
    # # communication port for TiDB Servers to connect.
    # client_port: 2379
    # # Communication port among PD Server nodes.
    # peer_port: 2380
    # # PD Server deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/pd-2379"
    # # PD Server data storage directory.
    # data_dir: "/tidb-data/pd-2379"
    # # PD Server log file storage directory.
    # log_dir: "/tidb-deploy/pd-2379/log"
    # # numa node bindings.
    # numa_node: "0,1"
    # # The following configs are used to overwrite the `server_configs.pd` values.
    # config:
    #   schedule.max-merge-region-size: 20
    #   schedule.max-merge-region-keys: 200000
  # - host: 10.0.1.12
  #   ssh_port: 22
  #   name: "pd-1"
  #   client_port: 2379
  #   peer_port: 2380
  #   deploy_dir: "/tidb-deploy/pd-2379"
  #   data_dir: "/tidb-data/pd-2379"
  #   log_dir: "/tidb-deploy/pd-2379/log"
  #   numa_node: "0,1"
  #   config:
  #     schedule.max-merge-region-size: 20
  #     schedule.max-merge-region-keys: 200000
  # - host: 10.0.1.13
  #   ssh_port: 22
  #   name: "pd-1"
  #   client_port: 2379
  #   peer_port: 2380
  #   deploy_dir: "/tidb-deploy/pd-2379"
  #   data_dir: "/tidb-data/pd-2379"
  #   log_dir: "/tidb-deploy/pd-2379/log"
  #   numa_node: "0,1"
  #   config:
  #     schedule.max-merge-region-size: 20
  #     schedule.max-merge-region-keys: 200000

# # Server configs are used to specify the configuration of TiDB Servers.

tidb_servers:
  # # The ip address of the TiDB Server.
  #- host: 10.0.1.14
  - host: 192.168.66.10
    # # SSH port of the server.
    # ssh_port: 22
    # # The port for clients to access the TiDB cluster.
    # port: 4000
    # # TiDB Server status API port.
    # status_port: 10080
    # # TiDB Server deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/tidb-4000"
    # # TiDB Server log file storage directory.
    # log_dir: "/tidb-deploy/tidb-4000/log"
  # # The ip address of the TiDB Server.
  #- host: 10.0.1.15
  #  ssh_port: 22
  #  port: 4000
  #  status_port: 10080
  #  deploy_dir: "/tidb-deploy/tidb-4000"
  #  log_dir: "/tidb-deploy/tidb-4000/log"
  #- host: 10.0.1.16
  #  ssh_port: 22
  #  port: 4000
  #  status_port: 10080
  #  deploy_dir: "/tidb-deploy/tidb-4000"
  #  log_dir: "/tidb-deploy/tidb-4000/log"

# # Server configs are used to specify the configuration of TiKV Servers.

tikv_servers:
  # # The ip address of the TiKV Server.
  # - host: 10.0.1.17
  - host: 192.168.66.10
  - host: 192.168.66.20
  - host: 192.168.66.21
    # # SSH port of the server.
    # ssh_port: 22
    # # TiKV Server communication port.
    # port: 20160
    # # TiKV Server status API port.
    # status_port: 20180
    # # TiKV Server deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/tikv-20160"
    # # TiKV Server data storage directory.
    # data_dir: "/tidb-data/tikv-20160"
    # # TiKV Server log file storage directory.
    # log_dir: "/tidb-deploy/tikv-20160/log"
    # # The following configs are used to overwrite the `server_configs.tikv` values.
    # config:
    #   log.level: warn
  # # The ip address of the TiKV Server.
  # - host: 10.0.1.18
  #   ssh_port: 22
  #   port: 20160
  #   status_port: 20180
  #   deploy_dir: "/tidb-deploy/tikv-20160"
  #   data_dir: "/tidb-data/tikv-20160"
  #   log_dir: "/tidb-deploy/tikv-20160/log"
  #   config:
  #     log.level: warn
  # - host: 10.0.1.19
  #   ssh_port: 22
  #   port: 20160
  #   status_port: 20180
  #   deploy_dir: "/tidb-deploy/tikv-20160"
  #   data_dir: "/tidb-data/tikv-20160"
  #   log_dir: "/tidb-deploy/tikv-20160/log"
  #   config:
  #     log.level: warn

# # Server configs are used to specify the configuration of TiFlash Servers.

tiflash_servers:
  # # The ip address of the TiFlash Server.
  #- host: 10.0.1.20
    # # SSH port of the server.
    # ssh_port: 22
    # # TiFlash TCP Service port.
    # tcp_port: 9000
    # # TiFlash HTTP Service port.
    # http_port: 8123
    # # TiFlash raft service and coprocessor service listening address.
    # flash_service_port: 3930
    # # TiFlash Proxy service port.
    # flash_proxy_port: 20170
    # # TiFlash Proxy metrics port.
    # flash_proxy_status_port: 20292
    # # TiFlash metrics port.
    # metrics_port: 8234
    # # TiFlash Server deployment file, startup script, configuration file storage directory.
    # deploy_dir: /tidb-deploy/tiflash-9000
    ## With cluster version >= v4.0.9 and you want to deploy a multi-disk TiFlash node, it is recommended to
    ## check config.storage.* for details. The data_dir will be ignored if you defined those configurations.
    ## Setting data_dir to a ','-joined string is still supported but deprecated.
    ## Check https://docs.pingcap.com/tidb/stable/tiflash-configuration#multi-disk-deployment for more details.
    # # TiFlash Server data storage directory.
    # data_dir: /tidb-data/tiflash-9000
    # # TiFlash Server log file storage directory.
    # log_dir: /tidb-deploy/tiflash-9000/log
  # # The ip address of the TiFlash Server.
  #- host: 10.0.1.21
  - host: 192.168.66.10
    # ssh_port: 22
    # tcp_port: 9000
    # http_port: 8123
    # flash_service_port: 3930
    # flash_proxy_port: 20170
    # flash_proxy_status_port: 20292
    # metrics_port: 8234
    # deploy_dir: /tidb-deploy/tiflash-9000
    # data_dir: /tidb-data/tiflash-9000
    # log_dir: /tidb-deploy/tiflash-9000/log

# # Server configs are used to specify the configuration of Prometheus Server.

monitoring_servers:
  # # The ip address of the Monitoring Server.
  # - host: 10.0.1.22
  - host: 192.168.66.20
    # # SSH port of the server.
    # ssh_port: 22
    # # Prometheus Service communication port.
    # port: 9090
    # # ng-monitoring service communication port
    # ng_port: 12020
    # # Prometheus deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/prometheus-8249"
    # # Prometheus data storage directory.
    # data_dir: "/tidb-data/prometheus-8249"
    # # Prometheus log file storage directory.
    # log_dir: "/tidb-deploy/prometheus-8249/log"

# # Server configs are used to specify the configuration of Grafana Servers.

grafana_servers:
  # # The ip address of the Grafana Server.
  #- host: 10.0.1.22
  - host: 192.168.66.20
    # # Grafana web port (browser access)
    # port: 3000
    # # Grafana deployment file, startup script, configuration file storage directory.
    # deploy_dir: /tidb-deploy/grafana-3000

# # Server configs are used to specify the configuration of Alertmanager Servers.

alertmanager_servers:
  # # The ip address of the Alertmanager Server.
  #- host: 10.0.1.22
  - host: 192.168.66.20
    # # SSH port of the server.
    # ssh_port: 22
    # # Alertmanager web service port.
    # web_port: 9093
    # # Alertmanager communication port.
    # cluster_port: 9094
    # # Alertmanager deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/alertmanager-9093"
    # # Alertmanager data storage directory.
    # data_dir: "/tidb-data/alertmanager-9093"
    # # Alertmanager log file storage directory.
    # log_dir: "/tidb-deploy/alertmanager-9093/log"