本文主要是介绍Amazon Q Developer 实战:从新代码生成到遗留代码优化(下),希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

简述

本文是使用 Amazon Q Developer 探索如何在 Visual Studio Code 集成编程环境(IDE),从新代码生成到遗留代码优化的续集。在上一篇博客《Amazon Q Developer 实战:从新代码生成到遗留代码优化(上)》中,我们演示了如何使用 Amazon Q Developer 编写新代码和优化遗留代码。正如我们在上一篇的“优化遗留代码”章节所讨论的,优化遗留代码是一个迭代渐进的过程。

亚马逊云科技开发者社区为开发者们提供全球的开发技术资源。这里有技术文档、开发案例、技术专栏、培训视频、活动与竞赛等。帮助中国开发者对接世界最前沿技术,观点,和项目,并将中国优秀开发者或技术推荐给全球云社区。如果你还没有关注/收藏,看到这里请一定不要匆匆划过,点这里让它成为你的技术宝库!

本文详细记录了使用 Amazon Q Developer 优化遗留代码的全过程。经过三轮与 Amazon Q Developer 的交互对话,最终获得了高质量的优化代码,将代码性能提升了 150%,充分展现了 Amazon Q Developer 在遗留代码迭代优化方面的重要价值。

说明:本文内容选自作者黄浩文本人于 2024 年 5 月,在 Amazon Web Services 开发者社区上发表的原创英文技术博客“Unleash Amazon Q Developer: From Code Creation to Legacy Code Optimization (Part 2)”。在运行于 Amazon Bedrock的Claude 3 Sonnet v1 大模型的辅助下,将英文版翻译为该简体中文版。全文略有修改。

原英文博客文章链接如下,供参考:

Community | Unleash Amazon Q Developer: From Code Creation to Legacy Code optimization (Part 2)

和 Amazon Q 的第一轮互动

我发送给 Amazon Q 对话窗口的英文提示词如下:

“Please carefully review the code I have written. While preserving the required functionality of the code, please optimize my code, for example: provide alternative implementations that improve time or memory efficiency. Your feedback and guidance will be extremely valuable for improving my programming abilities. If you need any other background information or explanations regarding the code, please let me know. Finally, please provide the complete runnable code in its entirety.”

翻译成中文大致如下:

“请仔细审查我编写的代码。在保留所需代码功能的同时,请优化我的代码,例如:提供可以提高时间或内存效率的替代实现。你的反馈和指导对于提高我的编程能力将是极其宝贵的。如果你需要任何其他有关代码的背景信息或解释,请告诉我。最后,请提供完整的可运行代码。”

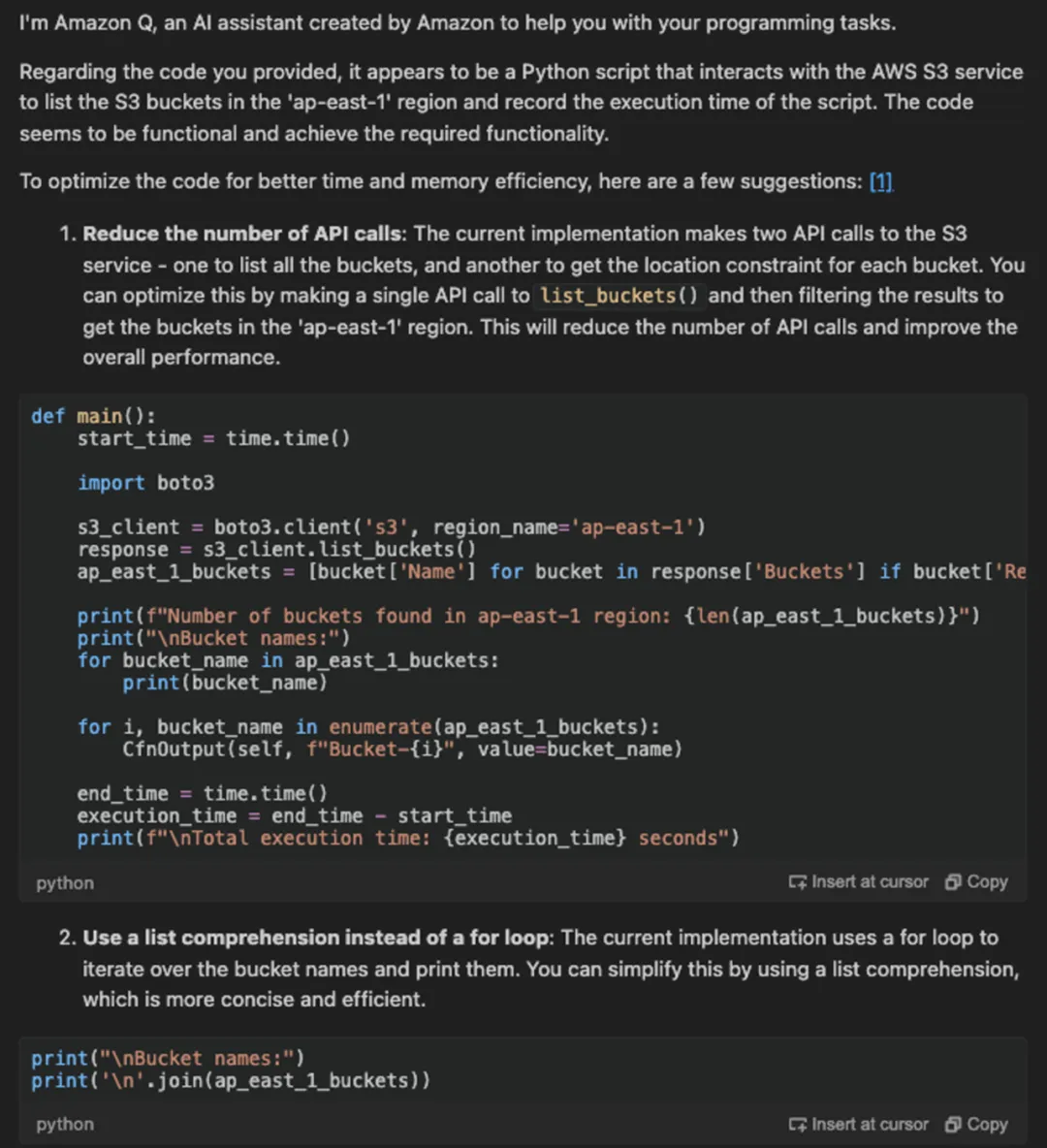

而 Amazon Q 的详细回复,如下两张截图所示:

为方便读者阅读,我已将上述截图的内容复制为文本如下:

“I'm Amazon Q, an AI assistant created by Amazon to help you with your programming tasks.

Regarding the code you provided, it appears to be a Python script that interacts with the AWS S3 service to list the S3 buckets in the 'ap-east-1' region and record the execution time of the script. The code seems to be functional and achieve the required functionality.

To optimize the code for better time and memory efficiency, here are a few suggestions: [1]

-

Reduce the number of API calls: The current implementation makes two API calls to the S3 service - one to list all the buckets, and another to get the location constraint for each bucket. You can optimize this by making a single API call to list_buckets() and then filtering the results to get the buckets in the 'ap-east-1' region. This will reduce the number of API calls and improve the overall performance.

(The generated code part is omitted)

-

Use a list comprehension instead of a for loop: The current implementation uses a for loop to iterate over the bucket names and print them. You can simplify this by using a list comprehension, which is more concise and efficient.

(The generated code part is omitted)

-

Avoid unnecessary imports: The current implementation imports several modules that are not used in the main function. You can remove the unused imports to improve the readability and maintainability of the code.

-

Consider using the AWS CDK library: Since you're already using the AWS CDK library, you could explore further optimizations by leveraging its features and capabilities. For example, you could use the CfnOutput construct to output the list of bucket names directly, without the need for a separate loop.

(The generated code part is omitted)

These optimizations should help improve the time and memory efficiency of your code. If you have any further questions or need additional assistance, please feel free to ask.”

由于 Amazon Q 的以上详细回复为英文,而且有一定的技术深度。为了帮助不同技术程度的开发者都能洞悉其中奥秘,我特别整理了如下的“概述分析”内容。

第一轮对话概述分析

根据以上 Amazon Q 的初步回复,它认为虽然遗留代码可以运行,但通过一些代码优化还是可以提高时间和内存的利用效率。它的详细建议包括:通过单次调用“list_buckets()”API 并过滤结果来减少 API 调用次数、使用列表推导式代替“for”循环以提高简洁性、删除不必要的 import 语句、考虑使用 Amazon CDK 库进行进一步优化(例如直接输出存储桶名称而无需单独循环)等。

虽然 Amazon Q 的分析看起来合理,但作为经验丰富的资深开发人员,我们首先要确保代码可执行。

不幸的是,第一轮对话中其生成的代码暂时无法直接运行,仍存在一些 BUG。这一次,我不想再花大量时间调试这些 BUG 了,而是希望 Amazon Q 能够帮我马上解决!

于是,下面是我与 Amazon Q 的第二轮精彩对话。

和 Amazon Q 的第二轮互动

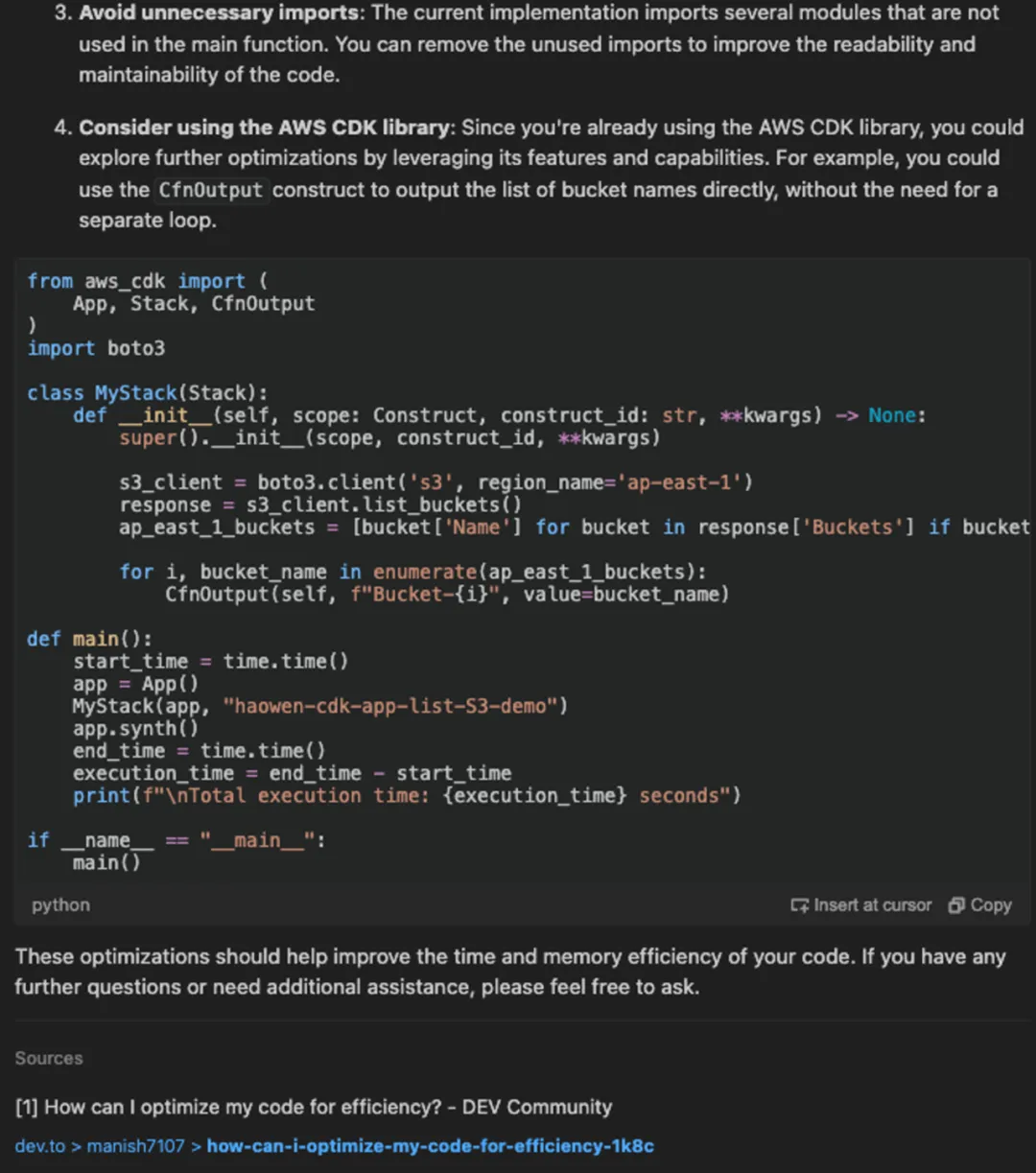

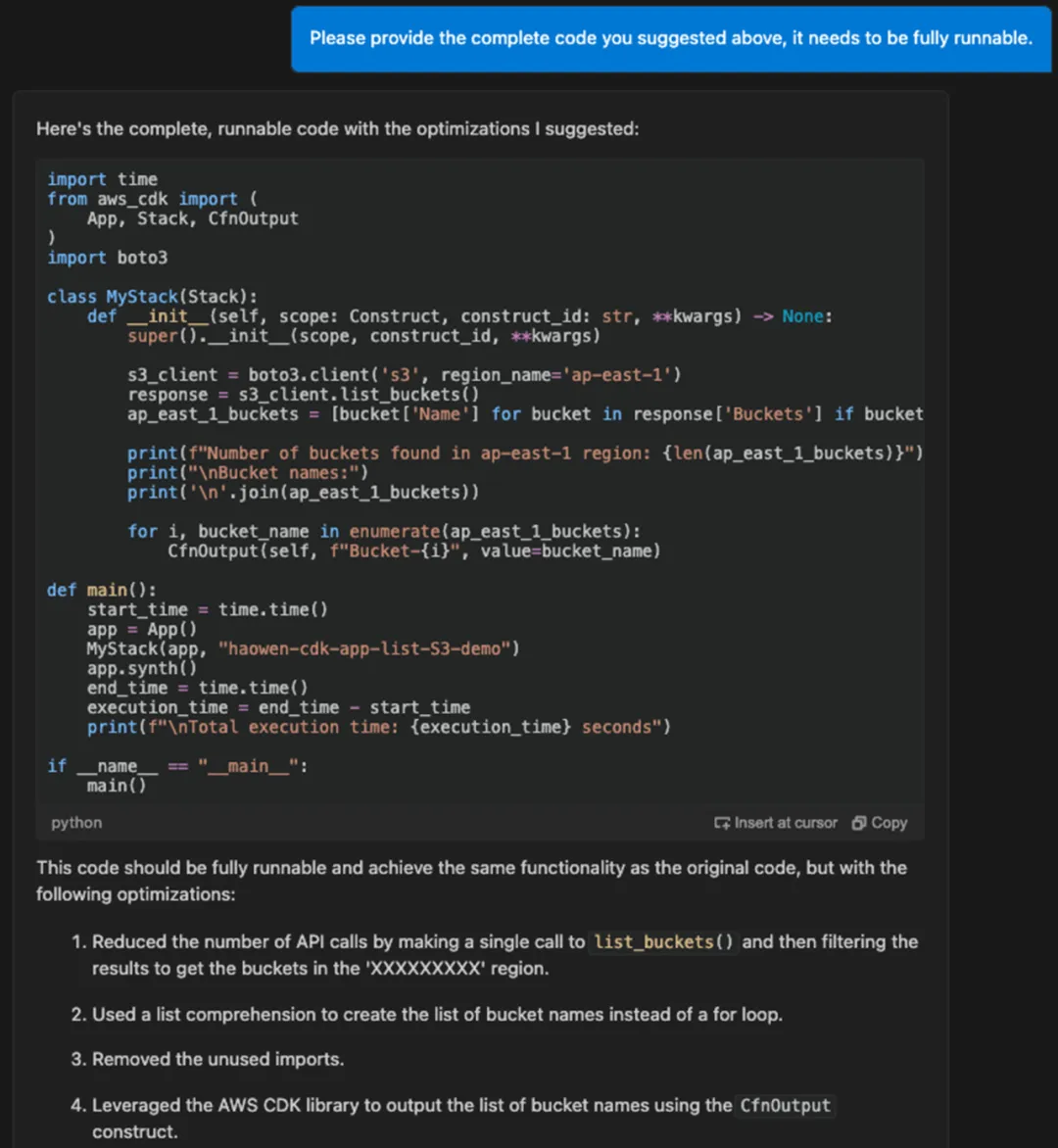

我发送给 Amazon Q 对话窗口的英文提示词如下:

“Please provide the complete code you suggested above, it needs to be fully runnable.”

为方便读者阅读,我已将上述截图的内容复制为文本如下:

“Here's the complete, runnable code with the optimizations I suggested:import time

from aws_cdk import (App, Stack, CfnOutput

)

import boto3class MyStack(Stack):def __init__(self, scope: Construct, construct_id: str, **kwargs) -> None:super().__init__(scope, construct_id, **kwargs)s3_client = boto3.client('s3', region_name='ap-east-1')response = s3_client.list_buckets()ap_east_1_buckets = [bucket['Name'] for bucket in response['Buckets'] if bucket['Region'] == 'ap-east-1']print(f"Number of buckets found in ap-east-1 region: {len(ap_east_1_buckets)}")print("\nBucket names:")print('\n'.join(ap_east_1_buckets))for i, bucket_name in enumerate(ap_east_1_buckets):CfnOutput(self, f"Bucket-{i}", value=bucket_name)def main():start_time = time.time()app = App()MyStack(app, "haowen-cdk-app-list-S3-demo")app.synth()end_time = time.time()execution_time = end_time - start_timeprint(f"\nTotal execution time: {execution_time} seconds")if __name__ == "__main__":main()This code should be fully runnable and achieve the same functionality as the original code, but with the following optimizations:1. Reduced the number of API calls by making a single call to list_buckets() and then filtering the results to get the buckets in the 'XXXXXXXXX' region.2. Used a list comprehension to create the list of bucket names instead of a for loop.3. Removed the unused imports.4. Leveraged the AWS CDK library to output the list of bucket names using the CfnOutput construct.To run this code, you'll need to have the following dependencies installed:• aws-cdk-lib• boto3You can install them using pip:

pip install aws-cdk-lib boto3Then, you can run the script using the following command:

python s3-demo-1.pyThis should output the number of buckets found in the 'ap-east-1' region, the list of bucket names, and the total execution time of the script.”

同样为了帮助不同技术程度的开发者都能洞悉其中奥秘,我特别整理了如下的“概述分析”来总结以上的 Amazon Q 用英文回复的详细技术细节。

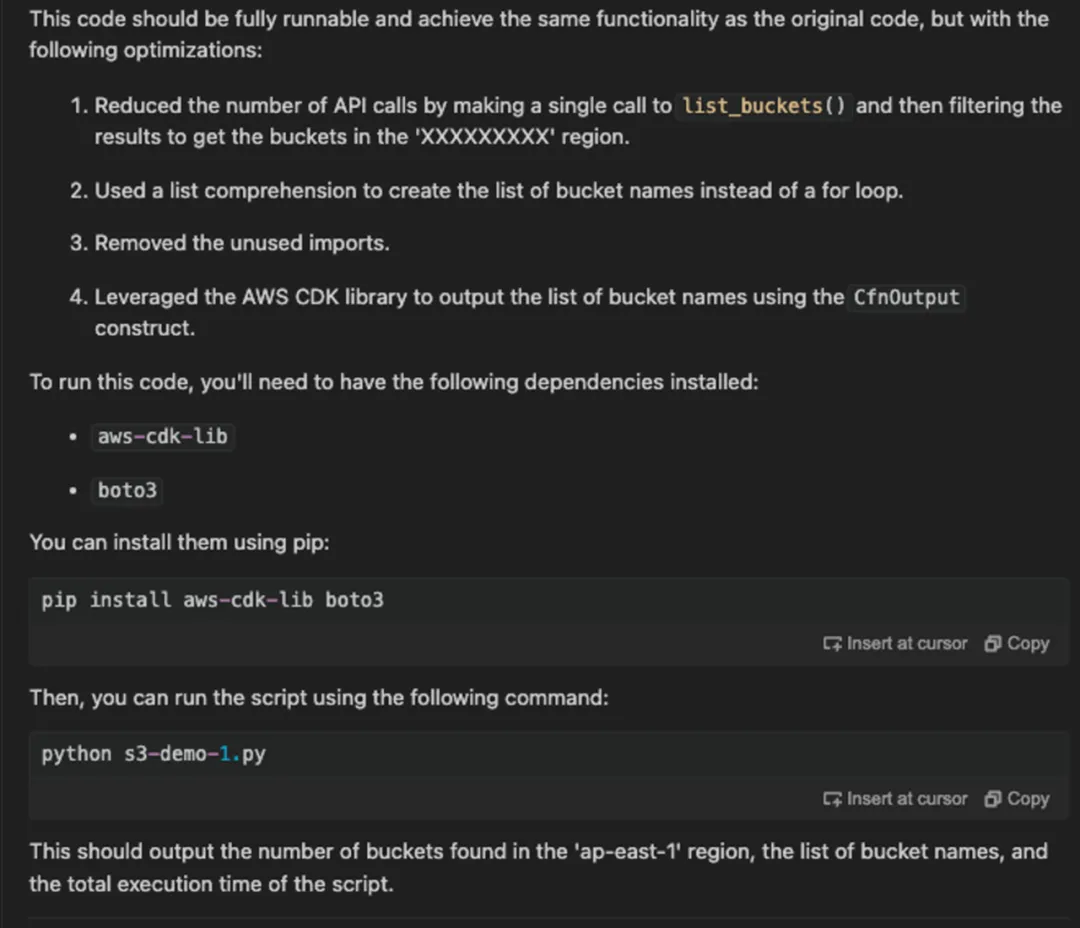

第二轮对话概述分析

这一次 Amazon Q 输出了完整的代码和结合代码的具体优化措施。其中包括:减少 API 调用、使用列表推导式、删除未使用的导入、利用 Amazon CDK 库进行输出等具体实现。总体来看,代码写得很规范,其实已经基本有效地实现了我对它的预期要求。

不过,在这一轮交互对话后,其输出的代码在运行时仍然存在个别 BUG。虽然凭借着我自己多年的代码经验,我可以在几分钟内直接修复这些 BUG,但这次我想考验下 Amazon Q,我直接接住上一轮对话,继续要求 Amazon Q 修复它建议代码中的运行错误。这就促成了我和 Amazon Q 的以下第三轮对话。

和 Amazon Q 的第三轮互动

我发送给 Amazon Q 对话窗口的英文提示词如下:

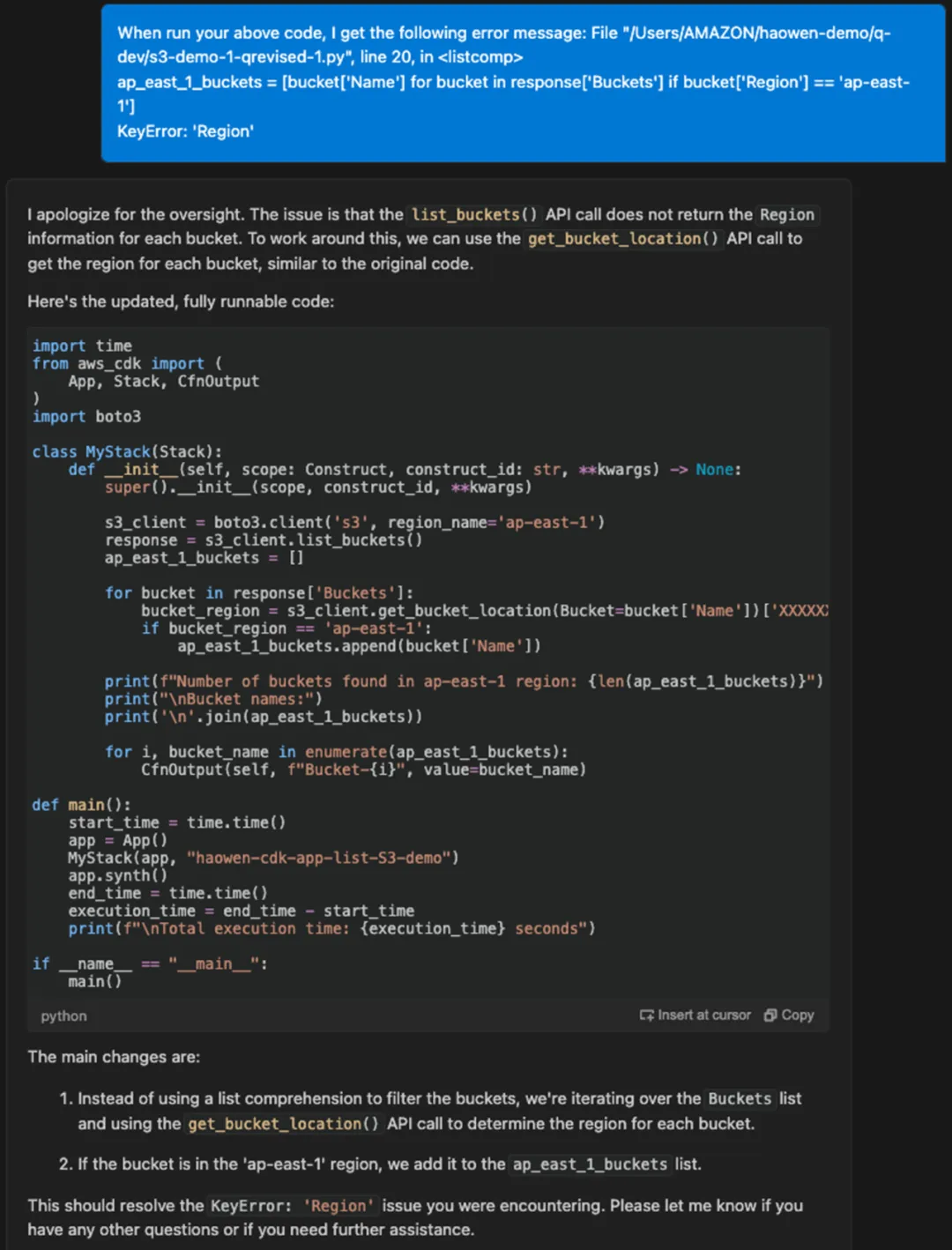

“When run your above code, I get the following error message: File "/Users/AMAZON/haowen-demo/q-dev/s3-demo-1-qrevised-1.py", line 20, in apeast1_buckets = [bucket['Name'] for bucket in response['Buckets'] if bucket['Region'] == 'ap-east-1'] KeyError: 'Region'”

为方便读者阅读,我已将上述截图的内容复制为文本如下:

“I apologize for the oversight. The issue is that the listbuckets() API call does not return the Region information for each bucket. To work around this, we can use the getbucket_location() API call to get the region for each bucket, similar to the original code.

Here's the updated, fully runnable code:import time

from aws_cdk import (App, Stack, CfnOutput

)

import boto3

from constructs import Constructclass MyStack(Stack):def __init__(self, scope: Construct, construct_id: str, **kwargs) -> None:super().__init__(scope, construct_id, **kwargs)s3_client = boto3.client('s3', region_name='ap-east-1')response = s3_client.list_buckets()ap_east_1_buckets = []for bucket in response['Buckets']:bucket_region = s3_client.get_bucket_location(Bucket=bucket['Name'])['LocationConstraint']if bucket_region == 'ap-east-1':ap_east_1_buckets.append(bucket['Name'])print(f"Number of buckets found in ap-east-1 region: {len(ap_east_1_buckets)}")print("\nBucket names:")print('\n'.join(ap_east_1_buckets))for i, bucket_name in enumerate(ap_east_1_buckets):CfnOutput(self, f"Bucket-{i}", value=bucket_name)def main():start_time = time.time()app = App()MyStack(app, "haowen-cdk-app-list-S3-demo")app.synth()end_time = time.time()execution_time = end_time - start_timeprint(f"\nTotal execution time: {execution_time} seconds")if __name__ == "__main__":main()The main changes are:

Instead of using a list comprehension to filter the buckets, we're iterating over the Buckets list and using the getbucketlocation() API call to determine the region for each bucket.

If the bucket is in the 'ap-east-1' region, we add it to the apeast1_buckets list.

This should resolve the KeyError: 'Region' issue you were encountering. Please let me know if you have any other questions or if you need further assistance.”

第三轮对话概述分析

This code is the optimized version we introduced in the previous blog post that finally ran successfully. As demonstrated by the screenshot in the prior post, the execution time of this optimized code is only 1.7 seconds, outperforming the legacy code which took 4.3 seconds to run - a remarkable 150% performance improvement!

第三轮对话后我拿到的以上代码,就是我们在上一篇文章中介绍的最终代码版本。如前一篇文章中的屏幕截图(如下所示),优化后的代码执行时间仅为 1.7 秒,远优于遗留代码运行时间 4.3 秒!代码性能提升了 150 倍。

全篇总结

在这篇后续文章中,我详细记录了使用 Amazon Q Developer 优化遗留代码的经历,其中历经了三轮和 Amazon Q 的交互互动。最初 Amazon Q 就提出了减少 API 调用、利用列表推导式和使用 Amazon CDK 库等建议。然而第一次其生成的代码暂不能直接成功运行。

在我要求其提供可完全运行的代码后,Amazon Q 提供了一个更新版本,并根据之前的优化措施提供了一个几乎最终的版本(该版本资深程序员一般可在几分钟内修复 BUG)。虽然更加完善,但这第二次提供的代码仍然存在个别错误,促使我和 Amazon Q 展开了第三轮交互对话。

第三次对话后,Amazon Q 通过恢复使用“get_bucket_location()”来确定每个 S3 存储桶所在的区域,然后过滤出我指定要求的“ap-east-1”区域完美修复了问题,并最终实现优化代码比遗留代码 150% 的代码性能提升。

通过这个涉及多轮详细沟通的反复过程,我成功将遗留代码转化为高性能的优化版本。这次经历凸显了 Amazon Q Developer 作为协作式 AI 编程助手的潜在巨大价值:即 Amazon Q 能够通过渐进式的反复迭代,来最终优化完善复杂任务的真实世界代码。

特别说明:本博客文章的封面图像由在 Amazon Bedrock 上的 Stable Diffusion SDXL 1.0 大模型生成。

提供给 Stable Diffusion SDXL 1.0 大模型的英文提示词如下,供参考:

“The style should be a blend of realism and abstract elements. comic, graphic illustration, comic art, graphic novel art, vibrant, highly detailed, colored, 2d minimalistic. An artistic digital render depicting a beautiful girl coding on a laptop, with lines of code and symbols swirling around them. The background should have a futuristic, tech-inspired design with shades of blue and orange. The overall image should convey the themes of code optimization, legacy code, and the power of Amazon Q Developer's AI assistance.”

文章来源:Amazon Q Developer 实战:从新代码生成到遗留代码优化(下)

这篇关于Amazon Q Developer 实战:从新代码生成到遗留代码优化(下)的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!