This article explains how to fix the ImagePullBackOff and ErrImagePull errors that keep appearing when you install OpenEBS.

Fixing the ImagePullBackOff / ErrImagePull errors that appear when installing OpenEBS by following the official KubeSphere documentation

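There are many articles about switching Helm to a different source (chart repository). Some of them recommend the Alibaba Cloud source, but that source has never been updated with the OpenEBS chart.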

I found one usable source online:

stable: http://mirror.azure.cn/kubernetes/charts/

Sources that do not work:

http://mirror.azure.cn/kubernetes/charts-incubator/

https://kubernetes-charts.storage.googleapis.com/ (access denied)

https://registry.cn-hangzhou.aliyuncs.com/ (unreachable)

https://quay.io/ (blocked)

Running helm repo add … against an unusable source fails with an error like this:

[root@k8snode1 etcd]# helm repo add incubator http://mirror.azure.cn/kubernetes/charts-incubator/

WARNING: Deprecated index file format. Try 'helm repo update'

Error: Looks like "http://mirror.azure.cn/kubernetes/charts-incubator/" is not a valid chart repository or cannot be reached: no API version specified
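A quick way to vet a candidate source before running helm repo add is to look at its index.yaml. As far as I can tell, Helm reports "no API version specified" when that file has no top-level apiVersion field, so the sketch below checks only for that one field. The function name index_has_api_version and the /tmp path are placeholders of mine, not part of Helm.

```shell
# index_has_api_version FILE
# Exits 0 when a downloaded index.yaml has a top-level "apiVersion:" field,
# non-zero otherwise. This is only a rough pre-check, not a full validation.
index_has_api_version() {
  grep -qE '^apiVersion:' "$1"
}

# Hypothetical usage (needs curl and network access):
#   curl -fsSL http://mirror.azure.cn/kubernetes/charts/index.yaml -o /tmp/index.yaml
#   if index_has_api_version /tmp/index.yaml; then
#     echo "index looks usable"
#   else
#     echo "index has no apiVersion; helm repo add will probably fail"
#   fi
```

A source that passes this check can still be out of date, so it is worth running helm search afterwards to confirm the chart version you need is listed.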

So switch Helm's stable source to the working one above, http://mirror.azure.cn/kubernetes/charts/

# Remove the old source first; skip this step if you never added one
helm repo remove stable

# Add the new source
helm repo add stable http://mirror.azure.cn/kubernetes/charts/

# Update the sources
helm repo update

Now install OpenEBS (the --name flag below is Helm 2 syntax):

helm install --namespace openebs --name openebs stable/openebs --version 1.5.0

Running kubectl get pods --all-namespaces shows that the openebs pods keep failing with ImagePullBackOff or ErrImagePull.

kubectl get pods --all-namespaces

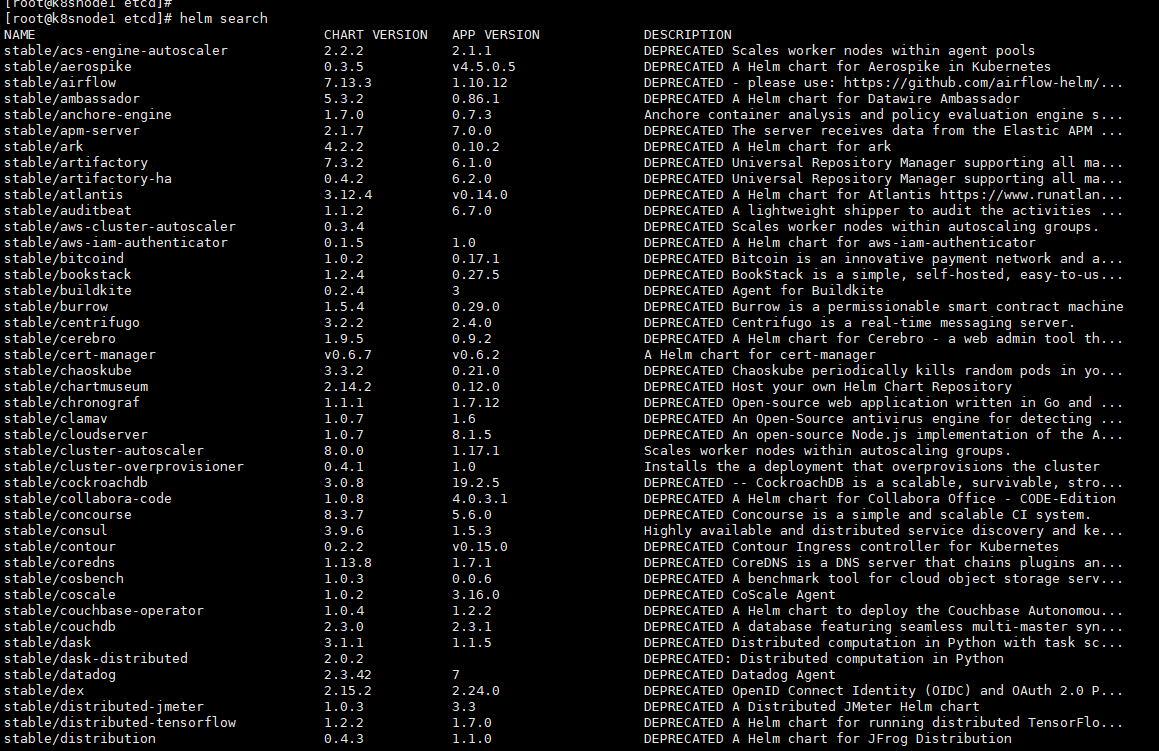
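On a busy cluster the failing pods are easier to spot if the listing is filtered by status. The helper below is a minimal sketch; filter_pull_errors is a name I made up, and it simply greps whatever text it is given on stdin.

```shell
# filter_pull_errors
# Reads `kubectl get pods` output on stdin and keeps only the rows that
# mention ImagePullBackOff or ErrImagePull.
filter_pull_errors() {
  grep -E 'ImagePullBackOff|ErrImagePull'
}

# Usage against a live cluster:
#   kubectl get pods --all-namespaces | filter_pull_errors
#   # The Events section at the end of `describe` names the image that failed:
#   kubectl describe pod <pod-name> -n openebs
```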

Running helm search shows that the source has no openebs 1.5.0 entry.

helm search

So adding the new source did not help, and we need another way to get the images onto the local machine.

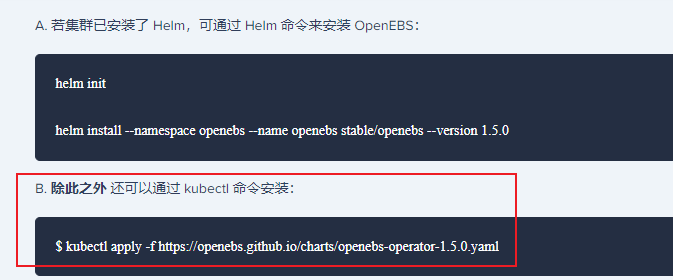

The official documentation gives two ways to install OpenEBS:

We start by downloading the file openebs-operator-1.5.0.yaml from https://openebs.github.io/charts/openebs-operator-1.5.0.yaml.

Note: the link https://openebs.github.io/charts/openebs-operator-1.5.0.yaml is no longer valid and cannot be opened.

Contents of openebs-operator-1.5.0.yaml:

# This manifest deploys the OpenEBS control plane components, with associated CRs & RBAC rules
# NOTE: On GKE, deploy the openebs-operator.yaml in admin context

# Create the OpenEBS namespace
apiVersion: v1
kind: Namespace
metadata:
  name: openebs

---

# Create Maya Service Account
apiVersion: v1
kind: ServiceAccount
metadata:
  name: openebs-maya-operator
  namespace: openebs

---

# Define Role that allows operations on K8s pods/deployments
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: openebs-maya-operator
rules:
- apiGroups: ["*"]
  resources: ["nodes", "nodes/proxy"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["namespaces", "services", "pods", "pods/exec", "deployments", "deployments/finalizers", "replicationcontrollers", "replicasets", "events", "endpoints", "configmaps", "secrets", "jobs", "cronjobs"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["statefulsets", "daemonsets"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["resourcequotas", "limitranges"]
  verbs: ["list", "watch"]
- apiGroups: ["*"]
  resources: ["ingresses", "horizontalpodautoscalers", "verticalpodautoscalers", "poddisruptionbudgets", "certificatesigningrequests"]
  verbs: ["list", "watch"]
- apiGroups: ["*"]
  resources: ["storageclasses", "persistentvolumeclaims", "persistentvolumes"]
  verbs: ["*"]
- apiGroups: ["volumesnapshot.external-storage.k8s.io"]
  resources: ["volumesnapshots", "volumesnapshotdatas"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
- apiGroups: ["apiextensions.k8s.io"]
  resources: ["customresourcedefinitions"]
  verbs: ["get", "list", "create", "update", "delete", "patch"]
- apiGroups: ["*"]
  resources: ["disks", "blockdevices", "blockdeviceclaims"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorpoolclusters", "storagepoolclaims", "storagepoolclaims/finalizers", "cstorpoolclusters/finalizers", "storagepools"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["castemplates", "runtasks"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorpools", "cstorpools/finalizers", "cstorvolumereplicas", "cstorvolumes", "cstorvolumeclaims"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorpoolinstances", "cstorpoolinstances/finalizers"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorbackups", "cstorrestores", "cstorcompletedbackups"]
  verbs: ["*"]
- apiGroups: ["coordination.k8s.io"]
  resources: ["leases"]
  verbs: ["get", "watch", "list", "delete", "update", "create"]
- apiGroups: ["admissionregistration.k8s.io"]
  resources: ["validatingwebhookconfigurations", "mutatingwebhookconfigurations"]
  verbs: ["get", "create", "list", "delete", "update", "patch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
- apiGroups: ["*"]
  resources: ["upgradetasks"]
  verbs: ["*"]

---

# Bind the Service Account with the Role Privileges.
# TODO: Check if default account also needs to be there
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: openebs-maya-operator
subjects:
- kind: ServiceAccount
  name: openebs-maya-operator
  namespace: openebs
roleRef:
  kind: ClusterRole
  name: openebs-maya-operator
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: maya-apiserver
  namespace: openebs
  labels:
    name: maya-apiserver
    openebs.io/component-name: maya-apiserver
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: maya-apiserver
      openebs.io/component-name: maya-apiserver
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  template:
    metadata:
      labels:
        name: maya-apiserver
        openebs.io/component-name: maya-apiserver
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: maya-apiserver
        imagePullPolicy: IfNotPresent
        image: quay.io/openebs/m-apiserver:1.5.0
        ports:
        - containerPort: 5656
        env:
        # OPENEBS_IO_KUBE_CONFIG enables maya api service to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for maya api server version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        # OPENEBS_IO_K8S_MASTER enables maya api service to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for maya api server version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://172.28.128.3:8080"
        # OPENEBS_NAMESPACE provides the namespace of this deployment as an
        # environment variable
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_SERVICE_ACCOUNT provides the service account of this pod as
        # environment variable
        - name: OPENEBS_SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        # OPENEBS_MAYA_POD_NAME provides the name of this pod as
        # environment variable
        - name: OPENEBS_MAYA_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # If OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG is false then OpenEBS default
        # storageclass and storagepool will not be created.
        - name: OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
          value: "true"
        # OPENEBS_IO_INSTALL_DEFAULT_CSTOR_SPARSE_POOL decides whether default cstor sparse pool should be
        # configured as a part of openebs installation.
        # If "true" a default cstor sparse pool will be configured, if "false" it will not be configured.
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        - name: OPENEBS_IO_INSTALL_DEFAULT_CSTOR_SPARSE_POOL
          value: "false"
        # OPENEBS_IO_CSTOR_TARGET_DIR can be used to specify the hostpath
        # to be used for saving the shared content between the side cars
        # of cstor volume pod.
        # The default path used is /var/openebs/sparse
        #- name: OPENEBS_IO_CSTOR_TARGET_DIR
        #  value: "/var/openebs/sparse"
        # OPENEBS_IO_CSTOR_POOL_SPARSE_DIR can be used to specify the hostpath
        # to be used for saving the shared content between the side cars
        # of cstor pool pod. This ENV is also used to indicate the location
        # of the sparse devices.
        # The default path used is /var/openebs/sparse
        #- name: OPENEBS_IO_CSTOR_POOL_SPARSE_DIR
        #  value: "/var/openebs/sparse"
        # OPENEBS_IO_JIVA_POOL_DIR can be used to specify the hostpath
        # to be used for default Jiva StoragePool loaded by OpenEBS
        # The default path used is /var/openebs
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        #- name: OPENEBS_IO_JIVA_POOL_DIR
        #  value: "/var/openebs"
        # OPENEBS_IO_LOCALPV_HOSTPATH_DIR can be used to specify the hostpath
        # to be used for default openebs-hostpath storageclass loaded by OpenEBS
        # The default path used is /var/openebs/local
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        #- name: OPENEBS_IO_LOCALPV_HOSTPATH_DIR
        #  value: "/var/openebs/local"
        - name: OPENEBS_IO_JIVA_CONTROLLER_IMAGE
          value: "quay.io/openebs/jiva:1.5.0"
        - name: OPENEBS_IO_JIVA_REPLICA_IMAGE
          value: "quay.io/openebs/jiva:1.5.0"
        - name: OPENEBS_IO_JIVA_REPLICA_COUNT
          value: "3"
        - name: OPENEBS_IO_CSTOR_TARGET_IMAGE
          value: "quay.io/openebs/cstor-istgt:1.5.0"
        - name: OPENEBS_IO_CSTOR_POOL_IMAGE
          value: "quay.io/openebs/cstor-pool:1.5.0"
        - name: OPENEBS_IO_CSTOR_POOL_MGMT_IMAGE
          value: "quay.io/openebs/cstor-pool-mgmt:1.5.0"
        - name: OPENEBS_IO_CSTOR_VOLUME_MGMT_IMAGE
          value: "quay.io/openebs/cstor-volume-mgmt:1.5.0"
        - name: OPENEBS_IO_VOLUME_MONITOR_IMAGE
          value: "quay.io/openebs/m-exporter:1.5.0"
        - name: OPENEBS_IO_CSTOR_POOL_EXPORTER_IMAGE
          value: "quay.io/openebs/m-exporter:1.5.0"
        - name: OPENEBS_IO_HELPER_IMAGE
          value: "quay.io/openebs/linux-utils:1.5.0"
        # OPENEBS_IO_ENABLE_ANALYTICS if set to true sends anonymous usage
        # events to Google Analytics
        - name: OPENEBS_IO_ENABLE_ANALYTICS
          value: "true"
        - name: OPENEBS_IO_INSTALLER_TYPE
          value: "openebs-operator"
        # OPENEBS_IO_ANALYTICS_PING_INTERVAL can be used to specify the duration (in hours)
        # for periodic ping events sent to Google Analytics.
        # Default is 24h.
        # Minimum is 1h. You can convert this to weekly by setting 168h
        #- name: OPENEBS_IO_ANALYTICS_PING_INTERVAL
        #  value: "24h"
        livenessProbe:
          exec:
            command:
            - /usr/local/bin/mayactl
            - version
          initialDelaySeconds: 30
          periodSeconds: 60
        readinessProbe:
          exec:
            command:
            - /usr/local/bin/mayactl
            - version
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: v1
kind: Service
metadata:
  name: maya-apiserver-service
  namespace: openebs
  labels:
    openebs.io/component-name: maya-apiserver-svc
spec:
  ports:
  - name: api
    port: 5656
    protocol: TCP
    targetPort: 5656
  selector:
    name: maya-apiserver
  sessionAffinity: None

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-provisioner
  namespace: openebs
  labels:
    name: openebs-provisioner
    openebs.io/component-name: openebs-provisioner
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-provisioner
      openebs.io/component-name: openebs-provisioner
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  template:
    metadata:
      labels:
        name: openebs-provisioner
        openebs.io/component-name: openebs-provisioner
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: openebs-provisioner
        imagePullPolicy: IfNotPresent
        image: quay.io/openebs/openebs-k8s-provisioner:1.5.0
        env:
        # OPENEBS_IO_K8S_MASTER enables openebs provisioner to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://10.128.0.12:8080"
        # OPENEBS_IO_KUBE_CONFIG enables openebs provisioner to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that provisioner should forward the volume create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*openebs"
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-snapshot-operator
  namespace: openebs
  labels:
    name: openebs-snapshot-operator
    openebs.io/component-name: openebs-snapshot-operator
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-snapshot-operator
      openebs.io/component-name: openebs-snapshot-operator
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-snapshot-operator
        openebs.io/component-name: openebs-snapshot-operator
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: snapshot-controller
        image: quay.io/openebs/snapshot-controller:1.5.0
        imagePullPolicy: IfNotPresent
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*controller"
          initialDelaySeconds: 30
          periodSeconds: 60
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that snapshot controller should forward the snapshot create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
      - name: snapshot-provisioner
        image: quay.io/openebs/snapshot-provisioner:1.5.0
        imagePullPolicy: IfNotPresent
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that snapshot provisioner should forward the clone create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*provisioner"
          initialDelaySeconds: 30
          periodSeconds: 60

---

# This is the node-disk-manager related config.
# It can be used to customize the disks probes and filters
apiVersion: v1
kind: ConfigMap
metadata:
  name: openebs-ndm-config
  namespace: openebs
  labels:
    openebs.io/component-name: ndm-config
data:
  # udev-probe is default or primary probe which should be enabled to run ndm
  # filterconfigs contails configs of filters - in their form fo include
  # and exclude comma separated strings
  node-disk-manager.config: |
    probeconfigs:
      - key: udev-probe
        name: udev probe
        state: true
      - key: seachest-probe
        name: seachest probe
        state: false
      - key: smart-probe
        name: smart probe
        state: true
    filterconfigs:
      - key: os-disk-exclude-filter
        name: os disk exclude filter
        state: true
        exclude: "/,/etc/hosts,/boot"
      - key: vendor-filter
        name: vendor filter
        state: true
        include: ""
        exclude: "CLOUDBYT,OpenEBS"
      - key: path-filter
        name: path filter
        state: true
        include: ""
        exclude: "loop,/dev/fd0,/dev/sr0,/dev/ram,/dev/dm-,/dev/md"

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: openebs-ndm
  namespace: openebs
  labels:
    name: openebs-ndm
    openebs.io/component-name: ndm
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-ndm
      openebs.io/component-name: ndm
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        name: openebs-ndm
        openebs.io/component-name: ndm
        openebs.io/version: 1.5.0
    spec:
      # By default the node-disk-manager will be run on all kubernetes nodes
      # If you would like to limit this to only some nodes, say the nodes
      # that have storage attached, you could label those node and use
      # nodeSelector.
      #
      # e.g. label the storage nodes with - "openebs.io/nodegroup"="storage-node"
      # kubectl label node <node-name> "openebs.io/nodegroup"="storage-node"
      #nodeSelector:
      #  "openebs.io/nodegroup": "storage-node"
      serviceAccountName: openebs-maya-operator
      hostNetwork: true
      containers:
      - name: node-disk-manager
        image: quay.io/openebs/node-disk-manager-amd64:v0.4.5
        imagePullPolicy: Always
        securityContext:
          privileged: true
        volumeMounts:
        - name: config
          mountPath: /host/node-disk-manager.config
          subPath: node-disk-manager.config
          readOnly: true
        - name: udev
          mountPath: /run/udev
        - name: procmount
          mountPath: /host/proc
          readOnly: true
        - name: sparsepath
          mountPath: /var/openebs/sparse
        env:
        # namespace in which NDM is installed will be passed to NDM Daemonset
        # as environment variable
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # pass hostname as env variable using downward API to the NDM container
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        # specify the directory where the sparse files need to be created.
        # if not specified, then sparse files will not be created.
        - name: SPARSE_FILE_DIR
          value: "/var/openebs/sparse"
        # Size(bytes) of the sparse file to be created.
        - name: SPARSE_FILE_SIZE
          value: "10737418240"
        # Specify the number of sparse files to be created
        - name: SPARSE_FILE_COUNT
          value: "0"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*ndm"
          initialDelaySeconds: 30
          periodSeconds: 60
      volumes:
      - name: config
        configMap:
          name: openebs-ndm-config
      - name: udev
        hostPath:
          path: /run/udev
          type: Directory
      # mount /proc (to access mount file of process 1 of host) inside container
      # to read mount-point of disks and partitions
      - name: procmount
        hostPath:
          path: /proc
          type: Directory
      - name: sparsepath
        hostPath:
          path: /var/openebs/sparse

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-ndm-operator
  namespace: openebs
  labels:
    name: openebs-ndm-operator
    openebs.io/component-name: ndm-operator
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-ndm-operator
      openebs.io/component-name: ndm-operator
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-ndm-operator
        openebs.io/component-name: ndm-operator
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: node-disk-operator
        image: quay.io/openebs/node-disk-operator-amd64:v0.4.5
        imagePullPolicy: Always
        readinessProbe:
          exec:
            command:
            - stat
            - /tmp/operator-sdk-ready
          initialDelaySeconds: 4
          periodSeconds: 10
          failureThreshold: 1
        env:
        - name: WATCH_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # the service account of the ndm-operator pod
        - name: SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        - name: OPERATOR_NAME
          value: "node-disk-operator"
        - name: CLEANUP_JOB_IMAGE
          value: "quay.io/openebs/linux-utils:1.5.0"

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-admission-server
  namespace: openebs
  labels:
    app: admission-webhook
    openebs.io/component-name: admission-webhook
    openebs.io/version: 1.5.0
spec:
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  selector:
    matchLabels:
      app: admission-webhook
  template:
    metadata:
      labels:
        app: admission-webhook
        openebs.io/component-name: admission-webhook
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: admission-webhook
        image: quay.io/openebs/admission-server:1.5.0
        imagePullPolicy: IfNotPresent
        args:
        - -alsologtostderr
        - -v=2
        - 2>&1
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: ADMISSION_WEBHOOK_NAME
          value: "openebs-admission-server"

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-localpv-provisioner
  namespace: openebs
  labels:
    name: openebs-localpv-provisioner
    openebs.io/component-name: openebs-localpv-provisioner
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-localpv-provisioner
      openebs.io/component-name: openebs-localpv-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-localpv-provisioner
        openebs.io/component-name: openebs-localpv-provisioner
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: openebs-provisioner-hostpath
        imagePullPolicy: Always
        image: quay.io/openebs/provisioner-localpv:1.5.0
        env:
        # OPENEBS_IO_K8S_MASTER enables openebs provisioner to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://10.128.0.12:8080"
        # OPENEBS_IO_KUBE_CONFIG enables openebs provisioner to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_SERVICE_ACCOUNT provides the service account of this pod as
        # environment variable
        - name: OPENEBS_SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        - name: OPENEBS_IO_ENABLE_ANALYTICS
          value: "true"
        - name: OPENEBS_IO_INSTALLER_TYPE
          value: "openebs-operator"
        - name: OPENEBS_IO_HELPER_IMAGE
          value: "quay.io/openebs/linux-utils:1.5.0"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*localpv"
          initialDelaySeconds: 30
          periodSeconds: 60

---

Looking at the file above, we can see that it pulls all of these openebs images from quay.io. That site is blocked, so the pulls fail.

containers:
- name: maya-apiserver
  imagePullPolicy: IfNotPresent
  image: quay.io/openebs/m-apiserver:1.5.0
  ports:
  - containerPort: 5656

containers:
- name: openebs-provisioner
  imagePullPolicy: IfNotPresent
  image: quay.io/openebs/openebs-k8s-provisioner:1.5.0

containers:
- name: snapshot-controller
  image: quay.io/openebs/snapshot-controller:1.5.0
  imagePullPolicy: IfNotPresent

containers:
- name: node-disk-manager
  image: quay.io/openebs/node-disk-manager-amd64:v0.4.5
  imagePullPolicy: Always

containers:
- name: node-disk-operator
  image: quay.io/openebs/node-disk-operator-amd64:v0.4.5
  imagePullPolicy: Always

containers:
- name: admission-webhook
  image: quay.io/openebs/admission-server:1.5.0
  imagePullPolicy: IfNotPresent

containers:
- name: openebs-provisioner-hostpath
  imagePullPolicy: Always
  image: quay.io/openebs/provisioner-localpv:1.5.0
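Rather than reading the manifest by eye, the image references can be pulled out with grep. This is a rough sketch: list_quay_images is a made-up helper name, and the pattern assumes every reference has the form quay.io/<path>:<tag>. Note that it also reports images that appear only as env values (jiva, cstor-pool and so on) as well as the second container of the snapshot operator, which are easy to overlook.

```shell
# list_quay_images FILE
# Prints every distinct quay.io image reference (name:tag) found in FILE.
list_quay_images() {
  grep -oE 'quay\.io/[A-Za-z0-9._/-]+:[A-Za-z0-9._-]+' "$1" | sort -u
}

# Usage:
#   list_quay_images openebs-operator-1.5.0.yaml
```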

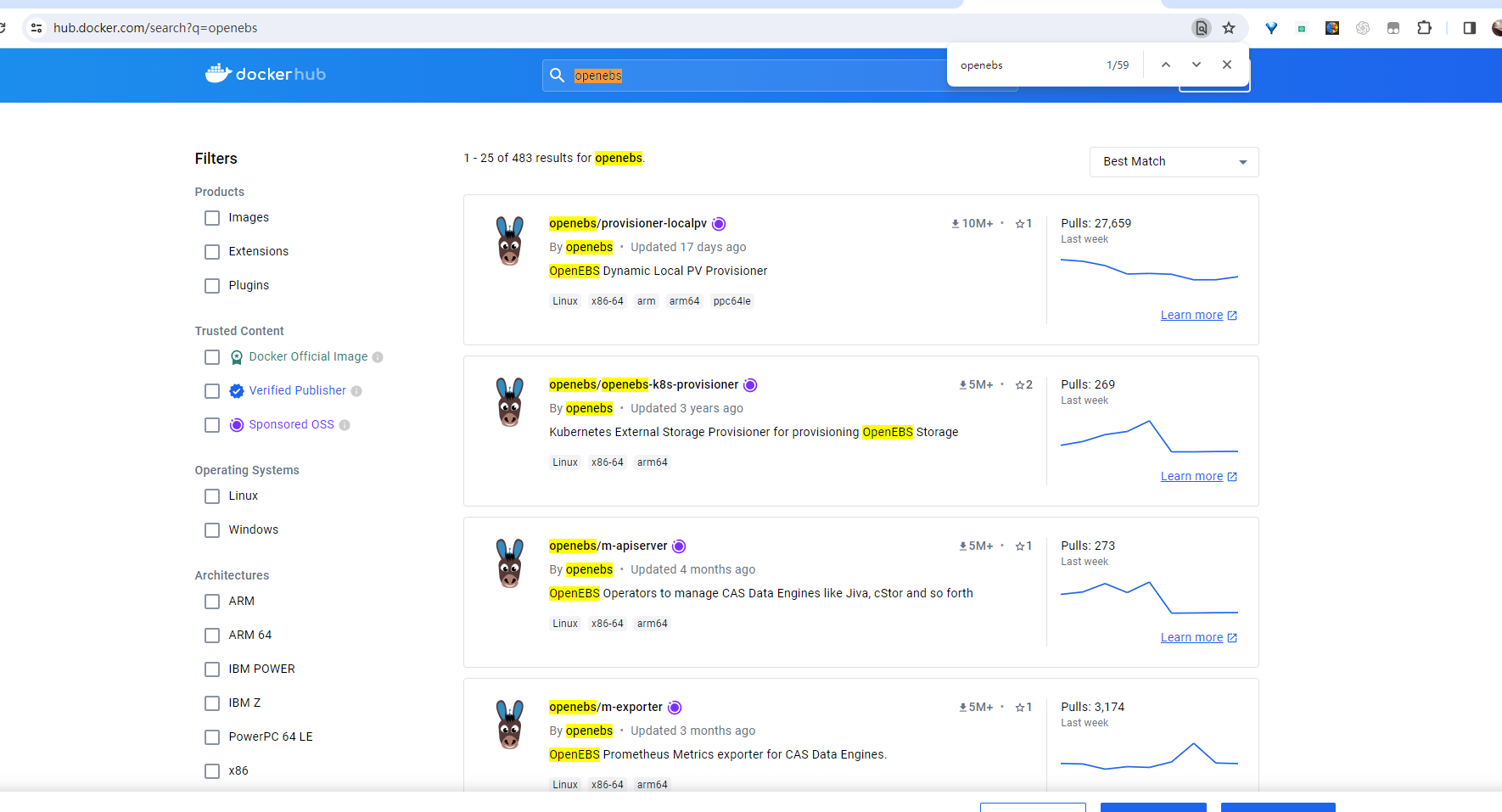

So we go to Docker Hub to check whether the openebs images are available there. They are.

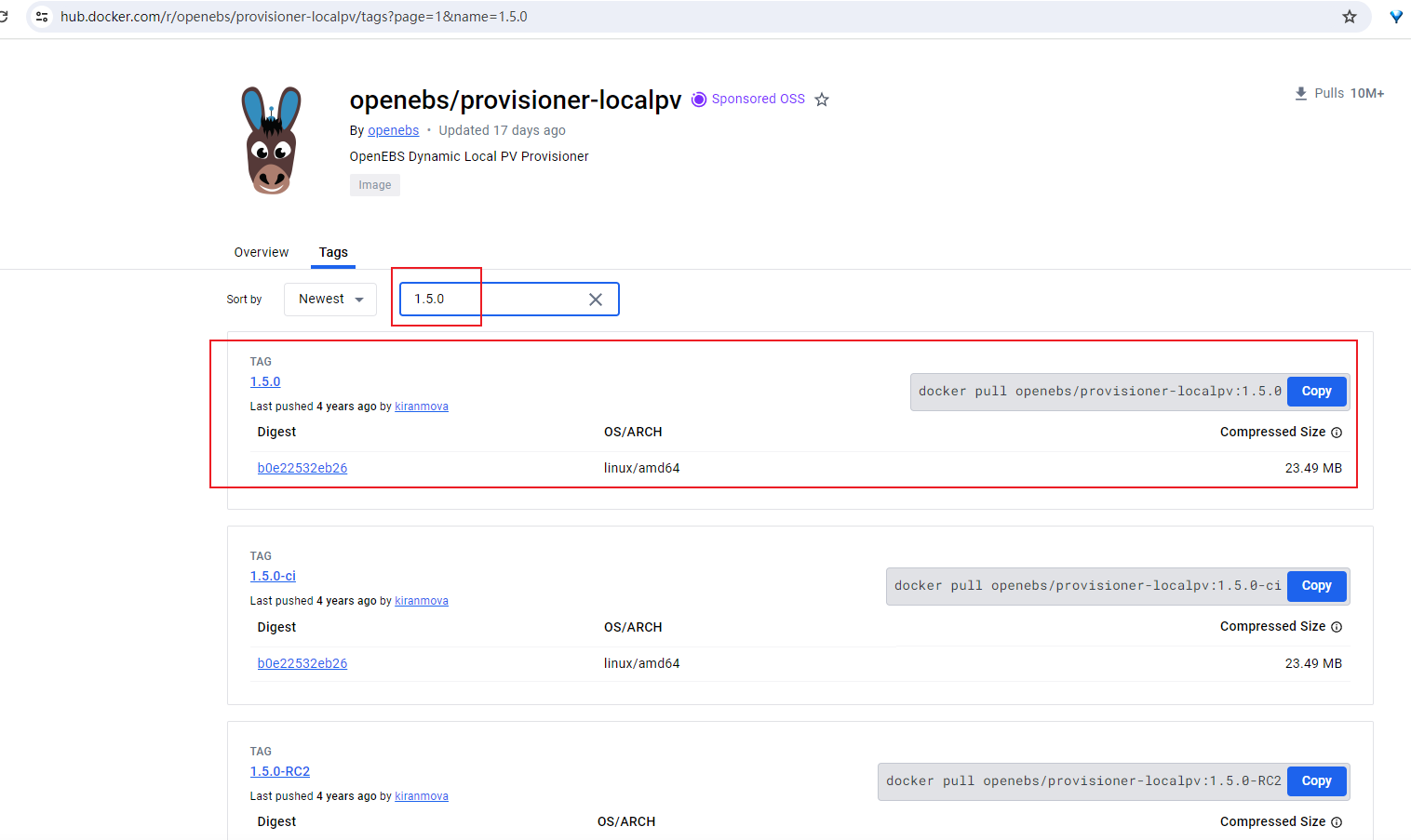

Then we open one of them to see whether it has a 1.5.0 tag. It does.

So we use docker pull to fetch every image that openebs-operator-1.5.0.yaml needs. Copy the commands below as they are; if any pull fails, check Docker Hub to see whether the corresponding image exists.

docker pull openebs/m-apiserver:1.5.0
docker pull openebs/openebs-k8s-provisioner:1.5.0
docker pull openebs/snapshot-controller:1.5.0
docker pull openebs/snapshot-provisioner:1.5.0
docker pull openebs/node-disk-manager-amd64:v0.4.5
docker pull openebs/node-disk-operator-amd64:v0.4.5
docker pull openebs/admission-server:1.5.0
docker pull openebs/provisioner-localpv:1.5.0
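If you prefer to generate the pull commands instead of typing them, a small filter can turn quay.io references into Docker Hub pulls. This is a sketch under the assumption, taken from the steps above, that each image is published on Docker Hub under the same openebs/<name>:<tag>; pull_commands is a hypothetical helper name.

```shell
# pull_commands
# Reads image references on stdin (one per line) and prints one
# `docker pull` command per line, dropping a leading "quay.io/" so the
# name resolves to Docker Hub.
pull_commands() {
  sed -e 's#^quay\.io/##' -e 's#^#docker pull #'
}

# Usage: print the commands, or run them and report any that fail.
#   printf '%s\n' quay.io/openebs/m-apiserver:1.5.0 | pull_commands
#   printf '%s\n' quay.io/openebs/m-apiserver:1.5.0 | pull_commands |
#     while read -r cmd; do $cmd || echo "FAILED: $cmd"; done
```

The images have to be present on every node that may run the pods, so on a multi-node cluster the pulls need to be repeated on each node.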

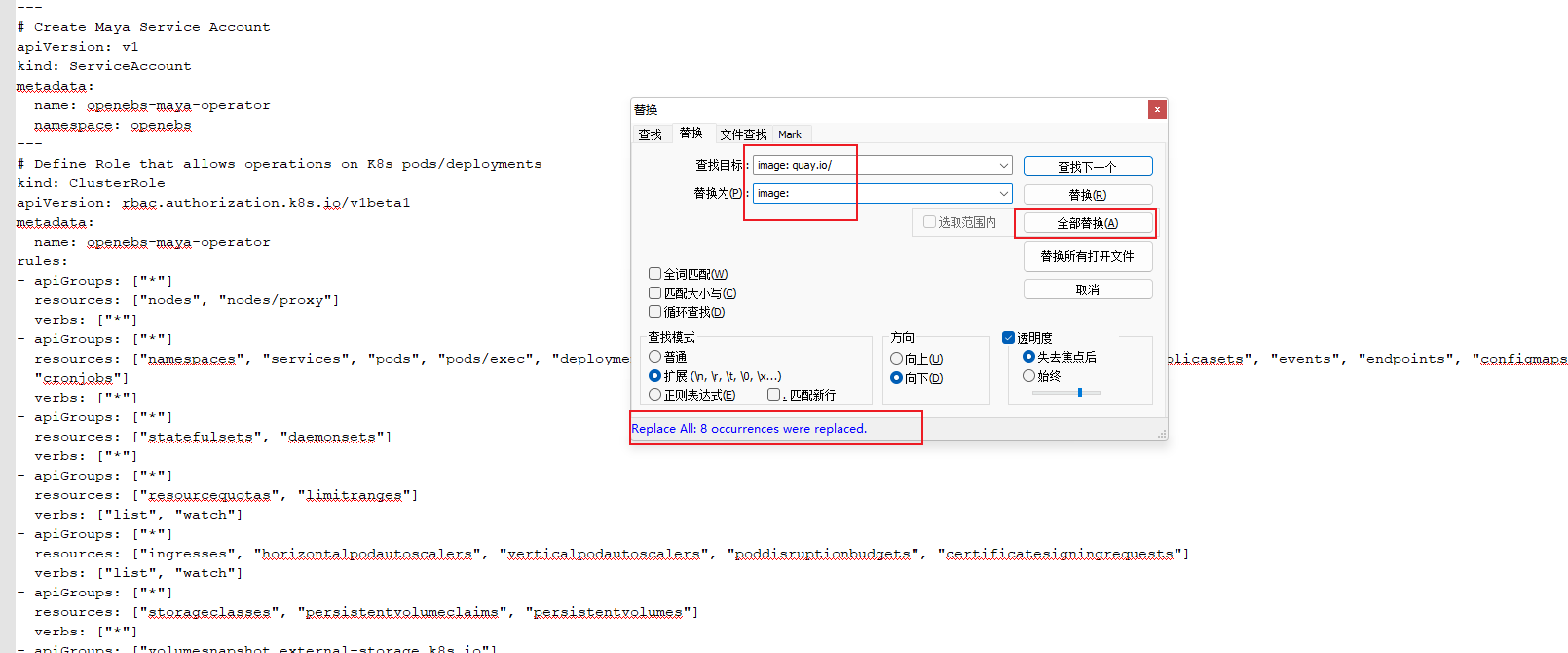

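Once all of the images above have been pulled, edit openebs-operator-1.5.0.yaml and replace every occurrence of image: quay.io/ with image: (that is, drop the quay.io/ prefix) so that the locally pulled images are used. Containers with imagePullPolicy: IfNotPresent then start from the local copy, while those set to Always still contact the registry, which is now Docker Hub rather than quay.io.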
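The replacement can be done in one pass with sed. A minimal sketch, with use_dockerhub_images as a made-up function name; it keeps a .bak copy of the original file and only touches image: lines, so image names passed as env values (for example the jiva and cstor images) keep their quay.io prefix.

```shell
# use_dockerhub_images FILE
# Rewrites "image: quay.io/..." to "image: ..." in place and leaves the
# original content in FILE.bak.
use_dockerhub_images() {
  sed -i.bak 's#image: quay\.io/#image: #g' "$1"
}

# Usage:
#   use_dockerhub_images openebs-operator-1.5.0.yaml
#   grep -n 'image:' openebs-operator-1.5.0.yaml   # review the result
```

After reviewing the result, the edited manifest would presumably be applied with kubectl apply -f openebs-operator-1.5.0.yaml.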

Contents of openebs-operator-1.5.0.yaml after the change:

# This manifest deploys the OpenEBS control plane components, with associated CRs & RBAC rules
# NOTE: On GKE, deploy the openebs-operator.yaml in admin context

# Create the OpenEBS namespace
apiVersion: v1
kind: Namespace
metadata:
  name: openebs

---

# Create Maya Service Account
apiVersion: v1
kind: ServiceAccount
metadata:
  name: openebs-maya-operator
  namespace: openebs

---

# Define Role that allows operations on K8s pods/deployments
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: openebs-maya-operator
rules:
- apiGroups: ["*"]
  resources: ["nodes", "nodes/proxy"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["namespaces", "services", "pods", "pods/exec", "deployments", "deployments/finalizers", "replicationcontrollers", "replicasets", "events", "endpoints", "configmaps", "secrets", "jobs", "cronjobs"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["statefulsets", "daemonsets"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["resourcequotas", "limitranges"]
  verbs: ["list", "watch"]
- apiGroups: ["*"]
  resources: ["ingresses", "horizontalpodautoscalers", "verticalpodautoscalers", "poddisruptionbudgets", "certificatesigningrequests"]
  verbs: ["list", "watch"]
- apiGroups: ["*"]
  resources: ["storageclasses", "persistentvolumeclaims", "persistentvolumes"]
  verbs: ["*"]
- apiGroups: ["volumesnapshot.external-storage.k8s.io"]
  resources: ["volumesnapshots", "volumesnapshotdatas"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
- apiGroups: ["apiextensions.k8s.io"]
  resources: ["customresourcedefinitions"]
  verbs: ["get", "list", "create", "update", "delete", "patch"]
- apiGroups: ["*"]
  resources: ["disks", "blockdevices", "blockdeviceclaims"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorpoolclusters", "storagepoolclaims", "storagepoolclaims/finalizers", "cstorpoolclusters/finalizers", "storagepools"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["castemplates", "runtasks"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorpools", "cstorpools/finalizers", "cstorvolumereplicas", "cstorvolumes", "cstorvolumeclaims"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorpoolinstances", "cstorpoolinstances/finalizers"]
  verbs: ["*"]
- apiGroups: ["*"]
  resources: ["cstorbackups", "cstorrestores", "cstorcompletedbackups"]
  verbs: ["*"]
- apiGroups: ["coordination.k8s.io"]
  resources: ["leases"]
  verbs: ["get", "watch", "list", "delete", "update", "create"]
- apiGroups: ["admissionregistration.k8s.io"]
  resources: ["validatingwebhookconfigurations", "mutatingwebhookconfigurations"]
  verbs: ["get", "create", "list", "delete", "update", "patch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
- apiGroups: ["*"]
  resources: ["upgradetasks"]
  verbs: ["*"]

---

# Bind the Service Account with the Role Privileges.
# TODO: Check if default account also needs to be there
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: openebs-maya-operator
subjects:
- kind: ServiceAccount
  name: openebs-maya-operator
  namespace: openebs
roleRef:
  kind: ClusterRole
  name: openebs-maya-operator
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: maya-apiserver
  namespace: openebs
  labels:
    name: maya-apiserver
    openebs.io/component-name: maya-apiserver
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: maya-apiserver
      openebs.io/component-name: maya-apiserver
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  template:
    metadata:
      labels:
        name: maya-apiserver
        openebs.io/component-name: maya-apiserver
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: maya-apiserver
        imagePullPolicy: IfNotPresent
        image: openebs/m-apiserver:1.5.0
        ports:
        - containerPort: 5656
        env:
        # OPENEBS_IO_KUBE_CONFIG enables maya api service to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for maya api server version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        # OPENEBS_IO_K8S_MASTER enables maya api service to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for maya api server version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://172.28.128.3:8080"
        # OPENEBS_NAMESPACE provides the namespace of this deployment as an
        # environment variable
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_SERVICE_ACCOUNT provides the service account of this pod as
        # environment variable
        - name: OPENEBS_SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        # OPENEBS_MAYA_POD_NAME provides the name of this pod as
        # environment variable
        - name: OPENEBS_MAYA_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # If OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG is false then OpenEBS default
        # storageclass and storagepool will not be created.
        - name: OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
          value: "true"
        # OPENEBS_IO_INSTALL_DEFAULT_CSTOR_SPARSE_POOL decides whether default cstor sparse pool should be
        # configured as a part of openebs installation.
        # If "true" a default cstor sparse pool will be configured, if "false" it will not be configured.
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        - name: OPENEBS_IO_INSTALL_DEFAULT_CSTOR_SPARSE_POOL
          value: "false"
        # OPENEBS_IO_CSTOR_TARGET_DIR can be used to specify the hostpath
        # to be used for saving the shared content between the side cars
        # of cstor volume pod.
        # The default path used is /var/openebs/sparse
        #- name: OPENEBS_IO_CSTOR_TARGET_DIR
        #  value: "/var/openebs/sparse"
        # OPENEBS_IO_CSTOR_POOL_SPARSE_DIR can be used to specify the hostpath
        # to be used for saving the shared content between the side cars
        # of cstor pool pod. This ENV is also used to indicate the location
        # of the sparse devices.
        # The default path used is /var/openebs/sparse
        #- name: OPENEBS_IO_CSTOR_POOL_SPARSE_DIR
        #  value: "/var/openebs/sparse"
        # OPENEBS_IO_JIVA_POOL_DIR can be used to specify the hostpath
        # to be used for default Jiva StoragePool loaded by OpenEBS
        # The default path used is /var/openebs
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        #- name: OPENEBS_IO_JIVA_POOL_DIR
        #  value: "/var/openebs"
        # OPENEBS_IO_LOCALPV_HOSTPATH_DIR can be used to specify the hostpath
        # to be used for default openebs-hostpath storageclass loaded by OpenEBS
        # The default path used is /var/openebs/local
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        #- name: OPENEBS_IO_LOCALPV_HOSTPATH_DIR
        #  value: "/var/openebs/local"
        - name: OPENEBS_IO_JIVA_CONTROLLER_IMAGE
          value: "quay.io/openebs/jiva:1.5.0"
        - name: OPENEBS_IO_JIVA_REPLICA_IMAGE
          value: "quay.io/openebs/jiva:1.5.0"
        - name: OPENEBS_IO_JIVA_REPLICA_COUNT
          value: "3"
        - name: OPENEBS_IO_CSTOR_TARGET_IMAGE
          value: "quay.io/openebs/cstor-istgt:1.5.0"
        - name: OPENEBS_IO_CSTOR_POOL_IMAGE
          value: "quay.io/openebs/cstor-pool:1.5.0"
        - name: OPENEBS_IO_CSTOR_POOL_MGMT_IMAGE
          value: "quay.io/openebs/cstor-pool-mgmt:1.5.0"
        - name: OPENEBS_IO_CSTOR_VOLUME_MGMT_IMAGE
          value: "quay.io/openebs/cstor-volume-mgmt:1.5.0"
        - name: OPENEBS_IO_VOLUME_MONITOR_IMAGE
          value: "quay.io/openebs/m-exporter:1.5.0"
        - name: OPENEBS_IO_CSTOR_POOL_EXPORTER_IMAGE
          value: "quay.io/openebs/m-exporter:1.5.0"
        - name: OPENEBS_IO_HELPER_IMAGE
          value: "quay.io/openebs/linux-utils:1.5.0"
        # OPENEBS_IO_ENABLE_ANALYTICS if set to true sends anonymous usage
        # events to Google Analytics
        - name: OPENEBS_IO_ENABLE_ANALYTICS
          value: "true"
        - name: OPENEBS_IO_INSTALLER_TYPE
          value: "openebs-operator"
        # OPENEBS_IO_ANALYTICS_PING_INTERVAL can be used to specify the duration (in hours)
        # for periodic ping events sent to Google Analytics.
        # Default is 24h.
        # Minimum is 1h. You can convert this to weekly by setting 168h
        #- name: OPENEBS_IO_ANALYTICS_PING_INTERVAL
        #  value: "24h"
        livenessProbe:
          exec:
            command:
            - /usr/local/bin/mayactl
            - version
          initialDelaySeconds: 30
          periodSeconds: 60
        readinessProbe:
          exec:
            command:
            - /usr/local/bin/mayactl
            - version
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: v1
kind: Service
metadata:
  name: maya-apiserver-service
  namespace: openebs
  labels:
    openebs.io/component-name: maya-apiserver-svc
spec:
  ports:
  - name: api
    port: 5656
    protocol: TCP
    targetPort: 5656
  selector:
    name: maya-apiserver
  sessionAffinity: None

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-provisioner
  namespace: openebs
  labels:
    name: openebs-provisioner
    openebs.io/component-name: openebs-provisioner
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-provisioner
      openebs.io/component-name: openebs-provisioner
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  template:
    metadata:
      labels:
        name: openebs-provisioner
        openebs.io/component-name: openebs-provisioner
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: openebs-provisioner
        imagePullPolicy: IfNotPresent
        image: openebs/openebs-k8s-provisioner:1.5.0
        env:
        # OPENEBS_IO_K8S_MASTER enables openebs provisioner to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://10.128.0.12:8080"
        # OPENEBS_IO_KUBE_CONFIG enables openebs provisioner to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that provisioner should forward the volume create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*openebs"
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-snapshot-operator
  namespace: openebs
  labels:
    name: openebs-snapshot-operator
    openebs.io/component-name: openebs-snapshot-operator
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-snapshot-operator
      openebs.io/component-name: openebs-snapshot-operator
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-snapshot-operator
        openebs.io/component-name: openebs-snapshot-operator
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: snapshot-controller
        image: openebs/snapshot-controller:1.5.0
        imagePullPolicy: IfNotPresent
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*controller"
          initialDelaySeconds: 30
          periodSeconds: 60
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that snapshot controller should forward the snapshot create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
      - name: snapshot-provisioner
        image: openebs/snapshot-provisioner:1.5.0
        imagePullPolicy: IfNotPresent
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that snapshot provisioner should forward the clone create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*provisioner"
          initialDelaySeconds: 30
          periodSeconds: 60

---

# This is the node-disk-manager related config.

# It can be used to customize the disks probes and filters

apiVersion: v1
kind: ConfigMap
metadata:
  name: openebs-ndm-config
  namespace: openebs
  labels:
    openebs.io/component-name: ndm-config
data:
  # udev-probe is default or primary probe which should be enabled to run ndm
  # filterconfigs contails configs of filters - in their form fo include
  # and exclude comma separated strings
  node-disk-manager.config: |
    probeconfigs:
      - key: udev-probe
        name: udev probe
        state: true
      - key: seachest-probe
        name: seachest probe
        state: false
      - key: smart-probe
        name: smart probe
        state: true
    filterconfigs:
      - key: os-disk-exclude-filter
        name: os disk exclude filter
        state: true
        exclude: "/,/etc/hosts,/boot"
      - key: vendor-filter
        name: vendor filter
        state: true
        include: ""
        exclude: "CLOUDBYT,OpenEBS"
      - key: path-filter
        name: path filter
        state: true
        include: ""
        exclude: "loop,/dev/fd0,/dev/sr0,/dev/ram,/dev/dm-,/dev/md"

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: openebs-ndm
  namespace: openebs
  labels:
    name: openebs-ndm
    openebs.io/component-name: ndm
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-ndm
      openebs.io/component-name: ndm
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        name: openebs-ndm
        openebs.io/component-name: ndm
        openebs.io/version: 1.5.0
    spec:
      # By default the node-disk-manager will be run on all kubernetes nodes
      # If you would like to limit this to only some nodes, say the nodes
      # that have storage attached, you could label those node and use
      # nodeSelector.
      #
      # e.g. label the storage nodes with - "openebs.io/nodegroup"="storage-node"
      # kubectl label node <node-name> "openebs.io/nodegroup"="storage-node"
      #nodeSelector:
      #  "openebs.io/nodegroup": "storage-node"
      serviceAccountName: openebs-maya-operator
      hostNetwork: true
      containers:
      - name: node-disk-manager
        image: openebs/node-disk-manager-amd64:v0.4.5
        imagePullPolicy: Always
        securityContext:
          privileged: true
        volumeMounts:
        - name: config
          mountPath: /host/node-disk-manager.config
          subPath: node-disk-manager.config
          readOnly: true
        - name: udev
          mountPath: /run/udev
        - name: procmount
          mountPath: /host/proc
          readOnly: true
        - name: sparsepath
          mountPath: /var/openebs/sparse
        env:
        # namespace in which NDM is installed will be passed to NDM Daemonset
        # as environment variable
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # pass hostname as env variable using downward API to the NDM container
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        # specify the directory where the sparse files need to be created.
        # if not specified, then sparse files will not be created.
        - name: SPARSE_FILE_DIR
          value: "/var/openebs/sparse"
        # Size(bytes) of the sparse file to be created.
        - name: SPARSE_FILE_SIZE
          value: "10737418240"
        # Specify the number of sparse files to be created
        - name: SPARSE_FILE_COUNT
          value: "0"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*ndm"
          initialDelaySeconds: 30
          periodSeconds: 60
      volumes:
      - name: config
        configMap:
          name: openebs-ndm-config
      - name: udev
        hostPath:
          path: /run/udev
          type: Directory
      # mount /proc (to access mount file of process 1 of host) inside container
      # to read mount-point of disks and partitions
      - name: procmount
        hostPath:
          path: /proc
          type: Directory
      - name: sparsepath
        hostPath:
          path: /var/openebs/sparse

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-ndm-operator
  namespace: openebs
  labels:
    name: openebs-ndm-operator
    openebs.io/component-name: ndm-operator
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-ndm-operator
      openebs.io/component-name: ndm-operator
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-ndm-operator
        openebs.io/component-name: ndm-operator
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: node-disk-operator
        image: openebs/node-disk-operator-amd64:v0.4.5
        imagePullPolicy: Always
        readinessProbe:
          exec:
            command:
            - stat
            - /tmp/operator-sdk-ready
          initialDelaySeconds: 4
          periodSeconds: 10
          failureThreshold: 1
        env:
        - name: WATCH_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # the service account of the ndm-operator pod
        - name: SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        - name: OPERATOR_NAME
          value: "node-disk-operator"
        - name: CLEANUP_JOB_IMAGE
          value: "quay.io/openebs/linux-utils:1.5.0"

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-admission-server
  namespace: openebs
  labels:
    app: admission-webhook
    openebs.io/component-name: admission-webhook
    openebs.io/version: 1.5.0
spec:
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  selector:
    matchLabels:
      app: admission-webhook
  template:
    metadata:
      labels:
        app: admission-webhook
        openebs.io/component-name: admission-webhook
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: admission-webhook
        image: openebs/admission-server:1.5.0
        imagePullPolicy: IfNotPresent
        args:
        - -alsologtostderr
        - -v=2
        - 2>&1
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: ADMISSION_WEBHOOK_NAME
          value: "openebs-admission-server"

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-localpv-provisioner
  namespace: openebs
  labels:
    name: openebs-localpv-provisioner
    openebs.io/component-name: openebs-localpv-provisioner
    openebs.io/version: 1.5.0
spec:
  selector:
    matchLabels:
      name: openebs-localpv-provisioner
      openebs.io/component-name: openebs-localpv-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-localpv-provisioner
        openebs.io/component-name: openebs-localpv-provisioner
        openebs.io/version: 1.5.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: openebs-provisioner-hostpath
        imagePullPolicy: Always
        image: openebs/provisioner-localpv:1.5.0
        env:
        # OPENEBS_IO_K8S_MASTER enables openebs provisioner to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://10.128.0.12:8080"
        # OPENEBS_IO_KUBE_CONFIG enables openebs provisioner to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_SERVICE_ACCOUNT provides the service account of this pod as
        # environment variable
        - name: OPENEBS_SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        - name: OPENEBS_IO_ENABLE_ANALYTICS
          value: "true"
        - name: OPENEBS_IO_INSTALLER_TYPE
          value: "openebs-operator"
        - name: OPENEBS_IO_HELPER_IMAGE
          value: "quay.io/openebs/linux-utils:1.5.0"
        livenessProbe:
          exec:
            command:
            - pgrep
            - ".*localpv"
          initialDelaySeconds: 30
          periodSeconds: 60

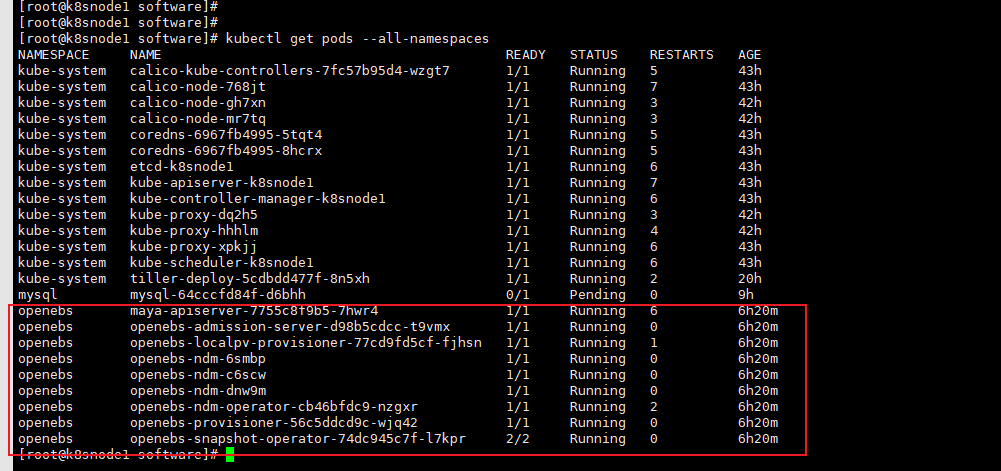

After completing the steps above, install OpenEBS with the kubectl apply command.

[root@k8snode1 software]# kubectl apply -f openebs-operator-1.5.0.yaml
namespace/openebs created
serviceaccount/openebs-maya-operator created
clusterrole.rbac.authorization.k8s.io/openebs-maya-operator created
clusterrolebinding.rbac.authorization.k8s.io/openebs-maya-operator created
deployment.apps/maya-apiserver created
service/maya-apiserver-service created
deployment.apps/openebs-provisioner created
deployment.apps/openebs-snapshot-operator created
configmap/openebs-ndm-config created
daemonset.apps/openebs-ndm created
deployment.apps/openebs-ndm-operator created
deployment.apps/openebs-admission-server created
deployment.apps/openebs-localpv-provisioner created

However, checking with kubectl get pods --all-namespaces shows that the pods still fail with ImagePullBackOff or ErrImagePull.
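To narrow down which pods are affected, the listing can be filtered by its STATUS column. The following is a minimal sketch; the function name filter_pull_errors is hypothetical, and it assumes the default column layout of kubectl get pods --all-namespaces (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE).

```shell
#!/bin/sh
# Keep the header line plus every pod whose STATUS (4th column) reports an
# image pull failure. Reads `kubectl get pods --all-namespaces` output on stdin.
filter_pull_errors() {
  awk 'NR == 1 || $4 ~ /ImagePullBackOff|ErrImagePull/'
}

# Usage on a real cluster (not executed by this snippet):
#   kubectl get pods --all-namespaces | filter_pull_errors
#   kubectl -n openebs describe pod <pod-name>
```

The Events section at the end of the kubectl describe pod output normally names the image that failed to pull and the node it was scheduled on.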

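For the cause, see the article 《k8s所在的节点docker有镜像 为什么还要从远程拉取》 ("Why does Kubernetes still pull from the remote registry when the node's Docker already has the image?").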
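One factor that can produce this behaviour is the pull policy. The manifest above sets imagePullPolicy: Always on the node-disk-manager, node-disk-operator, and openebs-provisioner-hostpath containers, and with Always the kubelet contacts the registry every time it starts the container, so a locally cached image does not help when the registry is unreachable. The sketch below rewrites that policy to IfNotPresent; the function name relax_pull_policy and the output file name are assumptions, not part of the original procedure.

```shell
#!/bin/sh
# Print the manifest given as $1 with every `imagePullPolicy: Always`
# replaced by `imagePullPolicy: IfNotPresent`. The input file is not modified.
relax_pull_policy() {
  sed 's/imagePullPolicy:[[:space:]]*Always/imagePullPolicy: IfNotPresent/' "$1"
}

# Usage (not executed by this snippet):
#   relax_pull_policy openebs-operator-1.5.0.yaml > openebs-operator-local.yaml
#   kubectl apply -f openebs-operator-local.yaml
```

With IfNotPresent, an image that already exists on the node is used as-is, which is why the images still have to be present on every node where a pod can be scheduled.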

In my case, I had pulled these images only on the master node and not on the worker nodes. Pulling the same images again on each worker node fixed the problem.
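Pulling the images node by node is easier with a list taken from the manifest itself. The following is a rough sketch under a few assumptions: the function names list_images and pull_images are hypothetical, the manifest is the properly indented file as downloaded, and Docker is the container runtime on each node.

```shell
#!/bin/sh
# Print the unique image references found in `image:` fields of the
# manifest given as $1 (lines such as `image: openebs/admission-server:1.5.0`).
list_images() {
  sed -n 's/^[[:space:]]*\(- \)\{0,1\}image:[[:space:]]*//p' "$1" | tr -d '"' | sort -u
}

# Pull every listed image with Docker. Run this on each node.
pull_images() {
  list_images "$1" | while read -r img; do
    docker pull "$img" || echo "failed to pull $img" >&2
  done
}

# Usage (not executed by this snippet):
#   pull_images openebs-operator-1.5.0.yaml
```

Images that the manifest passes through environment variables, such as quay.io/openebs/linux-utils:1.5.0, are not `image:` fields and are therefore not matched; they would need to be pulled separately.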

Here is another approach:

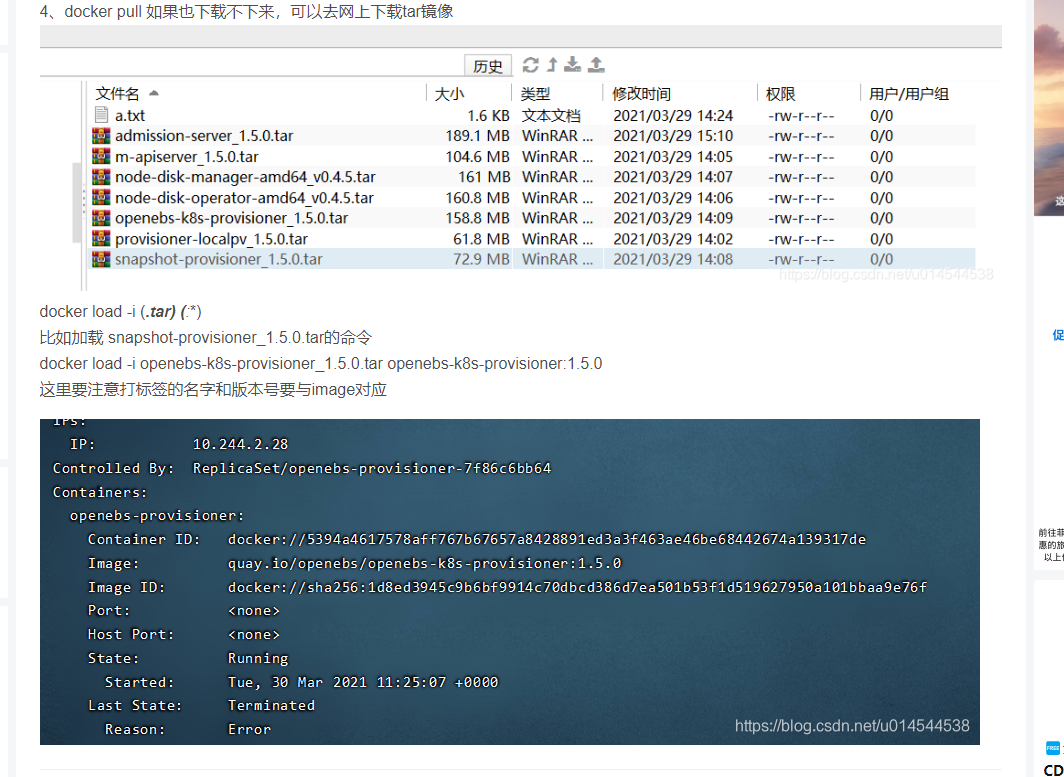

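When I tried yesterday, Docker Hub itself was unreachable. If docker pull cannot fetch the images either, you can find a tar archive of the image online and use the docker load command to import it into the local image store.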

Example:

docker load -i openebs-k8s-provisioner_1.5.0.tar
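If one machine can reach the registry, the archive can also be produced there with docker save and copied over. The sketch below is one way to do that; the helper names image_to_tar, save_image, and load_image are hypothetical, and the file naming simply mirrors the example above.

```shell
#!/bin/sh
# Derive an archive name from an image reference:
#   openebs/openebs-k8s-provisioner:1.5.0 -> openebs-k8s-provisioner_1.5.0.tar
image_to_tar() {
  name="${1##*/}"                          # drop registry and namespace
  printf '%s.tar\n' "$name" | tr ':' '_'   # a colon is awkward in file names
}

# On a machine that can reach the registry: pull the image and write the archive.
save_image() {
  docker pull "$1" && docker save -o "$(image_to_tar "$1")" "$1"
}

# On the target node: import the archive into the local image store.
load_image() {
  docker load -i "$1"
}

# Usage (not executed by this snippet):
#   save_image openebs/openebs-k8s-provisioner:1.5.0
#   scp openebs-k8s-provisioner_1.5.0.tar <node>:/tmp/
#   load_image /tmp/openebs-k8s-provisioner_1.5.0.tar
```

An archive written by docker save for a tagged image records the repository name and tag, so docker load takes only the archive and no image argument.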

See this article for reference.

Final result:

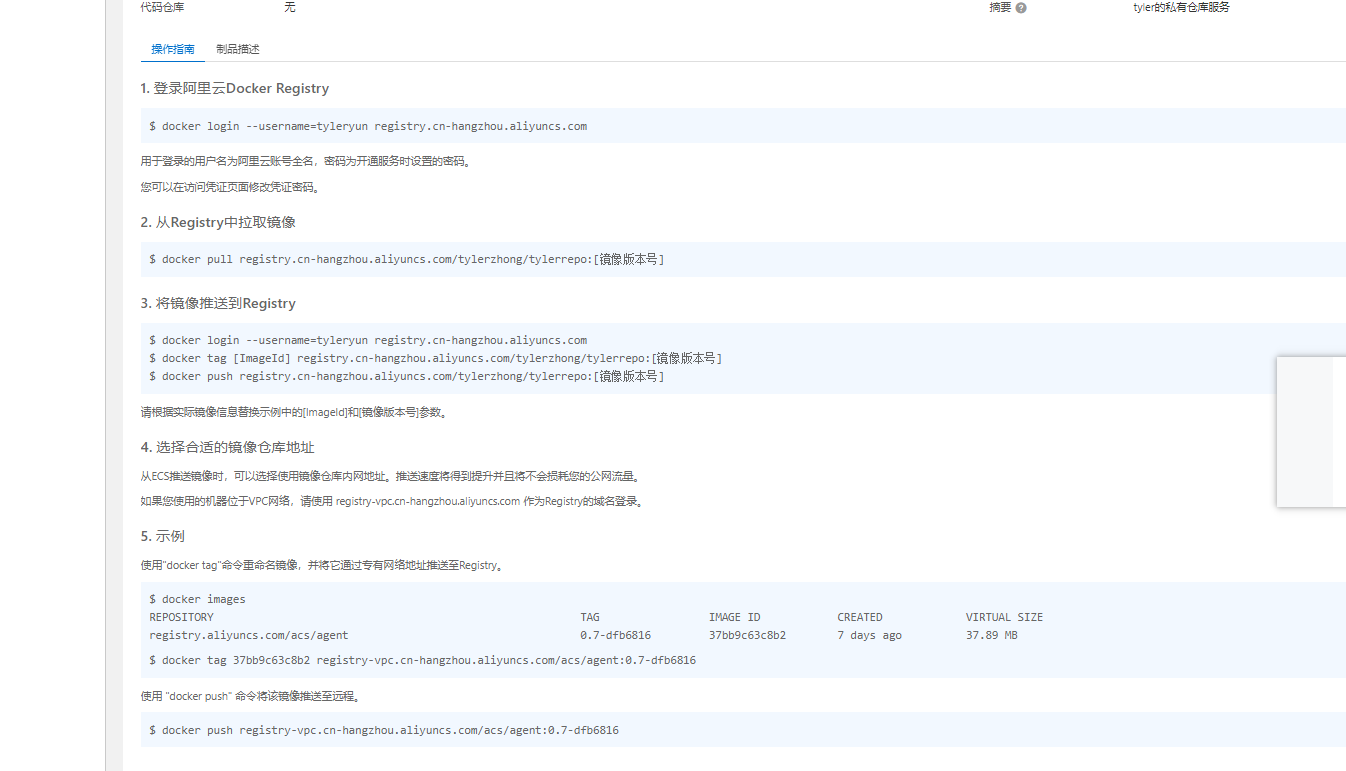

Finally, you can also push the images you have pulled to Alibaba Cloud:

See the Alibaba Cloud documentation.
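Pushing to a private registry generally follows the usual docker tag and docker push steps. The following is a minimal sketch and not the procedure from the Alibaba Cloud documentation; the function names mirror_ref and push_to_mirror and the namespace my-namespace are hypothetical placeholders.

```shell
#!/bin/sh
# Build the target reference: $1 is the registry plus namespace,
# $2 is the source image. The image name and tag are kept unchanged.
mirror_ref() {
  printf '%s/%s\n' "$1" "${2##*/}"
}

# Retag a local image for the mirror and push it.
push_to_mirror() {
  target="$(mirror_ref "$1" "$2")"
  docker tag "$2" "$target" && docker push "$target"
}

# Usage (not executed by this snippet; my-namespace is a placeholder):
#   docker login registry.cn-hangzhou.aliyuncs.com
#   push_to_mirror registry.cn-hangzhou.aliyuncs.com/my-namespace \
#     openebs/openebs-k8s-provisioner:1.5.0
```

Once pushed, the image fields in the manifest would need to point at the mirrored references for the cluster to pull from there.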
