This article looks at a problem where an HBase RegionServer crashes frequently, and at how to fix it.

Error log

2019-09-21 20:42:17,264 INFO org.apache.hadoop.hbase.ScheduledChore: Chore: CompactionChecker missed its start time

2019-09-21 20:42:17,273 WARN org.apache.hadoop.hbase.util.JvmPauseMonitor: Detected pause in JVM or host machine (eg GC): pause of approximately 156013ms

GC pool 'ParNew' had collection(s): count=1 time=156080ms

2019-09-21 20:42:17,264 WARN org.apache.hadoop.hbase.util.Sleeper: We slept 158843ms instead of 3000ms, this is likely due to a long garbage collecting pause and it's usually bad, see http://hbase.apache.org/book.html#trouble.rs.runtime.zkexpired

2019-09-21 20:42:17,281 WARN org.apache.hadoop.hbase.ipc.RpcServer: (responseTooSlow): {"call":"Scan(org.apache.hadoop.hbase.protobuf.generated.ClientProtos$ScanRequest)","starttimems":1569069581136,"responsesize":2051,"method":"Scan","processingtimems":156145,"client":"10.97.202.19:58322","queuetimems":0,"class":"HRegionServer"}

2019-09-21 20:42:17,300 FATAL org.apache.hadoop.hbase.regionserver.HRegionServer: ABORTING region server hdh19,60020,1568940808648: org.apache.hadoop.hbase.YouAreDeadException: Server REPORT rejected; currently processing hdh19,60020,1568940808648 as dead server
    at org.apache.hadoop.hbase.master.ServerManager.checkIsDead(ServerManager.java:426)
    at org.apache.hadoop.hbase.master.ServerManager.regionServerReport(ServerManager.java:331)
    at org.apache.hadoop.hbase.master.MasterRpcServices.regionServerReport(MasterRpcServices.java:345)
    at org.apache.hadoop.hbase.protobuf.generated.RegionServerStatusProtos$RegionServerStatusService$2.callBlockingMethod(RegionServerStatusProtos.java:8617)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2170)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:109)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:185)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:165)
org.apache.hadoop.hbase.YouAreDeadException: org.apache.hadoop.hbase.YouAreDeadException: Server REPORT rejected; currently processing hdh19,60020,1568940808648 as dead server
    at org.apache.hadoop.hbase.master.ServerManager.checkIsDead(ServerManager.java:426)
    at org.apache.hadoop.hbase.master.ServerManager.regionServerReport(ServerManager.java:331)
    at org.apache.hadoop.hbase.master.MasterRpcServices.regionServerReport(MasterRpcServices.java:345)
    at org.apache.hadoop.hbase.protobuf.generated.RegionServerStatusProtos$RegionServerStatusService$2.callBlockingMethod(RegionServerStatusProtos.java:8617)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2170)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:109)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:185)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:165)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)
    at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:95)
    at org.apache.hadoop.hbase.protobuf.ProtobufUtil.getRemoteException(ProtobufUtil.java:327)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.tryRegionServerReport(HRegionServer.java:1158)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:966)
    at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.hbase.ipc.RemoteWithExtrasException(org.apache.hadoop.hbase.YouAreDeadException): org.apache.hadoop.hbase.YouAreDeadException: Server REPORT rejected; currently processing hdh19,60020,1568940808648 as dead server

......

2019-09-21 20:42:17,621 INFO org.apache.zookeeper.ClientCnxn: Unable to reconnect to ZooKeeper service, session 0x86cf6a57553f9a7 has expired, closing socket connection

2019-09-21 20:42:17,621 FATAL org.apache.hadoop.hbase.regionserver.HRegionServer: ABORTING region server hdh19,60020,1568940808648: regionserver:60020-0x86cf6a57553f9a7, quorum=hdh12:2181,hdh53:2181,hdh1-07.p.xyidc:2181,hdh52:2181,hdh1-10.p.xyidc:2181, baseZNode=/hbase regionserver:60020-0x86cf6a57553f9a7 received expired from ZooKeeper, aborting

org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired
    at org.apache.hadoop.hbase.zookeeper.ZooKeeperWatcher.connectionEvent(ZooKeeperWatcher.java:700)
    at org.apache.hadoop.hbase.zookeeper.ZooKeeperWatcher.process(ZooKeeperWatcher.java:611)
    at org.apache.zookeeper.ClientCnxn$EventThread.processEvent(ClientCnxn.java:522)
    at org.apache.zookeeper.ClientCnxn$EventThread.run(ClientCnxn.java:498)

2019-09-21 20:42:42,269 ERROR org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper: ZooKeeper getChildren failed after 4 attempts

2019-09-21 20:42:42,269 WARN org.apache.hadoop.hbase.zookeeper.ZKUtil: regionserver:60020-0x86cf6a57553f9a7, quorum=hdh12:2181,hdh53:2181,hdh1-07.p.xyidc:2181,hdh52:2181,hdh1-10.p.xyidc:2181, baseZNode=/hbase Unable to list children of znode /hbase/replication/rs/hdh19,60020,1568940808648

org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/replication/rs/hdh19,60020,1568940808648
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:127)
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)
    at org.apache.zookeeper.ZooKeeper.getChildren(ZooKeeper.java:1468)
    at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getChildren(RecoverableZooKeeper.java:295)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchForNewChildren(ZKUtil.java:456)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchThem(ZKUtil.java:484)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenBFSAndWatchThem(ZKUtil.java:1476)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.deleteNodeRecursivelyMultiOrSequential(ZKUtil.java:1398)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.deleteNodeRecursively(ZKUtil.java:1280)
    at org.apache.hadoop.hbase.replication.ReplicationQueuesZKImpl.removeAllQueues(ReplicationQueuesZKImpl.java:187)
    at org.apache.hadoop.hbase.replication.regionserver.ReplicationSourceManager.join(ReplicationSourceManager.java:310)
    at org.apache.hadoop.hbase.replication.regionserver.Replication.join(Replication.java:180)
    at org.apache.hadoop.hbase.replication.regionserver.Replication.stopReplicationService(Replication.java:172)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.stopServiceThreads(HRegionServer.java:2162)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:1088)
    at java.lang.Thread.run(Thread.java:748)

2019-09-21 20:42:42,270 ERROR org.apache.hadoop.hbase.zookeeper.ZooKeeperWatcher: regionserver:60020-0x86cf6a57553f9a7, quorum=hdh12:2181,hdh53:2181,hdh1-07.p.xyidc:2181,hdh52:2181,hdh1-10.p.xyidc:2181, baseZNode=/hbase Received unexpected KeeperException, re-throwing exception

org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/replication/rs/hdh19,60020,1568940808648
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:127)
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)
    at org.apache.zookeeper.ZooKeeper.getChildren(ZooKeeper.java:1468)
    at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getChildren(RecoverableZooKeeper.java:295)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchForNewChildren(ZKUtil.java:456)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchThem(ZKUtil.java:484)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenBFSAndWatchThem(ZKUtil.java:1476)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.deleteNodeRecursivelyMultiOrSequential(ZKUtil.java:1398)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.deleteNodeRecursively(ZKUtil.java:1280)
    at org.apache.hadoop.hbase.replication.ReplicationQueuesZKImpl.removeAllQueues(ReplicationQueuesZKImpl.java:187)
    at org.apache.hadoop.hbase.replication.regionserver.ReplicationSourceManager.join(ReplicationSourceManager.java:310)
    at org.apache.hadoop.hbase.replication.regionserver.Replication.join(Replication.java:180)

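The production log shows what happened. The RegionServer was stalled by a very long GC pause (a single ParNew collection of about 156 seconds), so it could not send heartbeats to ZooKeeper. ZooKeeper expired the session, removed the HBase node, and closed the connection, and the Master rejected the server's next report with YouAreDeadException. In this situation HBase deliberately aborts a RegionServer that can no longer reach ZooKeeper, because requests destined for the timed-out node may already have been redirected to other nodes.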
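To check how often this happens, one option is to scan the RegionServer log for JvmPauseMonitor warnings and flag every pause that is at least as long as the ZooKeeper session timeout. The following is a minimal sketch, not part of HBase: the function name `find_long_pauses` is hypothetical, and the 90000 ms timeout is an assumed value rather than one taken from this cluster.

```python
import re

# Matches the JvmPauseMonitor message format seen in the log above.
PAUSE_RE = re.compile(r"pause of approximately (\d+)ms")


def find_long_pauses(log_lines, session_timeout_ms):
    """Return pause durations (ms) that meet or exceed the session timeout."""
    pauses = []
    for line in log_lines:
        match = PAUSE_RE.search(line)
        if match:
            pause_ms = int(match.group(1))
            if pause_ms >= session_timeout_ms:
                pauses.append(pause_ms)
    return pauses


sample = [
    "2019-09-21 20:42:17,273 WARN org.apache.hadoop.hbase.util.JvmPauseMonitor: "
    "Detected pause in JVM or host machine (eg GC): pause of approximately 156013ms",
]

# 90000 ms is an assumed session timeout; substitute the value your cluster uses.
print(find_long_pauses(sample, session_timeout_ms=90000))  # prints [156013]
```

Any pause reported this way is long enough to expire the session on its own, regardless of network conditions.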

Solution

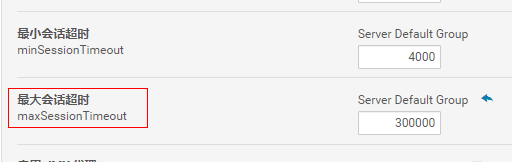

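Increase the ZooKeeper session timeout used by HBase.

Setting the timeout on the HBase side alone (`zookeeper.session.timeout`) is not enough. The ZooKeeper server caps every session at its own maximum session timeout (`maxSessionTimeout`), so that value has to be raised as well.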
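The reason both sides need changing is that the ZooKeeper server clamps the timeout a client requests to the range between its `minSessionTimeout` and `maxSessionTimeout`, which default to 2 and 20 times `tickTime`. The sketch below models that negotiation. The function name is hypothetical, and the 180000 ms request and 2000 ms `tickTime` are illustrative assumptions, not values read from this cluster.

```python
def negotiated_session_timeout(requested_ms, tick_time_ms=2000,
                               min_session_timeout_ms=None,
                               max_session_timeout_ms=None):
    """Model how a ZooKeeper server clamps a client's requested session timeout."""
    # When unset, ZooKeeper falls back to 2 * tickTime and 20 * tickTime.
    lower = 2 * tick_time_ms if min_session_timeout_ms is None else min_session_timeout_ms
    upper = 20 * tick_time_ms if max_session_timeout_ms is None else max_session_timeout_ms
    return max(lower, min(requested_ms, upper))


# HBase asks for 180 s, but the server-side default cap is 20 * 2000 ms.
print(negotiated_session_timeout(180000))  # prints 40000

# After raising maxSessionTimeout on the ZooKeeper side, the request is honored.
print(negotiated_session_timeout(180000, max_session_timeout_ms=180000))  # prints 180000
```

A longer timeout only hides the symptom. It also delays detection of a RegionServer that has really died, so reducing the GC pause itself is still worth pursuing.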
