本文主要是介绍CLR Threadpool 的内部机制,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

转自:New and Improved CLR 4 Thread Pool Engine

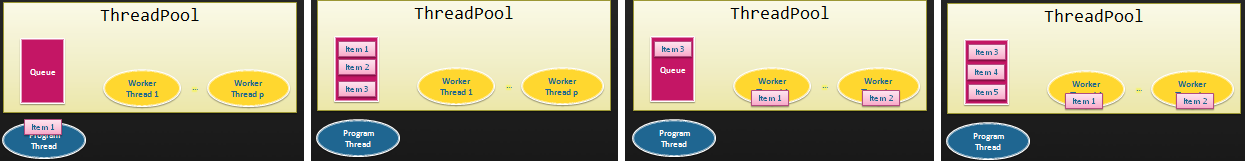

A way to think of the CLR thread pool and how it gets used, e.g. when you call ThreadPool.QueueUserWorkItem, is to picture a global queue where work items (essentially delegates) get queued on a global queue and multiple threads pick them out in a First In First Out order. The FIFO order is not something that is documented or guaranteed, but my personal guess is that too many applications rely on it, so I don’t see it changing any time soon.

The image on the left shows the main program thread as it is creating a work item; the second image shows the global thread pool queue after code has queued 3 work items; the third image shows 2 threads from the thread pool that have grabbed 2 work items and executing them. If in the context of those work items (i.e. from the executing code in the delegates) more work items get created for the CLR thread pool they end up on the global queue (see image on the right) and life goes on.

CLR Thread Pool v4 from a System.Threading.Tasks perspective

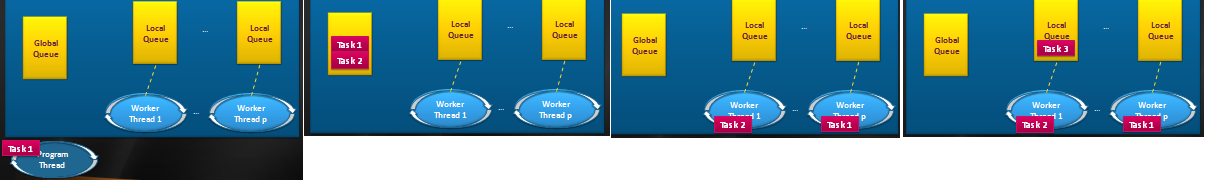

In CLR 4, the thread pool engine has had some improvements made to it (it has been having positive tweaks in every release of the CLR) and part of these improvements are some performance gains that are achievable when using the new System.Threading.Tasks.Task type. I'll show a code example in another post, but you can think of creating and starting a Task (passing it a delegate) as the equivalent of calling QueueUserWorkItem on the ThreadPool. A way to visualize the CLR thread pool when used via the Task-based API is that, in addition to the single global queue, each thread in the thread pool has its own local queue:

Just as with normal thread pool usage, the main program thread may create Tasks that will get queued on the global queue (e.g. Task1 and Task2) and threads will grab those Tasks typically in a FIFO manner. Where things diverge is that any new Tasks (e.g. Task3) created in the context of the executing Task (e.g. Task2) end up on a local queue for that thread pool thread.

Why Local Queues

With the era of manycore machines upon us and devs taking advantage of parallelism, the number of threads in the thread pool is likely to increase: at a minimum equal to the number of cores for compute bound operations, and likely more due to injection of additional threads as a result of IO bound operations or blocking calls stalling the CPU. Bottom line: more cores = more threads.

With more threads competing for work items, it is not optimal to put up with the contention issues of a single queue that all of them are trying to access safely. This would be amplified by the goal of fine grained parallelism where each work item finishes fairly quickly, so the trips to the global queue would be frequent.

It is for this reason we introduce a local queue per thread, where additional tasks get queued (with no contention) and will then be retrieved by that same thread (again with no contention).

LIFO

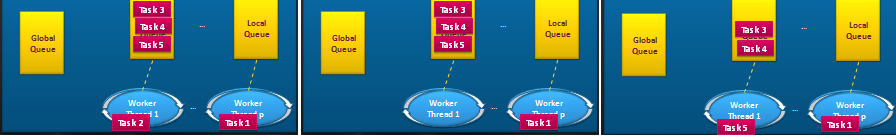

So, picking up from the picture further up, let's assume that Task2 additionally creates two more Tasks e.g. Task4 and Task5.

The tasks end up on the local queue as expected, but which Task does the thread pick to execute when it completes its current task (i.e. Task2)? The initially surprising answer is that it could be Task5, which is the last one that was queued – in other words a LIFO algorithm can be used for the local queues.

The reason that this is a good thing is locality. In most scenarios the data required by the last created Task in the queue is still hot in the cache, so it makes sense to pull that down and execute it. Obviously, this means there are no promises on ordering, but some level of fairness is relinquished in the sake of better performance.

Work Stealing

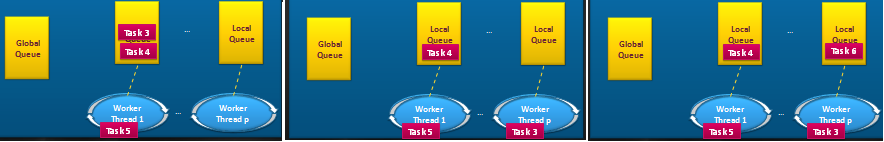

If the only enhancements were the introduction of LIFO local queues then performance is greatly increased, but you should be concerned. You should be concerned about what happens when another thread in the thread pool (likely executing on another core) finishes its work. Luckily, you don't have to be:

The other worker thread completes Task1 and then goes to its local queue and finds it empty; it then goes to the global queue and finds it empty. We don't want it sitting there idle so a beautiful thing happens: work stealing. The thread goes to a local queue of another thread and "steals" a Task and executes it! That way we keep all our cores busy and this contributes to our fine grained parallelism load balancing goal. In the image above notice that "stealing" happens in a FIFO manner, which again for locality reasons is good (its data would be cold in the cache). Furthermore, in many divide and conquer scenarios, tasks generated earlier on are likely to generate more work (e.g. Task6) themselves, which would end up now on this other thread's queue and hence reduce frequent stealing.

Nitpicking note: further up, I mentioned "no contention"; clearly work stealing is the exception to that rule.

What's Next

In addition to the links sprinkled above, you can find a simple implementation of a work stealing threadpool on Joe's blog post and some of the concepts above are touched on in this MSDN mag article by Eric and Erika. If you want to see the pictures above in a fully animated slide, get my PDC deck (slide 8) from this page. Do note that the implementation I am talking about above did not ship with the September CTP of Visual Studio 2010, but is slated for the next drop. Also note that all internal implementation details are subject to change and just shared here for the geek factor ;-)

So you can see how using the Task-based API will yield cool perf gains under the covers. An additional reason to use the new Task-based API is because of its richness. I'll touch on that in a next blog post.

这篇关于CLR Threadpool 的内部机制的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!